Complete Results

These results are based on the Carter (2019) data-generating mechanism, with a total of 756 conditions.

Average Performance

Method performance measures are aggregated across all simulated conditions to provide an overall impression of method performance. However, keep in mind that a method with a high overall ranking is not necessarily the “best” method for a particular application. To select a suitable method for your application, also consider the non-aggregated performance measures in the conditions most relevant to your application.

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | WAAPWLS (default) | 0.105 | 1 | WAAPWLS (default) | 0.105 |
| 2 | PEESE (default) | 0.107 | 2 | PEESE (default) | 0.107 |
| 3 | trimfill (default) | 0.113 | 3 | trimfill (default) | 0.113 |
| 4 | PETPEESE (default) | 0.119 | 4 | PETPEESE (default) | 0.119 |
| 5 | FMA (default) | 0.124 | 5 | FMA (default) | 0.124 |
| 5 | WLS (default) | 0.124 | 5 | WLS (default) | 0.124 |
| 7 | WILS (default) | 0.128 | 7 | WILS (default) | 0.128 |
| 8 | RoBMA (PSMA) | 0.135 | 8 | RoBMA (PSMA) | 0.137 |
| 9 | EK (default) | 0.149 | 9 | EK (default) | 0.149 |
| 10 | PET (default) | 0.149 | 10 | PET (default) | 0.149 |
| 11 | AK (AK1) | 0.163 | 11 | AK (AK1) | 0.161 |
| 12 | RMA (default) | 0.164 | 12 | RMA (default) | 0.164 |
| 13 | pcurve (default) | 0.169 | 13 | pcurve (default) | 0.165 |
| 14 | AK (AK2) | 0.189 | 14 | AK (AK2) | 0.221 |
| 15 | mean (default) | 0.249 | 15 | mean (default) | 0.249 |
| 16 | SM (3PSM) | 0.300 | 16 | SM (3PSM) | 0.288 |
| 17 | puniform (default) | 0.453 | 17 | puniform (default) | 0.421 |
| 18 | SM (4PSM) | 0.459 | 18 | SM (4PSM) | 0.479 |
| 19 | puniform (star) | 169.671 | 19 | puniform (star) | 169.671 |
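
The rankings in these tables appear to follow standard competition ranking. Methods with exactly equal values share a rank and the next rank is skipped (FMA and WLS share rank 5, followed by WILS at rank 7). EK and PET both display 0.149 but receive ranks 9 and 10, which suggests that ranks are computed on unrounded values. For bias, the order suggests ranking by absolute value. The sketch below reproduces this scheme under those assumptions. `competition_rank` is a hypothetical helper for illustration, not a function from any package used in the benchmark.

```python
def competition_rank(values, higher_is_better=False):
    """Return standard competition ("1224") ranks for a list of values.

    Exactly equal values share the best available rank, and the next rank is skipped.
    """
    # Indices sorted from best to worst; sorted() is stable, so ties keep input order.
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=higher_is_better)
    ranks = [0] * len(values)
    for position, i in enumerate(order):
        if position > 0 and values[i] == values[order[position - 1]]:
            ranks[i] = ranks[order[position - 1]]  # tie: reuse the previous method's rank
        else:
            ranks[i] = position + 1  # new value: rank is the 1-based position
    return ranks


# Displayed (rounded) RMSE values of the first seven methods in the table above.
rmse = {
    "WAAPWLS": 0.105, "PEESE": 0.107, "trimfill": 0.113, "PETPEESE": 0.119,
    "FMA": 0.124, "WLS": 0.124, "WILS": 0.128,
}
for method, rank in zip(rmse, competition_rank(list(rmse.values()))):
    print(rank, method)
```

For a measure ranked by closeness to a target, pass a transformed list instead. For example, use `[abs(b) for b in biases]` for bias.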

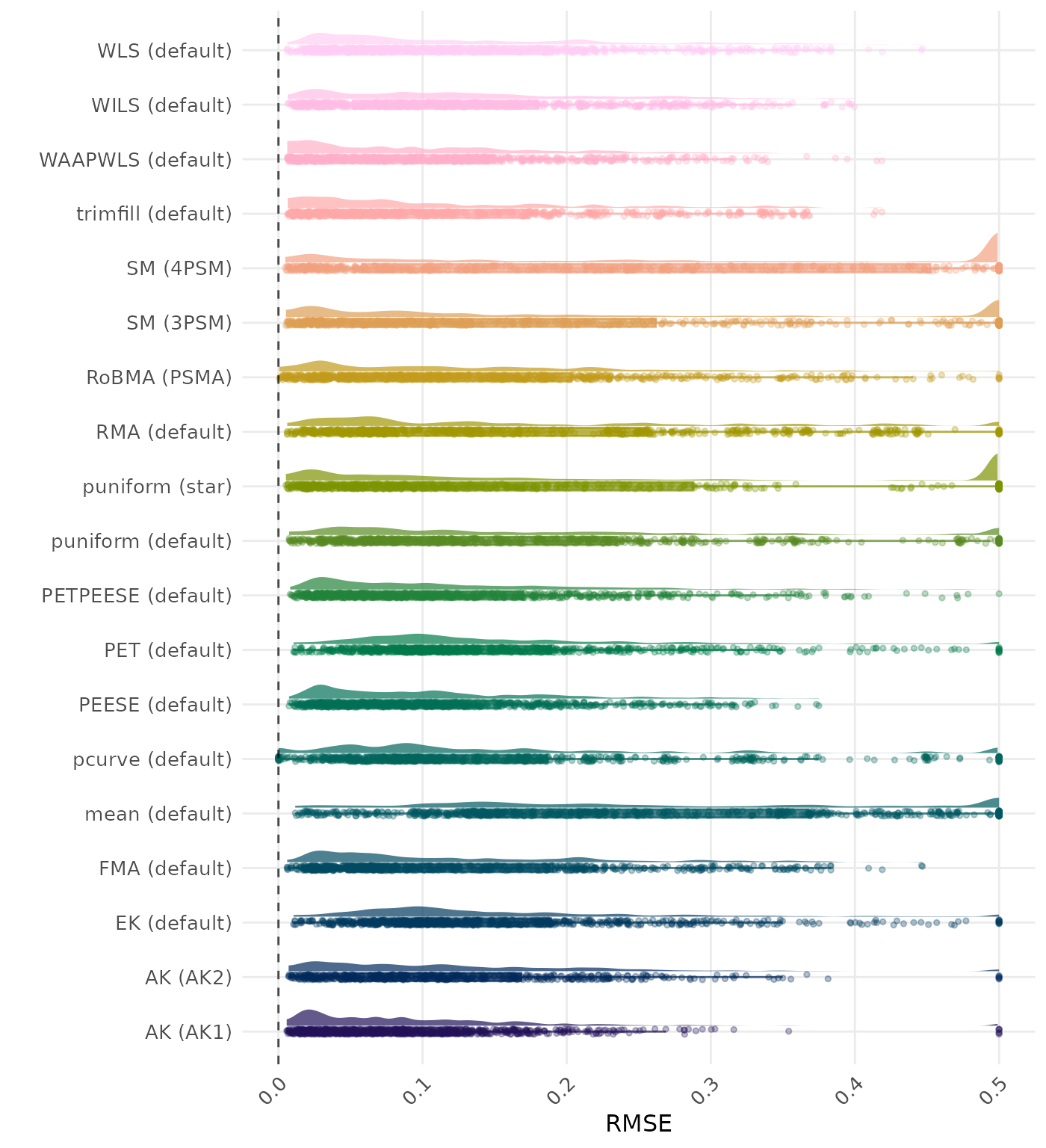

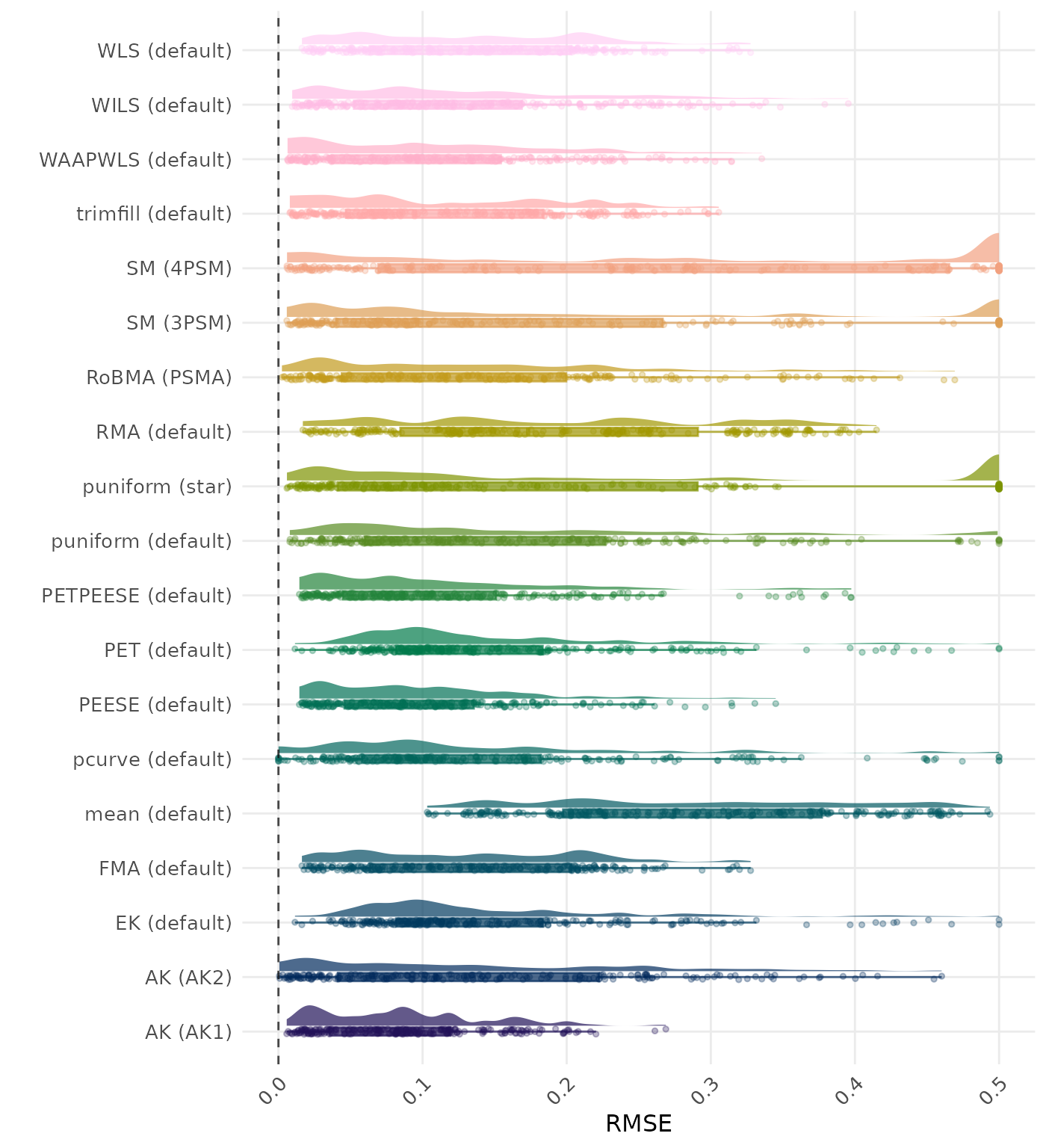

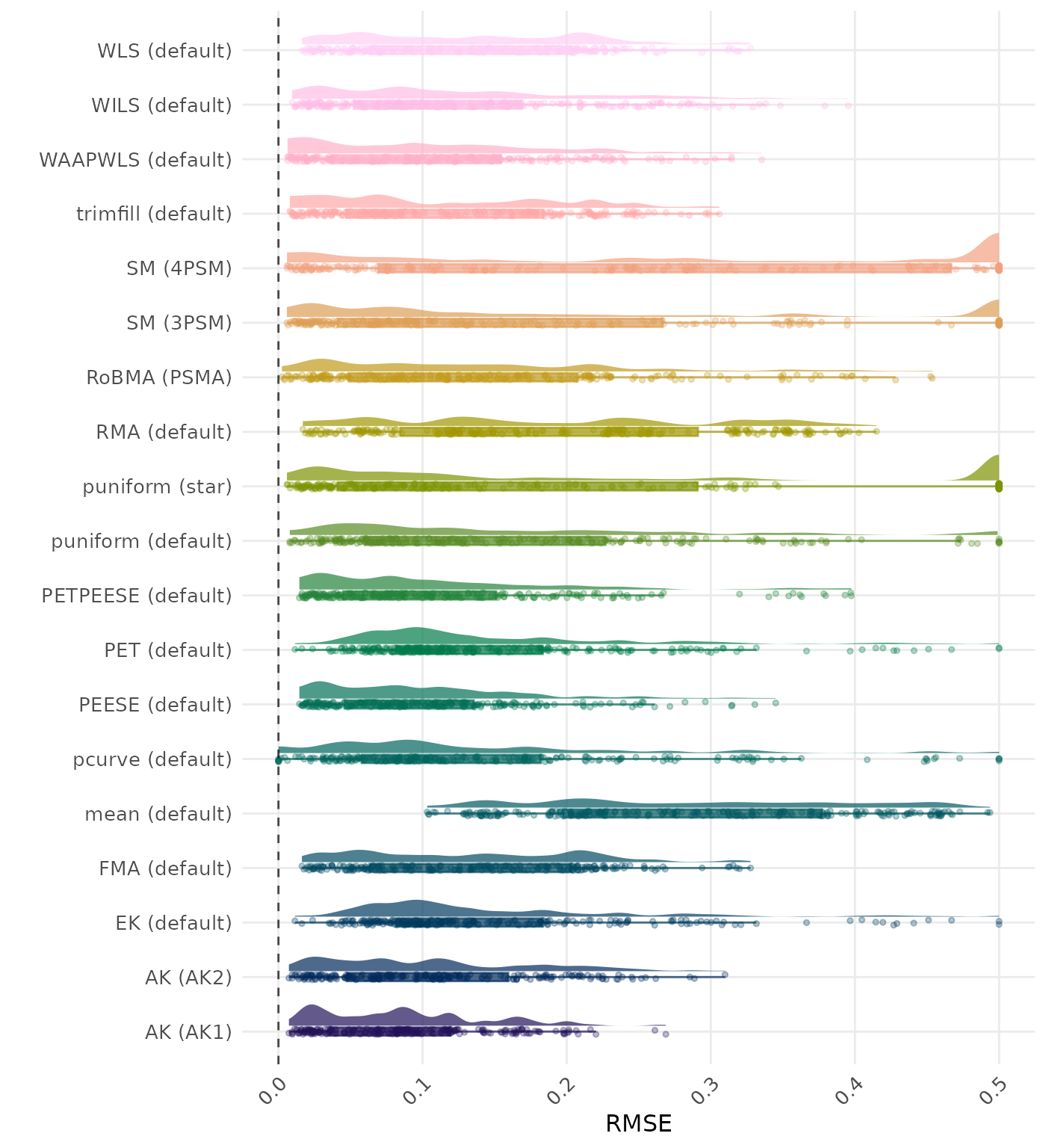

RMSE (Root Mean Square Error) is an overall summary measure of estimation performance that combines bias and empirical SE. RMSE is the square root of the average squared difference between the meta-analytic estimate and the true effect across simulation runs. A lower RMSE indicates a better method.
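
As a worked formula, suppose a condition has $R$ simulation runs with estimates $\hat{\theta}_r$ and true effect $\theta$ (notation introduced here for illustration). If the empirical SE uses a $1/R$ denominator, the RMSE splits exactly into bias and empirical SE:

$$
\mathrm{RMSE} = \sqrt{\frac{1}{R}\sum_{r=1}^{R}\left(\hat{\theta}_r - \theta\right)^2} = \sqrt{\mathrm{Bias}^2 + \mathrm{EmpSE}^2}
$$

$$
\bar{\hat{\theta}} = \frac{1}{R}\sum_{r=1}^{R}\hat{\theta}_r, \qquad \mathrm{Bias} = \bar{\hat{\theta}} - \theta, \qquad \mathrm{EmpSE} = \sqrt{\frac{1}{R}\sum_{r=1}^{R}\left(\hat{\theta}_r - \bar{\hat{\theta}}\right)^2}
$$

The identity follows from writing $\hat{\theta}_r - \theta = (\hat{\theta}_r - \bar{\hat{\theta}}) + (\bar{\hat{\theta}} - \theta)$: the cross term averages to zero. With the more common $R - 1$ denominator the identity holds only approximately.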

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | PETPEESE (default) | 0.002 | 1 | PETPEESE (default) | 0.002 |
| 2 | AK (AK1) | 0.022 | 2 | AK (AK1) | 0.022 |
| 3 | PEESE (default) | 0.025 | 3 | PEESE (default) | 0.025 |
| 4 | puniform (default) | 0.028 | 4 | puniform (default) | 0.033 |
| 5 | pcurve (default) | 0.052 | 5 | AK (AK2) | -0.035 |
| 6 | WILS (default) | -0.052 | 6 | pcurve (default) | 0.048 |
| 7 | EK (default) | -0.052 | 7 | WILS (default) | -0.052 |
| 8 | PET (default) | -0.052 | 8 | EK (default) | -0.052 |
| 9 | WAAPWLS (default) | 0.054 | 9 | PET (default) | -0.052 |
| 10 | trimfill (default) | 0.067 | 10 | WAAPWLS (default) | 0.054 |
| 11 | AK (AK2) | -0.075 | 11 | trimfill (default) | 0.067 |
| 12 | FMA (default) | 0.092 | 12 | RoBMA (PSMA) | -0.088 |
| 12 | WLS (default) | 0.092 | 13 | FMA (default) | 0.092 |
| 14 | RoBMA (PSMA) | -0.094 | 13 | WLS (default) | 0.092 |
| 15 | SM (3PSM) | -0.108 | 15 | SM (3PSM) | -0.100 |
| 16 | RMA (default) | 0.150 | 16 | RMA (default) | 0.150 |
| 17 | SM (4PSM) | -0.200 | 17 | SM (4PSM) | -0.203 |
| 18 | mean (default) | 0.235 | 18 | mean (default) | 0.235 |
| 19 | puniform (star) | -31.860 | 19 | puniform (star) | -31.860 |

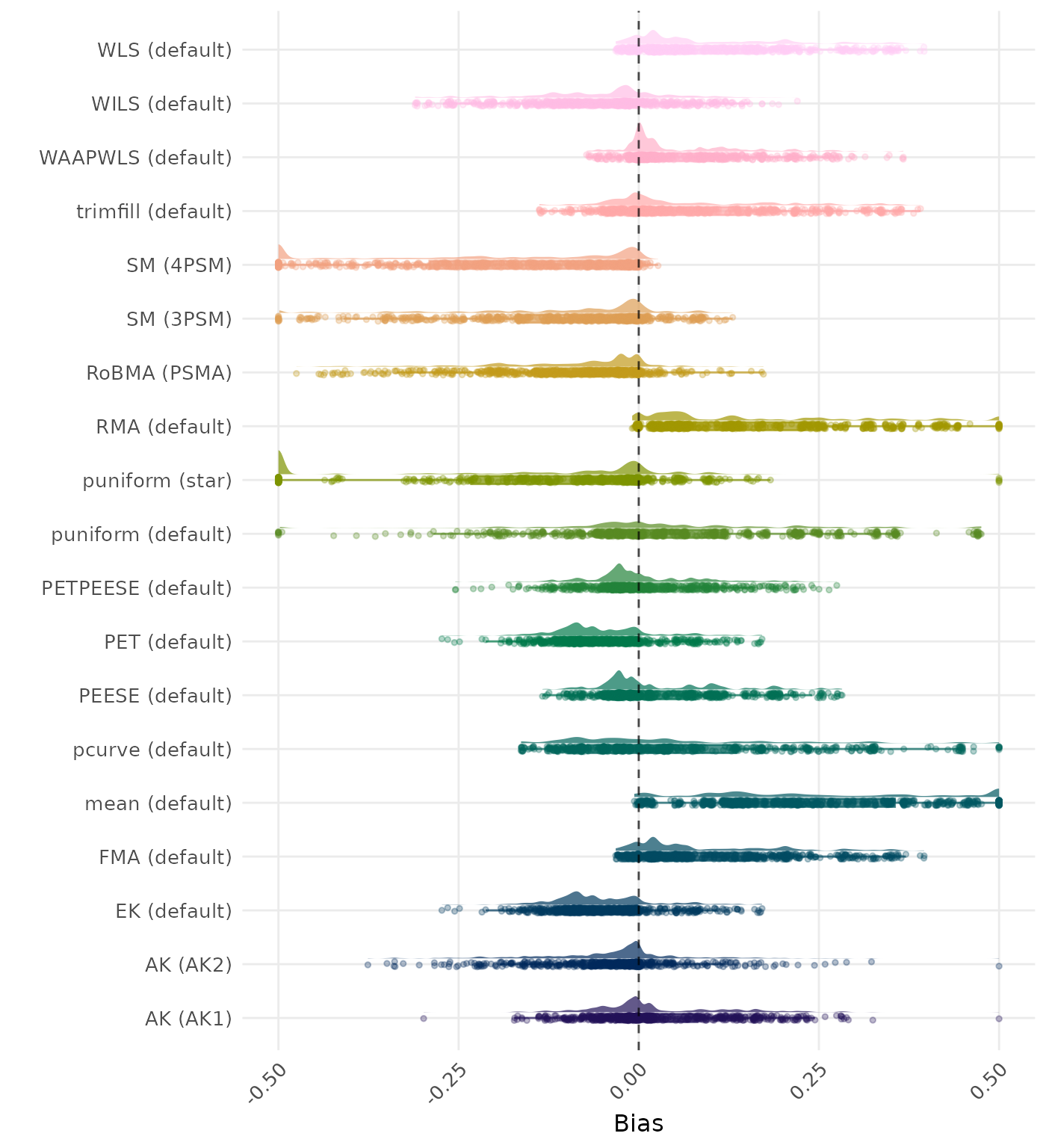

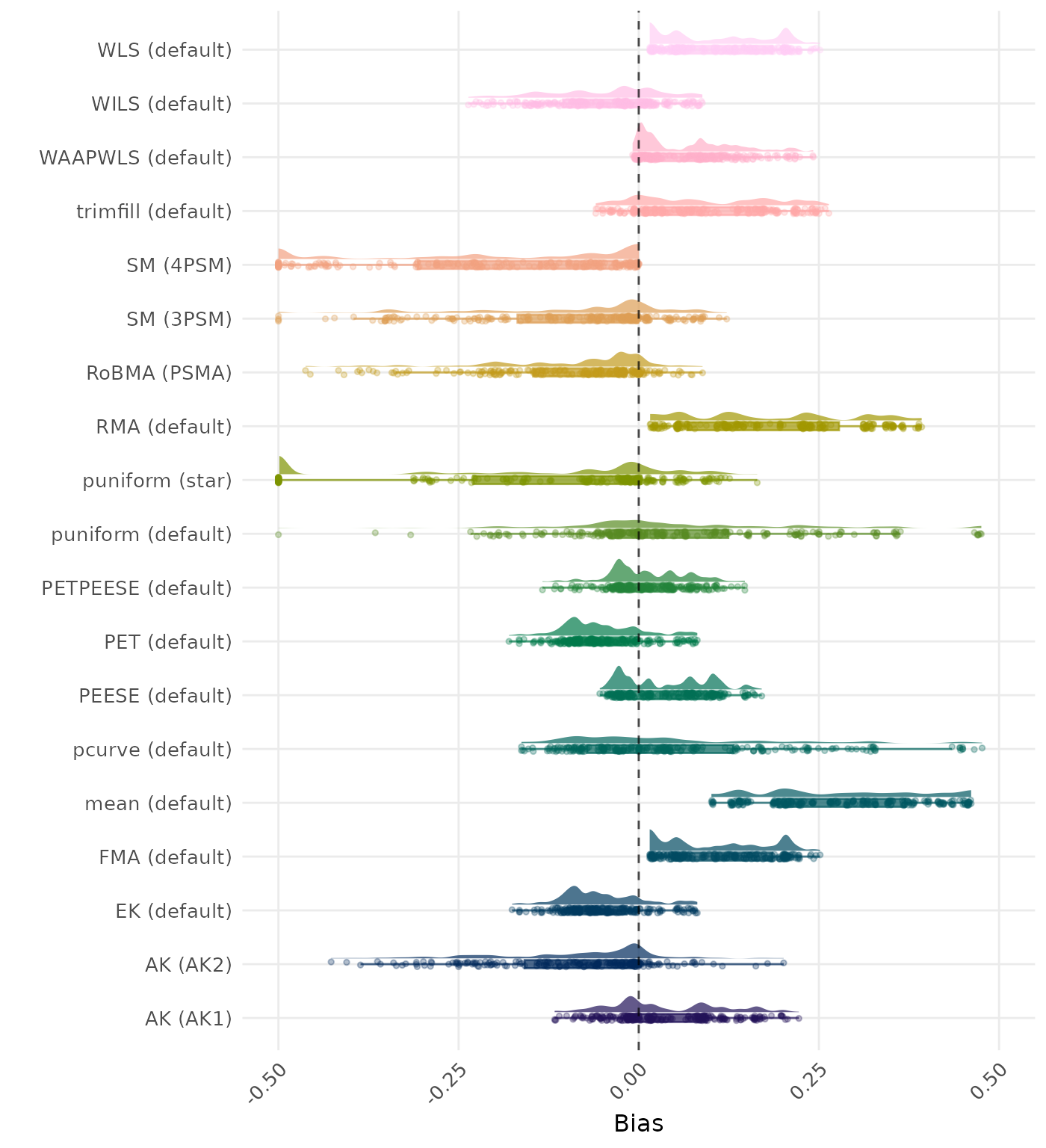

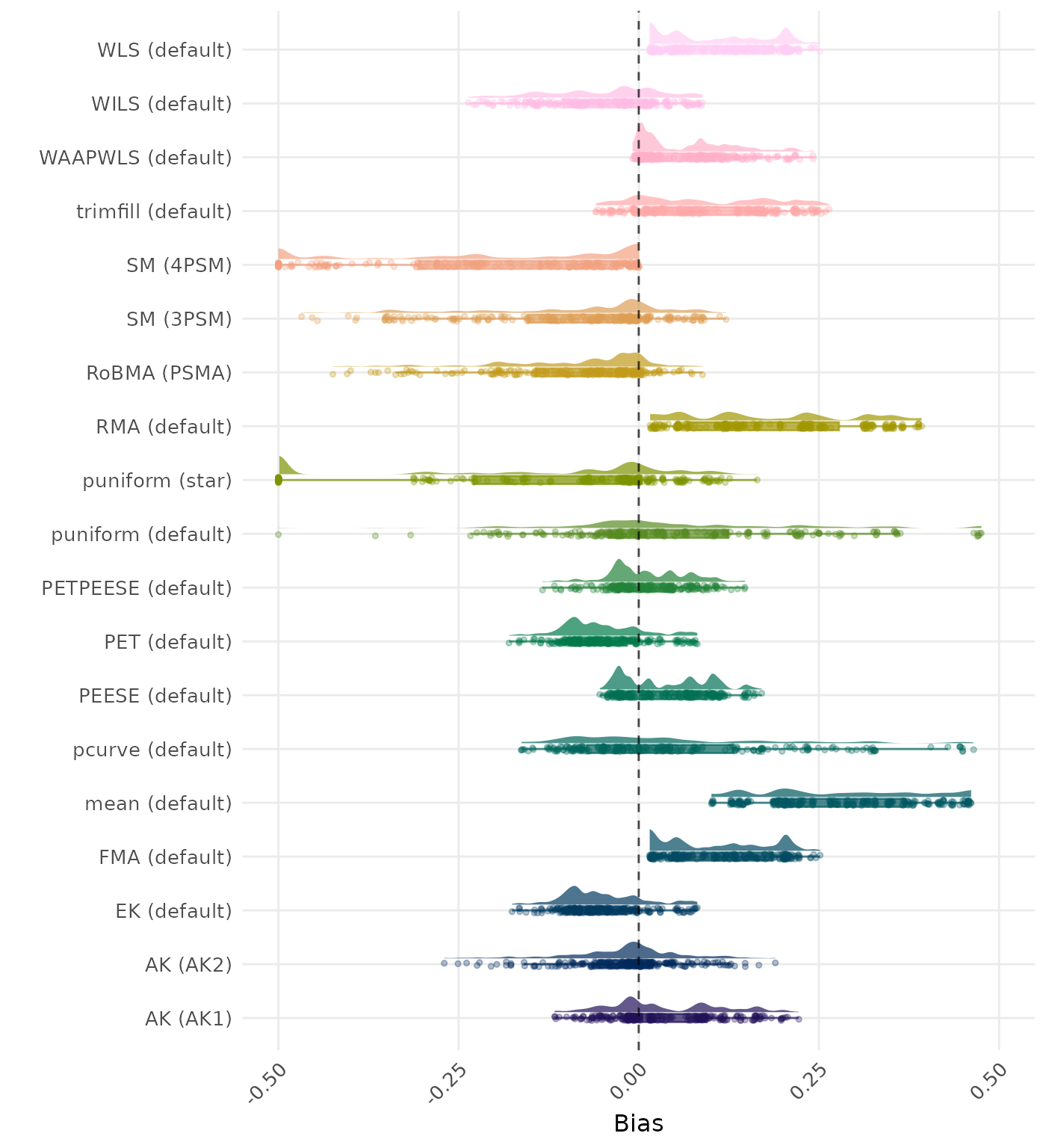

Bias is the average difference between the meta-analytic estimate and the true effect across simulation runs. Ideally, this value should be close to 0.

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | RMA (default) | 0.041 | 1 | RMA (default) | 0.041 |
| 2 | trimfill (default) | 0.045 | 2 | trimfill (default) | 0.045 |
| 3 | mean (default) | 0.052 | 3 | mean (default) | 0.052 |
| 4 | FMA (default) | 0.058 | 4 | FMA (default) | 0.058 |
| 4 | WLS (default) | 0.058 | 4 | WLS (default) | 0.058 |
| 6 | WAAPWLS (default) | 0.072 | 6 | WAAPWLS (default) | 0.072 |
| 7 | RoBMA (PSMA) | 0.074 | 7 | PEESE (default) | 0.074 |
| 8 | PEESE (default) | 0.074 | 8 | RoBMA (PSMA) | 0.086 |
| 9 | WILS (default) | 0.087 | 9 | WILS (default) | 0.087 |
| 10 | PETPEESE (default) | 0.096 | 10 | pcurve (default) | 0.094 |
| 11 | pcurve (default) | 0.097 | 11 | PETPEESE (default) | 0.096 |
| 12 | PET (default) | 0.119 | 12 | AK (AK1) | 0.117 |
| 13 | EK (default) | 0.119 | 13 | PET (default) | 0.119 |
| 14 | AK (AK1) | 0.119 | 14 | EK (default) | 0.119 |
| 15 | AK (AK2) | 0.130 | 15 | AK (AK2) | 0.184 |
| 16 | SM (3PSM) | 0.246 | 16 | SM (3PSM) | 0.235 |
| 17 | SM (4PSM) | 0.366 | 17 | puniform (default) | 0.348 |
| 18 | puniform (default) | 0.381 | 18 | SM (4PSM) | 0.386 |
| 19 | puniform (star) | 165.831 | 19 | puniform (star) | 165.831 |

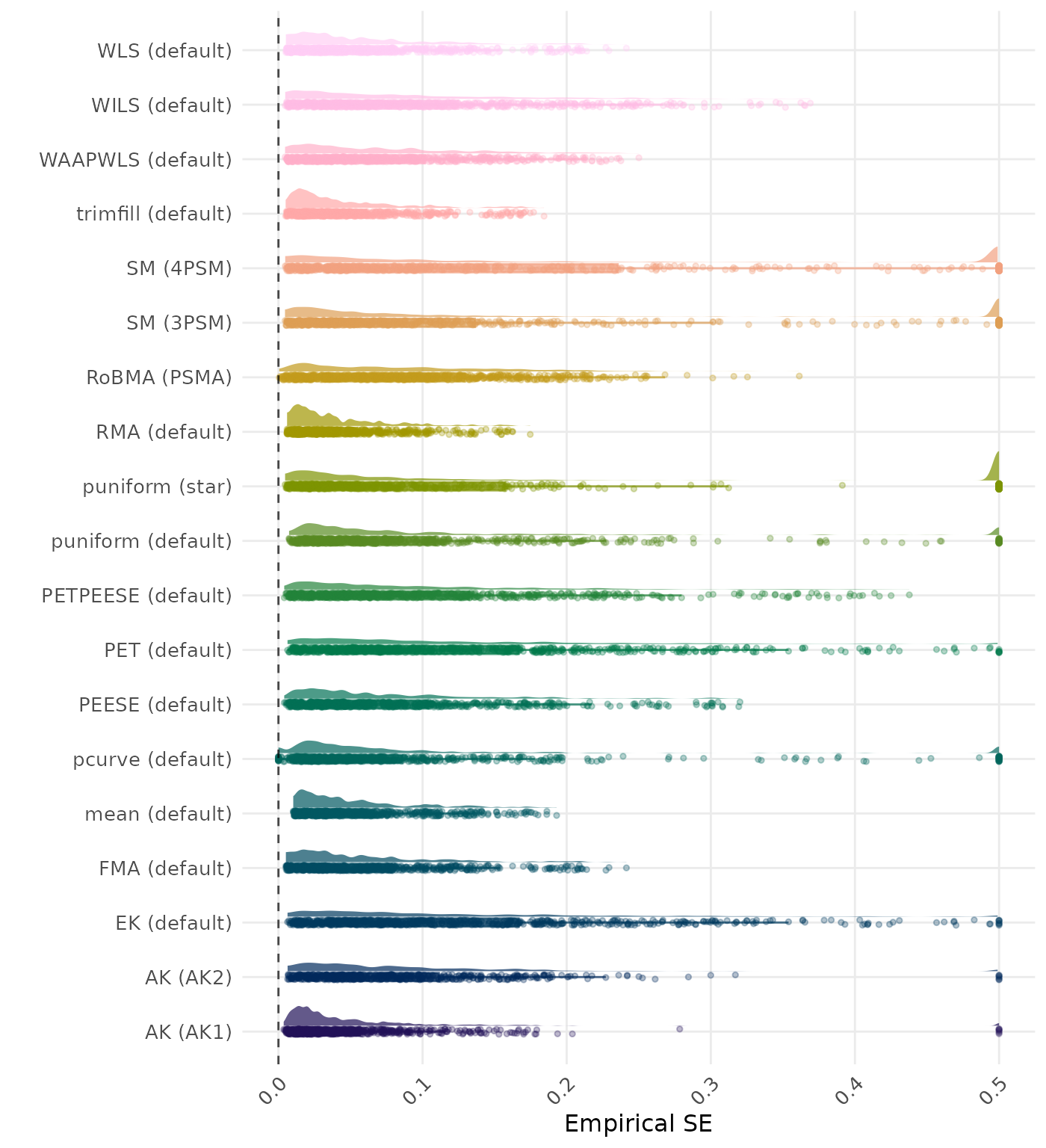

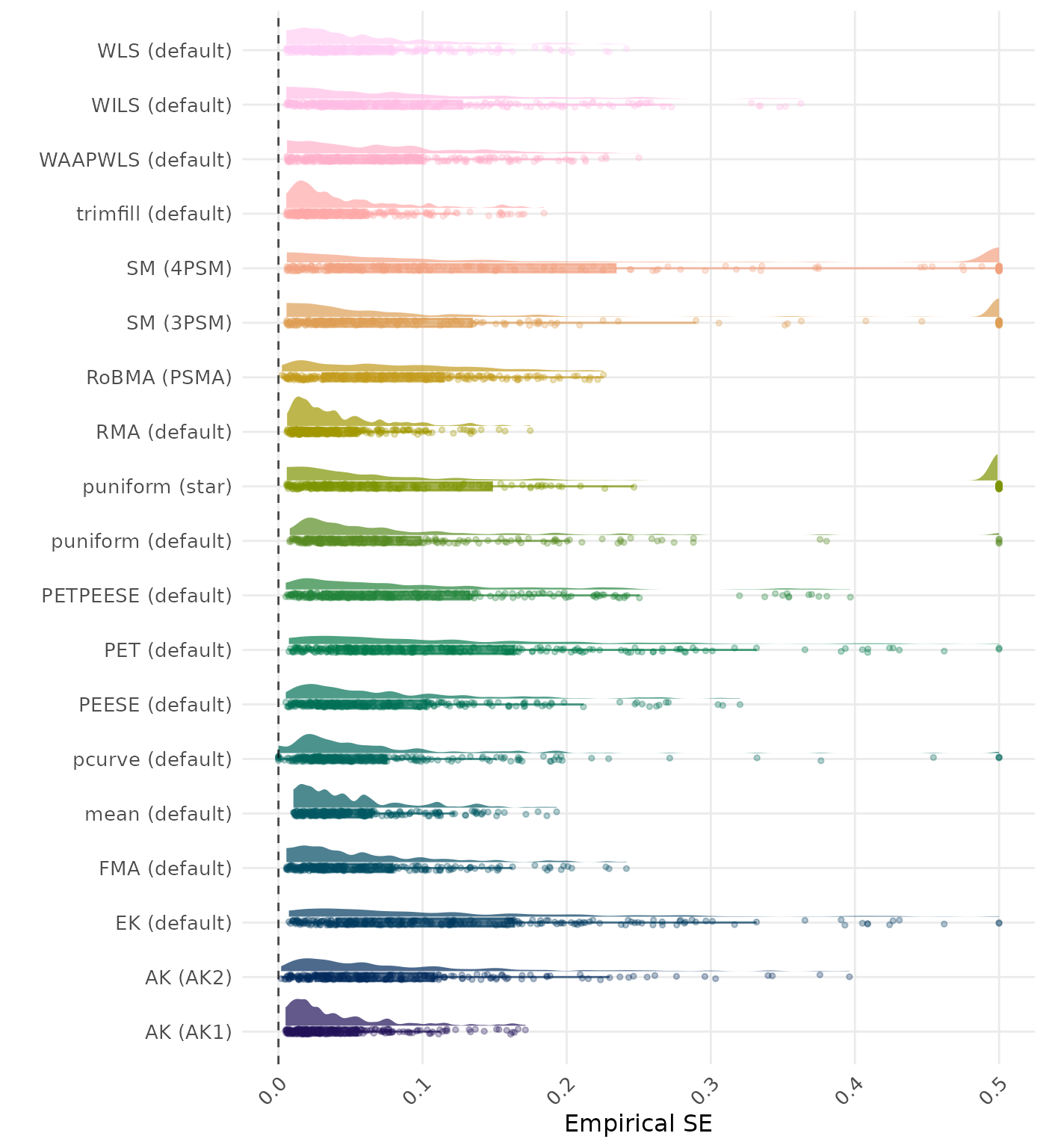

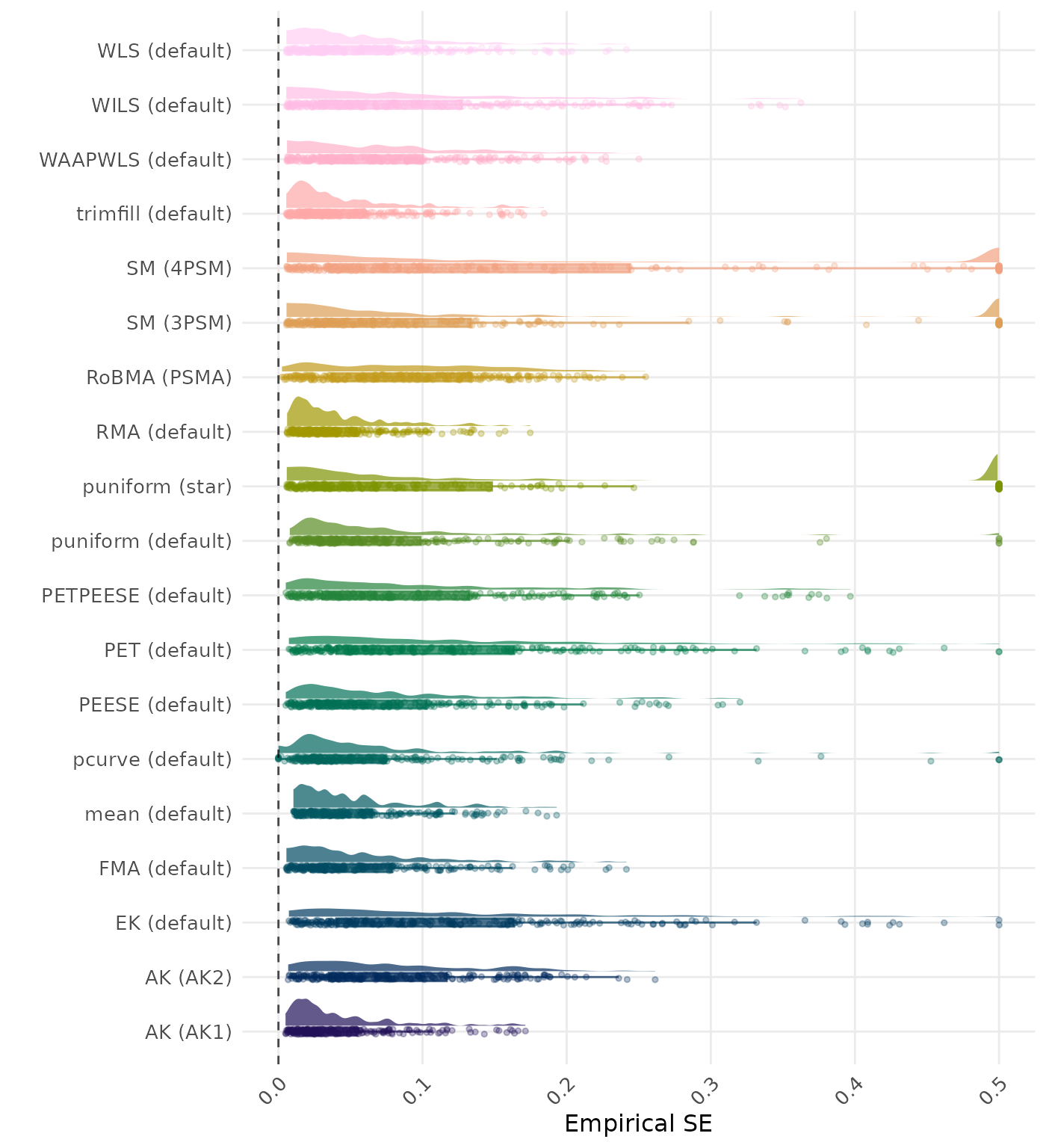

The empirical SE is the standard deviation of the meta-analytic estimate across simulation runs. A lower empirical SE indicates less variability and better method performance.
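
The three estimation measures can be computed side by side. The sketch below works for a single condition, assuming a list of estimates from repeated simulation runs and a known true effect. `estimation_metrics` is a hypothetical helper, and the $1/R$ denominator for the empirical SE is an assumption. That choice makes RMSE² equal bias² plus empirical SE² exactly.

```python
import math


def estimation_metrics(estimates, true_effect):
    """Return (bias, empirical SE, RMSE) of simulated estimates for one condition."""
    r = len(estimates)
    mean_est = sum(estimates) / r
    bias = mean_est - true_effect
    # Empirical SE: spread of the estimates around their own mean (1/R denominator).
    emp_se = math.sqrt(sum((x - mean_est) ** 2 for x in estimates) / r)
    # RMSE: spread of the estimates around the true effect.
    rmse = math.sqrt(sum((x - true_effect) ** 2 for x in estimates) / r)
    return bias, emp_se, rmse


bias, emp_se, rmse = estimation_metrics([0.1, 0.3, 0.5], true_effect=0.2)
# RMSE^2 equals bias^2 + empirical SE^2 up to floating-point error.
assert math.isclose(rmse ** 2, bias ** 2 + emp_se ** 2)
```

In this toy example, the estimates overshoot the true effect by 0.1 on average and are also spread around their own mean. RMSE reflects both sources of error.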

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | puniform (star) | 1.402 | 1 | puniform (star) | 1.402 |
| 2 | WAAPWLS (default) | 1.473 | 2 | WAAPWLS (default) | 1.473 |
| 3 | PETPEESE (default) | 1.679 | 3 | PETPEESE (default) | 1.679 |
| 4 | RoBMA (PSMA) | 1.721 | 4 | PEESE (default) | 1.725 |
| 5 | PEESE (default) | 1.725 | 5 | RoBMA (PSMA) | 1.761 |
| 6 | EK (default) | 1.827 | 6 | SM (3PSM) | 1.824 |
| 7 | SM (3PSM) | 1.841 | 7 | EK (default) | 1.827 |
| 8 | PET (default) | 1.901 | 8 | PET (default) | 1.901 |
| 9 | WILS (default) | 2.220 | 9 | WILS (default) | 2.220 |
| 10 | trimfill (default) | 2.241 | 10 | trimfill (default) | 2.244 |
| 11 | puniform (default) | 2.587 | 11 | puniform (default) | 2.533 |
| 12 | WLS (default) | 2.680 | 12 | WLS (default) | 2.680 |
| 13 | SM (4PSM) | 2.879 | 13 | SM (4PSM) | 2.820 |
| 14 | AK (AK1) | 2.896 | 14 | AK (AK1) | 2.822 |
| 15 | FMA (default) | 3.172 | 15 | FMA (default) | 3.172 |
| 16 | AK (AK2) | 3.599 | 16 | AK (AK2) | 3.480 |
| 17 | RMA (default) | 4.078 | 17 | RMA (default) | 4.078 |
| 18 | mean (default) | 7.200 | 18 | mean (default) | 7.200 |
| 19 | pcurve (default) | NaN | 19 | pcurve (default) | NaN |

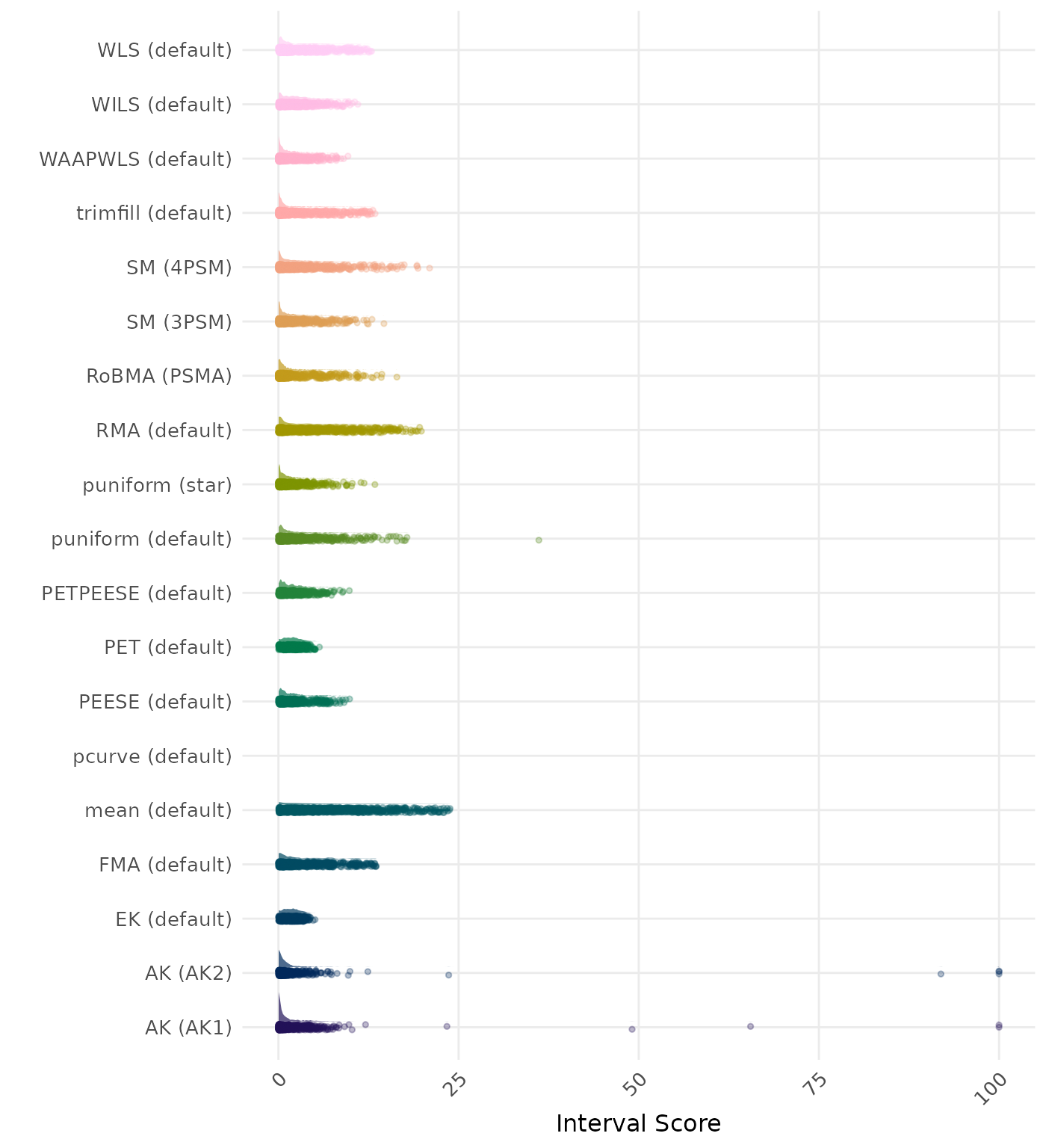

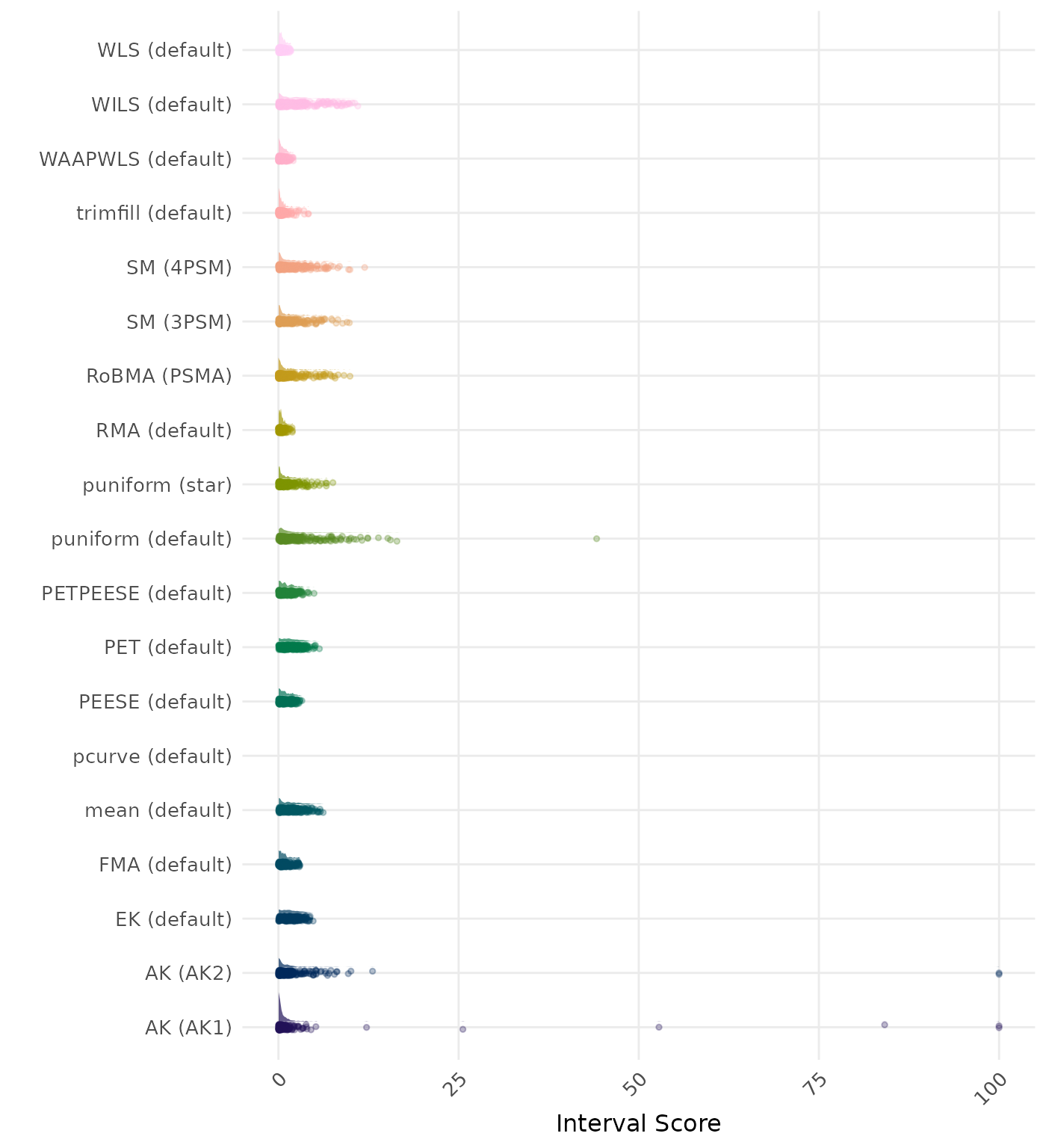

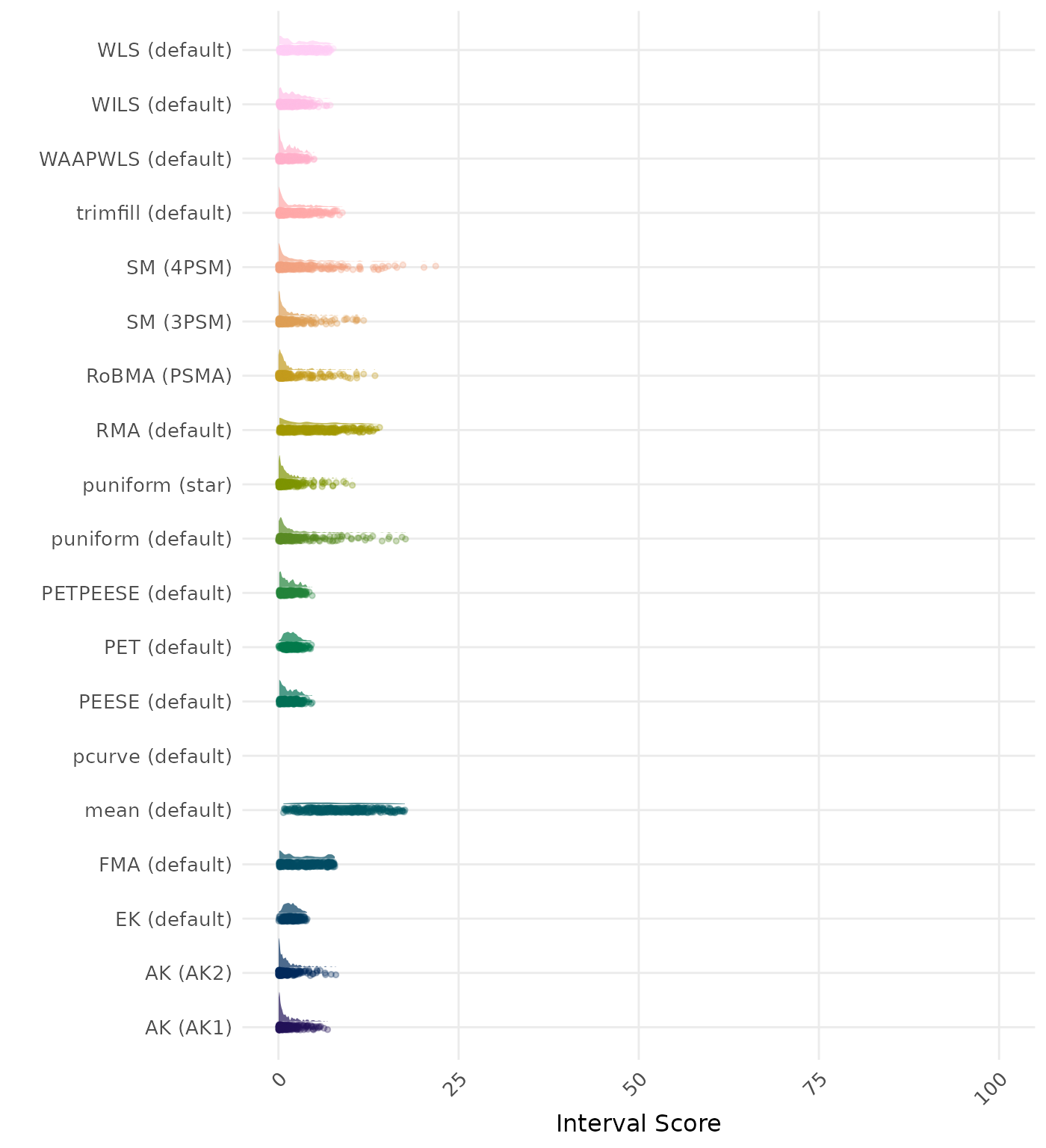

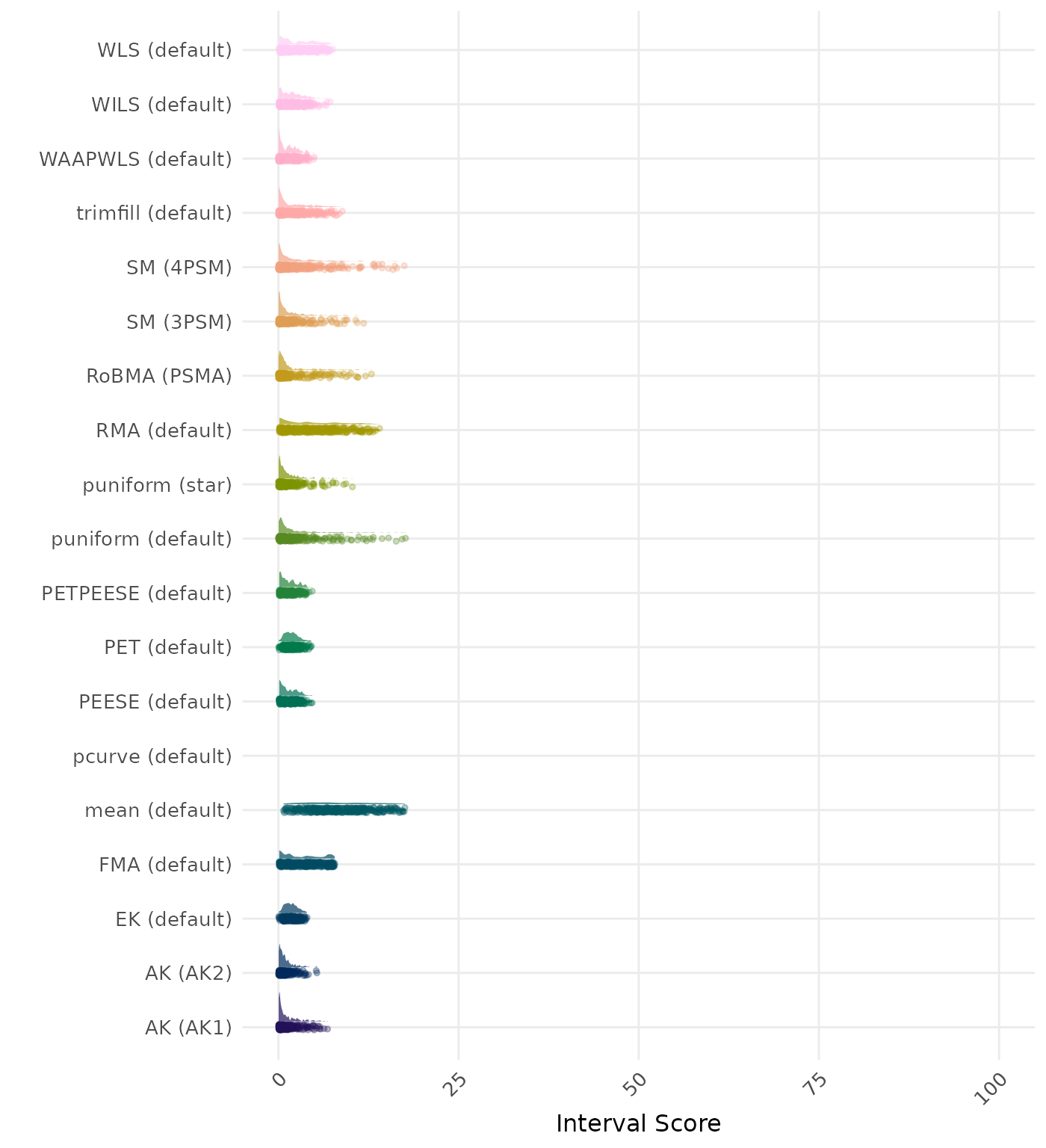

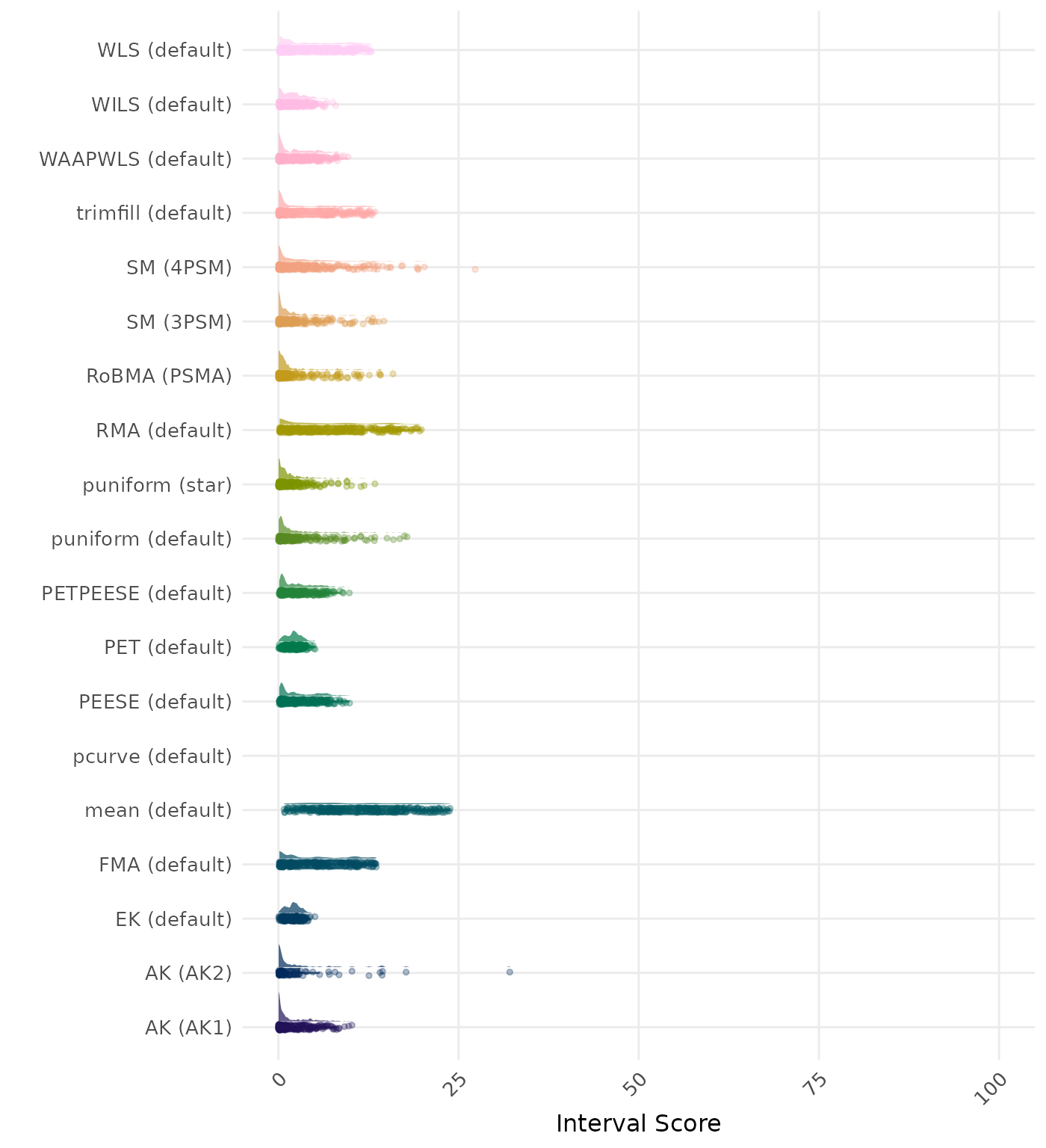

The interval score measures the accuracy of a confidence interval by combining its width and coverage. It penalizes intervals that are too wide or that fail to include the true value. A lower interval score indicates a better method.
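
A minimal sketch follows, assuming the report uses the standard interval score of Gneiting and Raftery (2007) for a central $(1-\alpha)$ interval, with $\alpha = 0.05$ for 95% CIs. Each interval pays its width plus a penalty of $2/\alpha$ times the distance by which it misses the true effect. The helper names are hypothetical, and averaging per-run scores within a condition is likewise an assumption.

```python
def interval_score(lower, upper, true_effect, alpha=0.05):
    """Interval score of one central (1 - alpha) interval; lower is better."""
    score = upper - lower  # every interval pays for its width
    if true_effect < lower:
        score += (2.0 / alpha) * (lower - true_effect)  # miss below the interval
    elif true_effect > upper:
        score += (2.0 / alpha) * (true_effect - upper)  # miss above the interval
    return score


def mean_interval_score(intervals, true_effect, alpha=0.05):
    """Average interval score across the simulation runs of one condition."""
    scores = [interval_score(lo, hi, true_effect, alpha) for lo, hi in intervals]
    return sum(scores) / len(scores)


print(interval_score(0.1, 0.5, 0.3))  # covers the true effect: score is the width
print(interval_score(0.4, 0.6, 0.3))  # misses by 0.1: width plus a penalty of 40 * 0.1
```

With a true effect of 0.3, the covering interval (0.1, 0.5) scores its width of 0.4. The narrower interval (0.4, 0.6) misses by 0.1 and scores 0.2 + 40 × 0.1 = 4.2, so narrowness alone does not earn a good score.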

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | AK (AK2) | 0.680 | 1 | AK (AK2) | 0.674 |
| 2 | RoBMA (PSMA) | 0.647 | 2 | RoBMA (PSMA) | 0.645 |
| 3 | puniform (star) | 0.643 | 3 | puniform (star) | 0.643 |
| 4 | SM (3PSM) | 0.635 | 4 | SM (3PSM) | 0.630 |
| 5 | SM (4PSM) | 0.634 | 5 | SM (4PSM) | 0.629 |
| 6 | WAAPWLS (default) | 0.604 | 6 | WAAPWLS (default) | 0.604 |
| 7 | AK (AK1) | 0.590 | 7 | AK (AK1) | 0.589 |
| 8 | puniform (default) | 0.549 | 8 | puniform (default) | 0.549 |
| 9 | trimfill (default) | 0.512 | 9 | PETPEESE (default) | 0.512 |
| 10 | PETPEESE (default) | 0.512 | 10 | trimfill (default) | 0.512 |
| 11 | EK (default) | 0.477 | 11 | EK (default) | 0.477 |
| 12 | PEESE (default) | 0.464 | 12 | PEESE (default) | 0.464 |
| 13 | PET (default) | 0.463 | 13 | PET (default) | 0.463 |
| 14 | WILS (default) | 0.415 | 14 | WILS (default) | 0.415 |
| 15 | WLS (default) | 0.397 | 15 | WLS (default) | 0.397 |
| 16 | RMA (default) | 0.359 | 16 | RMA (default) | 0.359 |
| 17 | FMA (default) | 0.307 | 17 | FMA (default) | 0.307 |
| 18 | mean (default) | 0.179 | 18 | mean (default) | 0.179 |
| 19 | pcurve (default) | NaN | 19 | pcurve (default) | NaN |

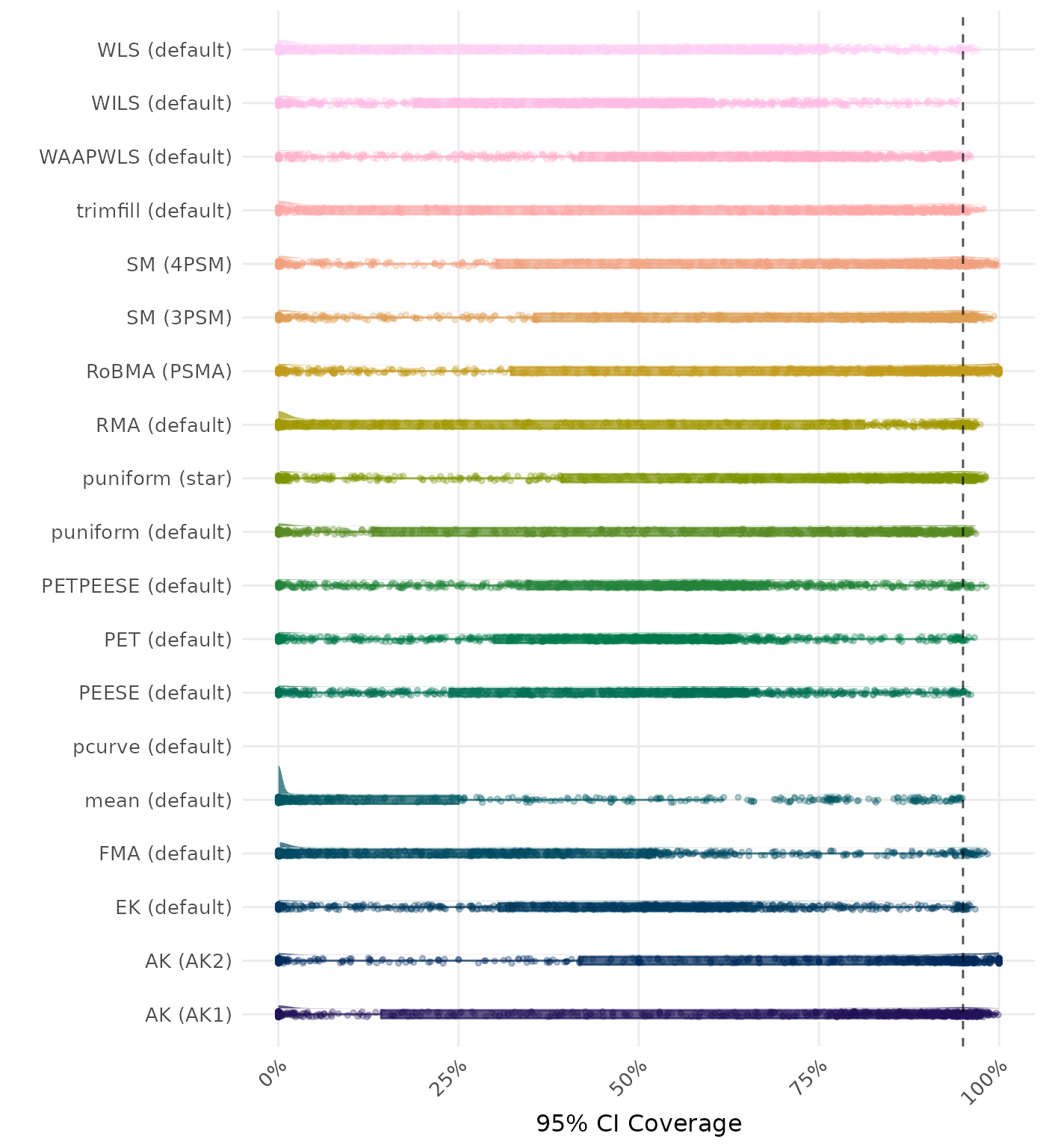

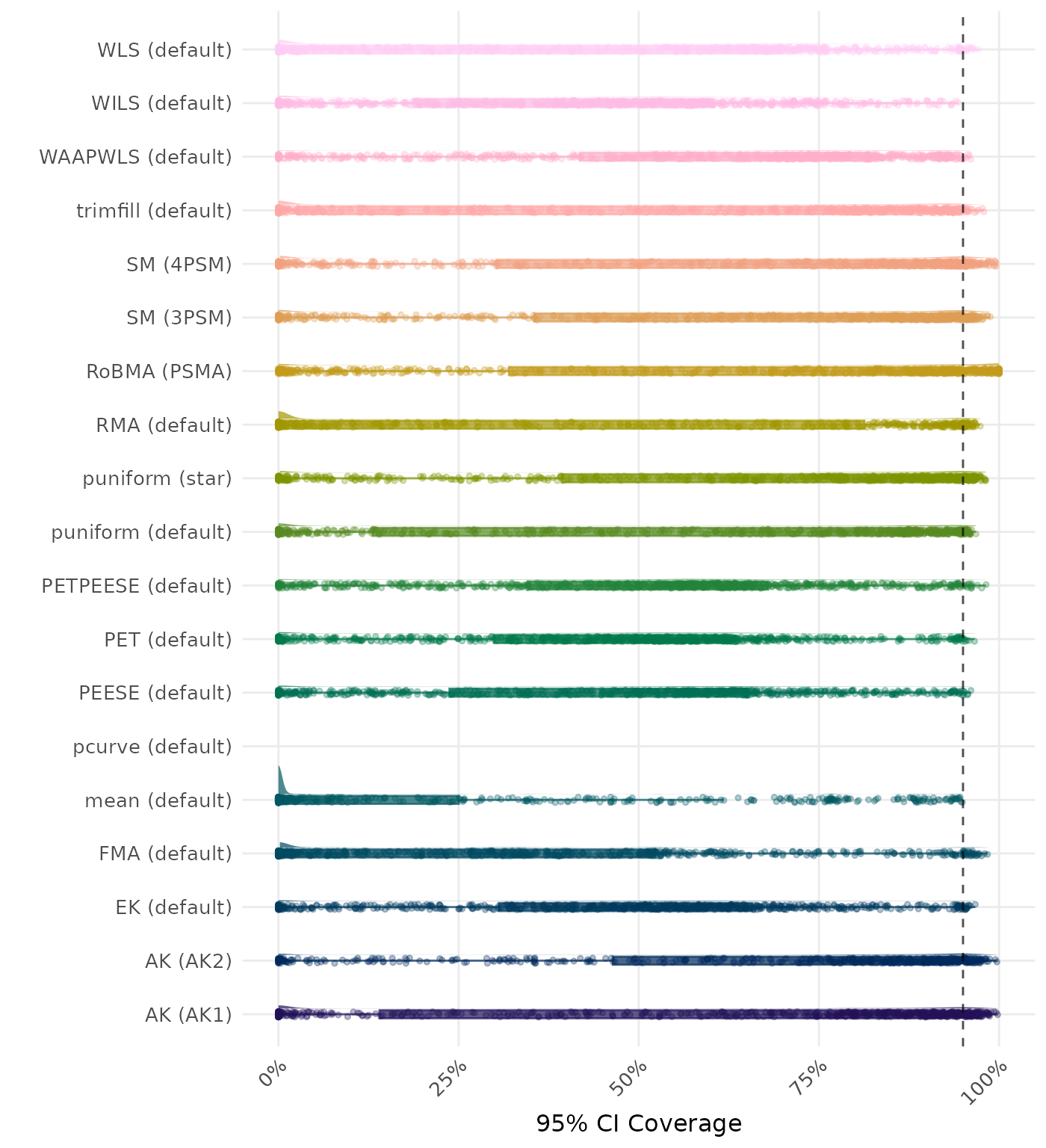

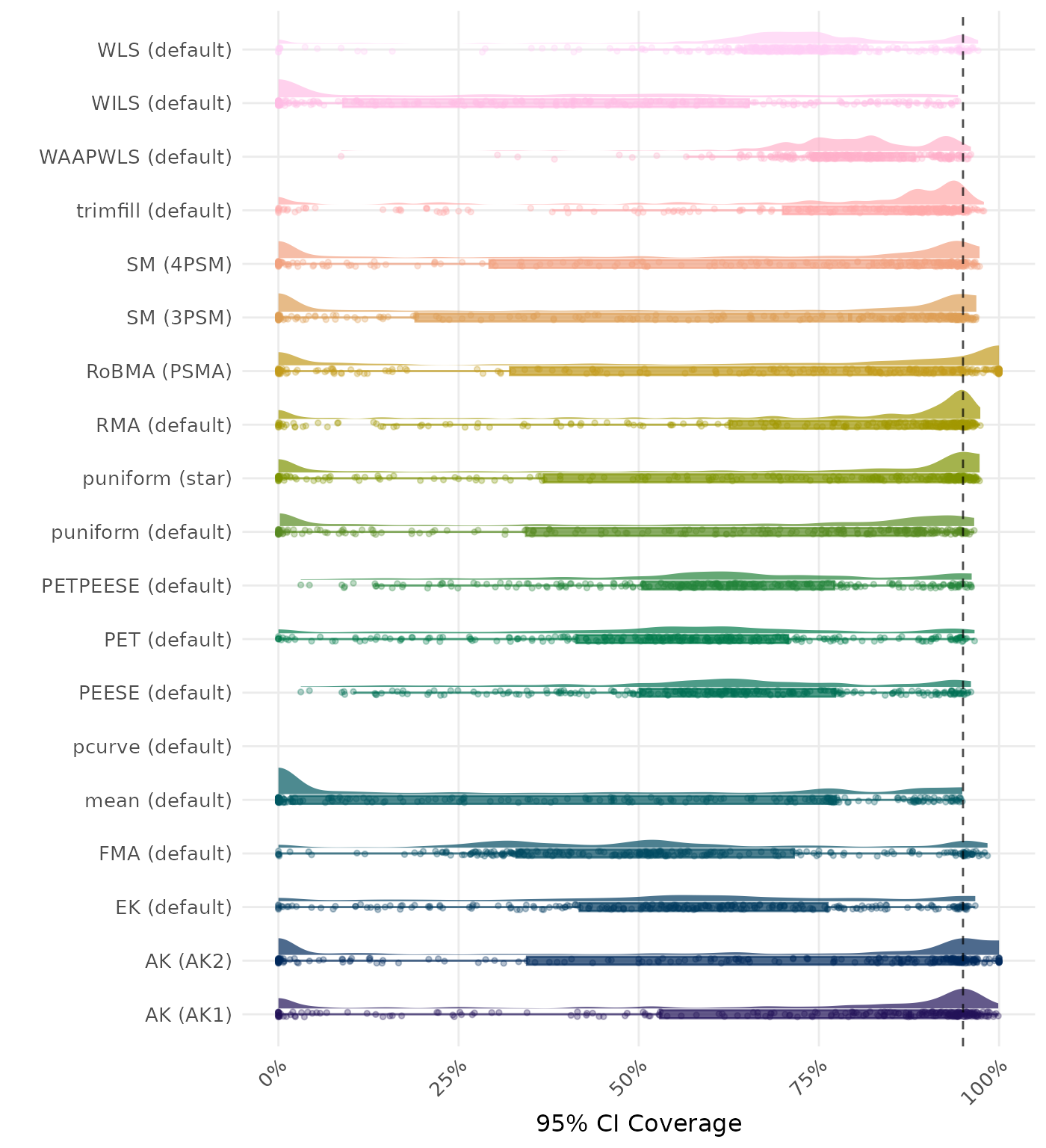

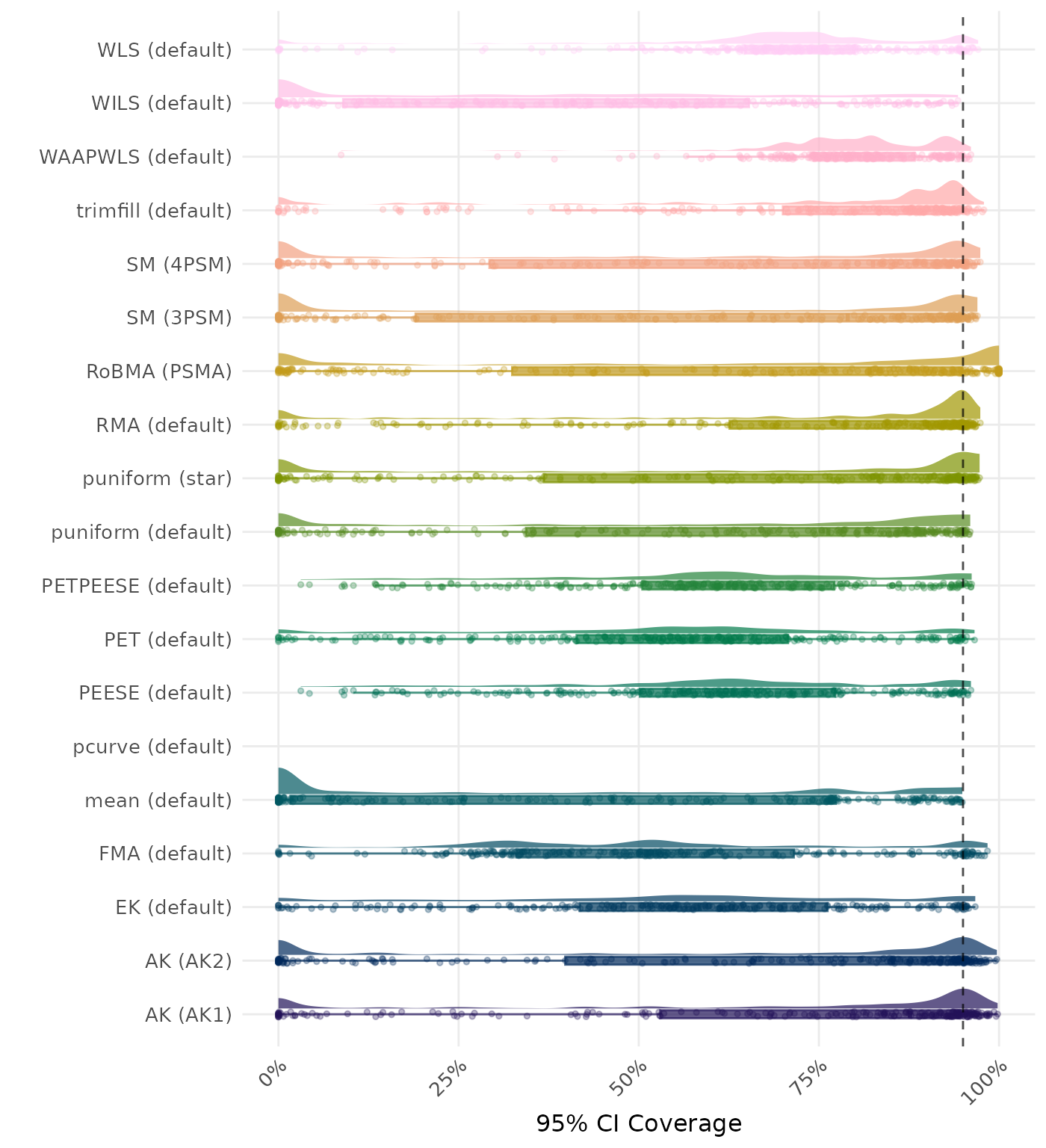

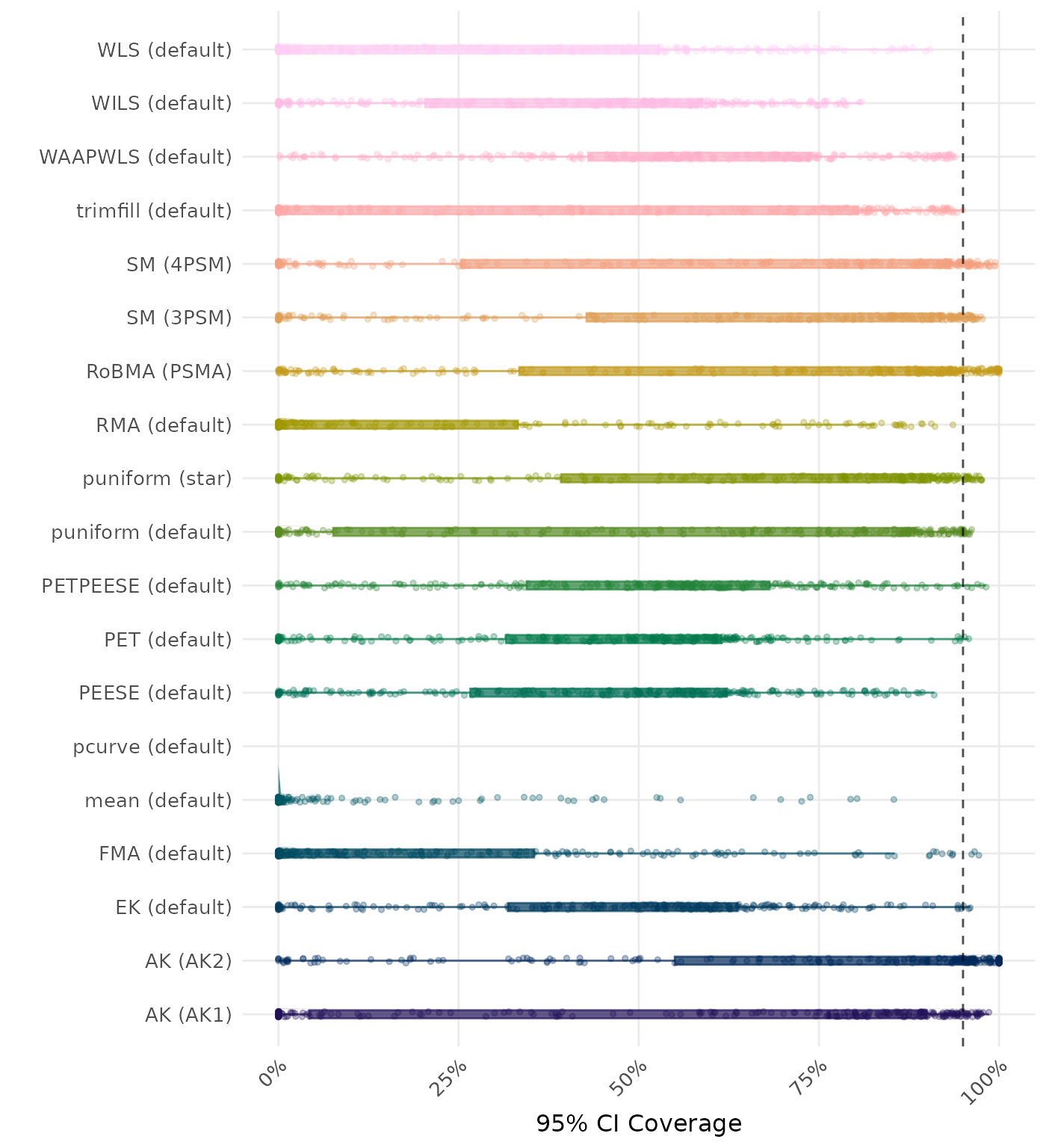

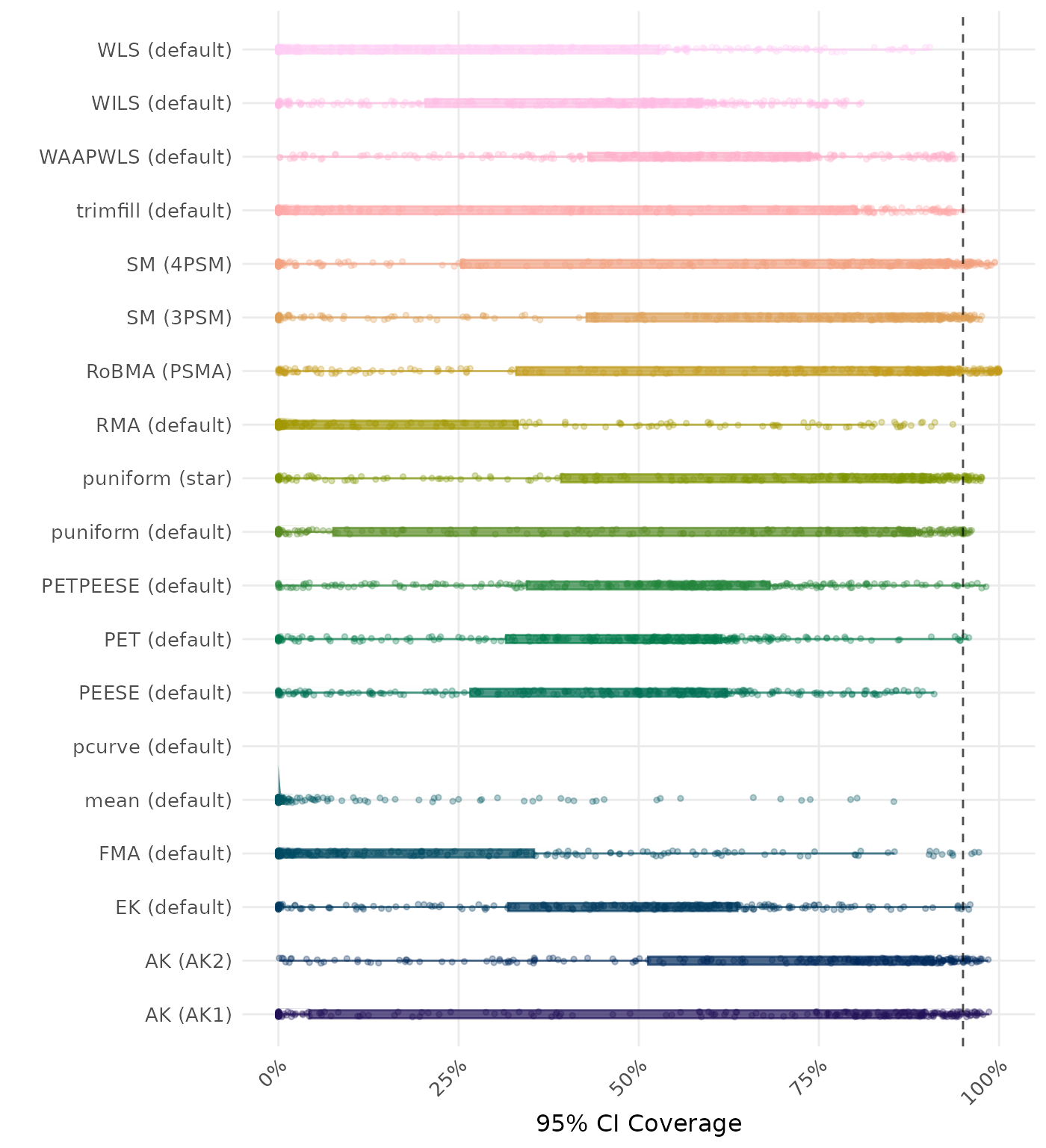

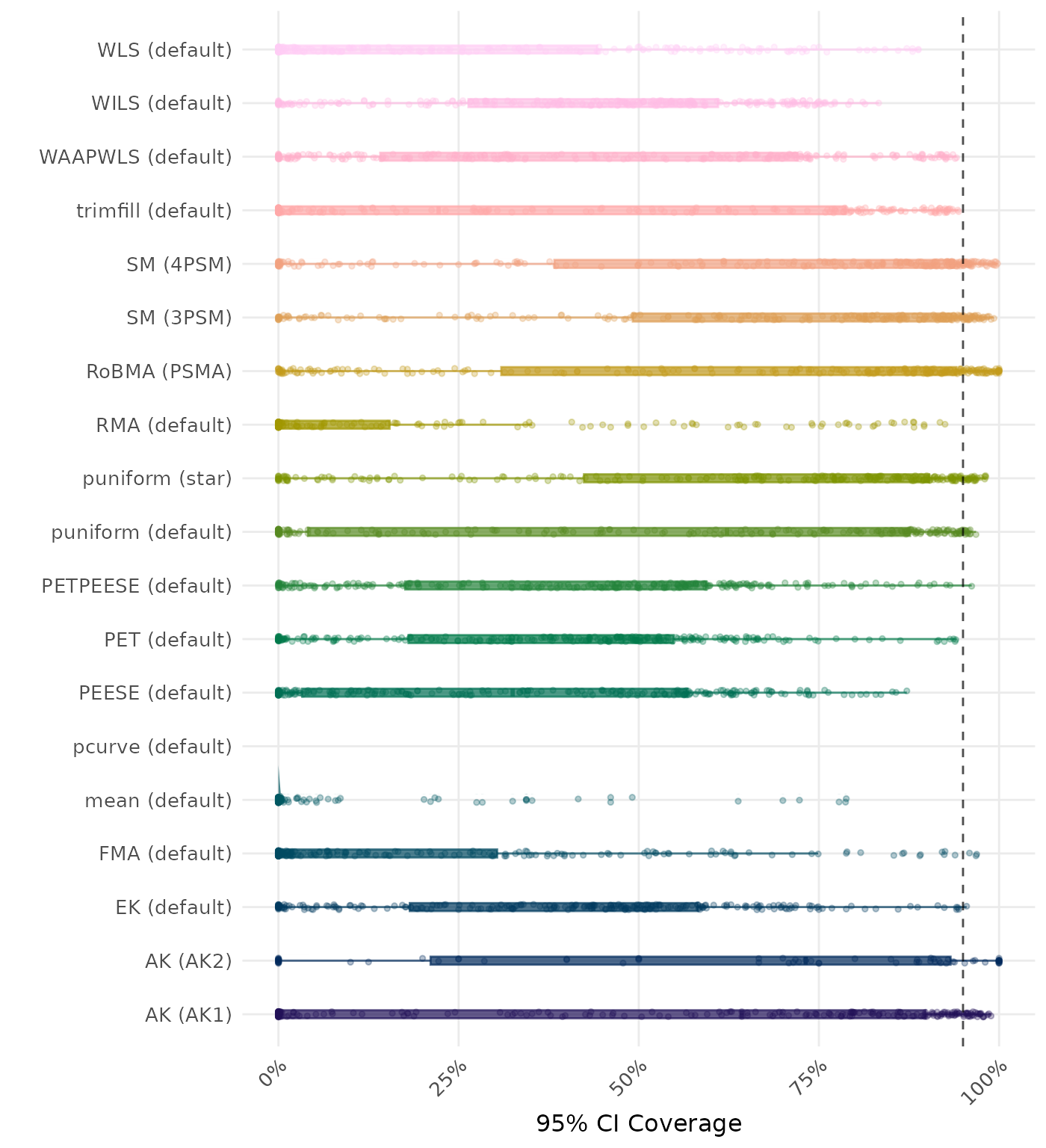

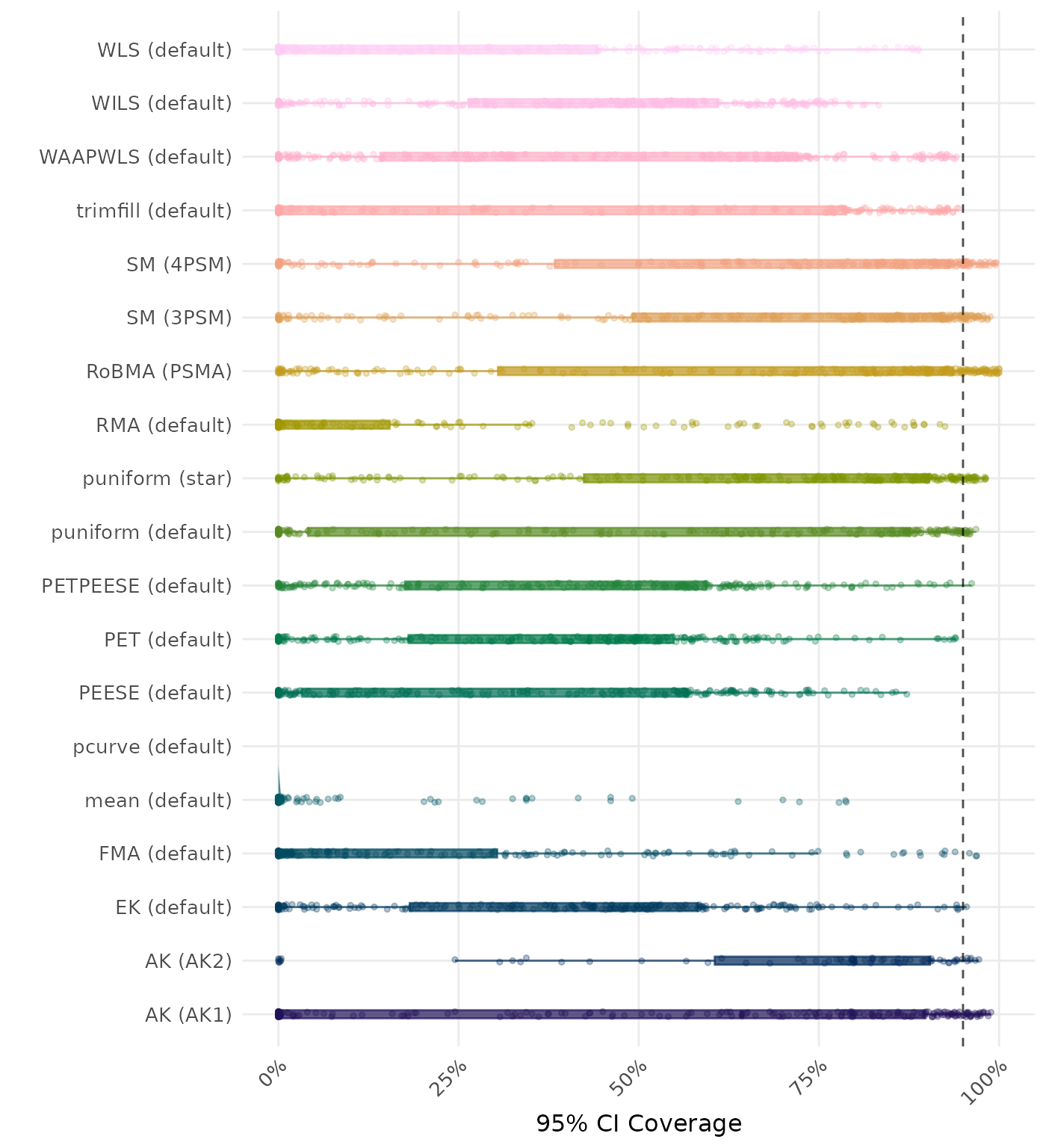

95% CI coverage is the proportion of simulation runs in which the 95% confidence interval contained the true effect. Ideally, this value should be close to the nominal level of 95%.

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | FMA (default) | 0.091 | 1 | FMA (default) | 0.091 |
| 2 | WLS (default) | 0.133 | 2 | WLS (default) | 0.133 |
| 3 | mean (default) | 0.148 | 3 | mean (default) | 0.148 |
| 4 | PEESE (default) | 0.153 | 4 | PEESE (default) | 0.153 |
| 5 | trimfill (default) | 0.160 | 5 | trimfill (default) | 0.160 |
| 6 | WILS (default) | 0.161 | 6 | WILS (default) | 0.161 |
| 7 | RMA (default) | 0.163 | 7 | RMA (default) | 0.163 |
| 8 | WAAPWLS (default) | 0.189 | 8 | WAAPWLS (default) | 0.189 |
| 9 | PETPEESE (default) | 0.190 | 9 | PETPEESE (default) | 0.190 |
| 10 | PET (default) | 0.244 | 10 | PET (default) | 0.244 |
| 11 | EK (default) | 0.264 | 11 | EK (default) | 0.264 |
| 12 | RoBMA (PSMA) | 0.279 | 12 | RoBMA (PSMA) | 0.277 |
| 13 | puniform (star) | 0.341 | 13 | puniform (star) | 0.341 |
| 14 | puniform (default) | 0.540 | 14 | puniform (default) | 0.494 |
| 15 | SM (3PSM) | 0.615 | 15 | SM (3PSM) | 0.557 |
| 16 | SM (4PSM) | 1.079 | 16 | SM (4PSM) | 0.985 |
| 17 | AK (AK1) | 1.787 | 17 | AK (AK1) | 1.711 |
| 18 | AK (AK2) | 2.464 | 18 | AK (AK2) | 2.604 |
| 19 | pcurve (default) | NaN | 19 | pcurve (default) | NaN |

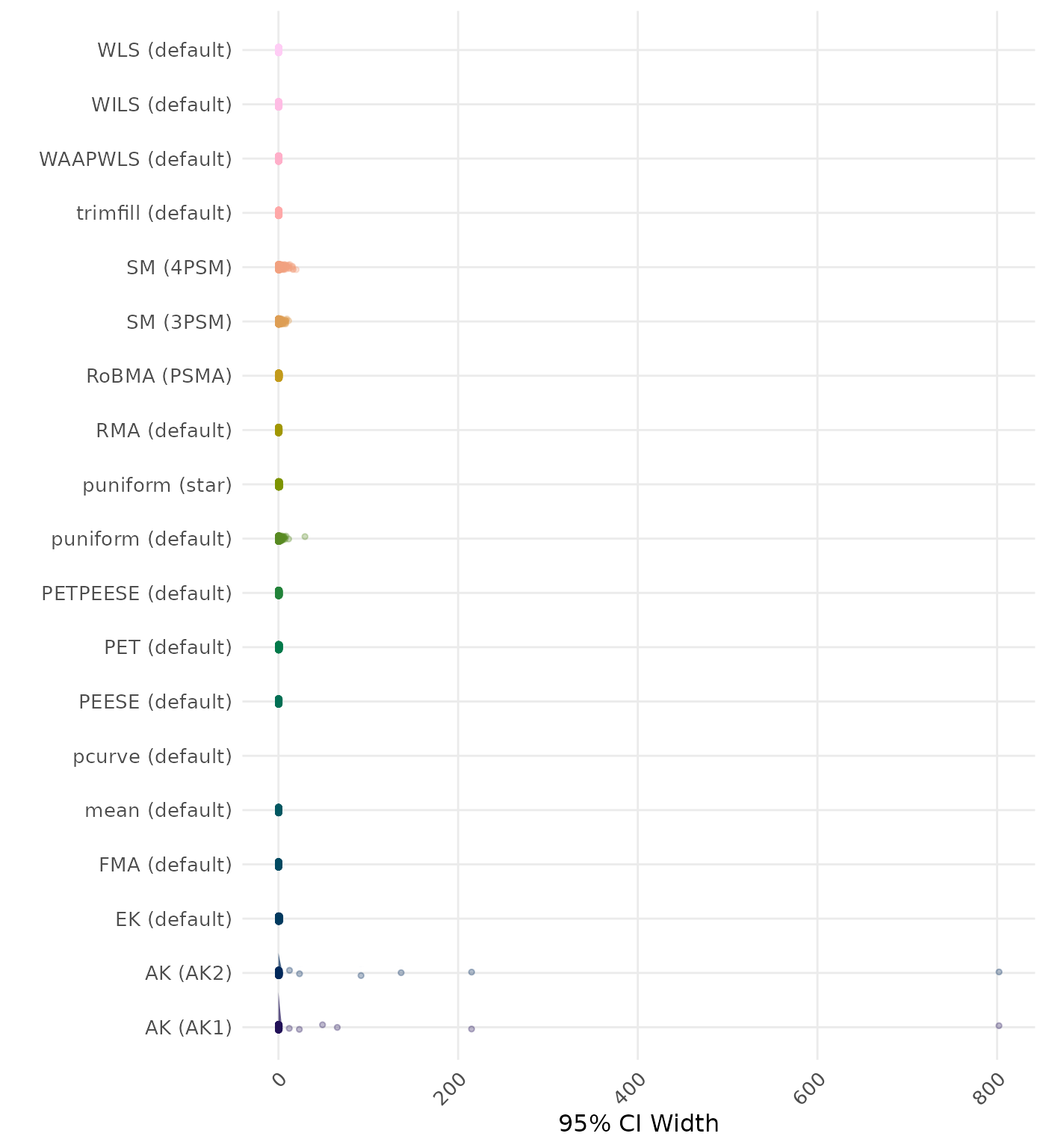

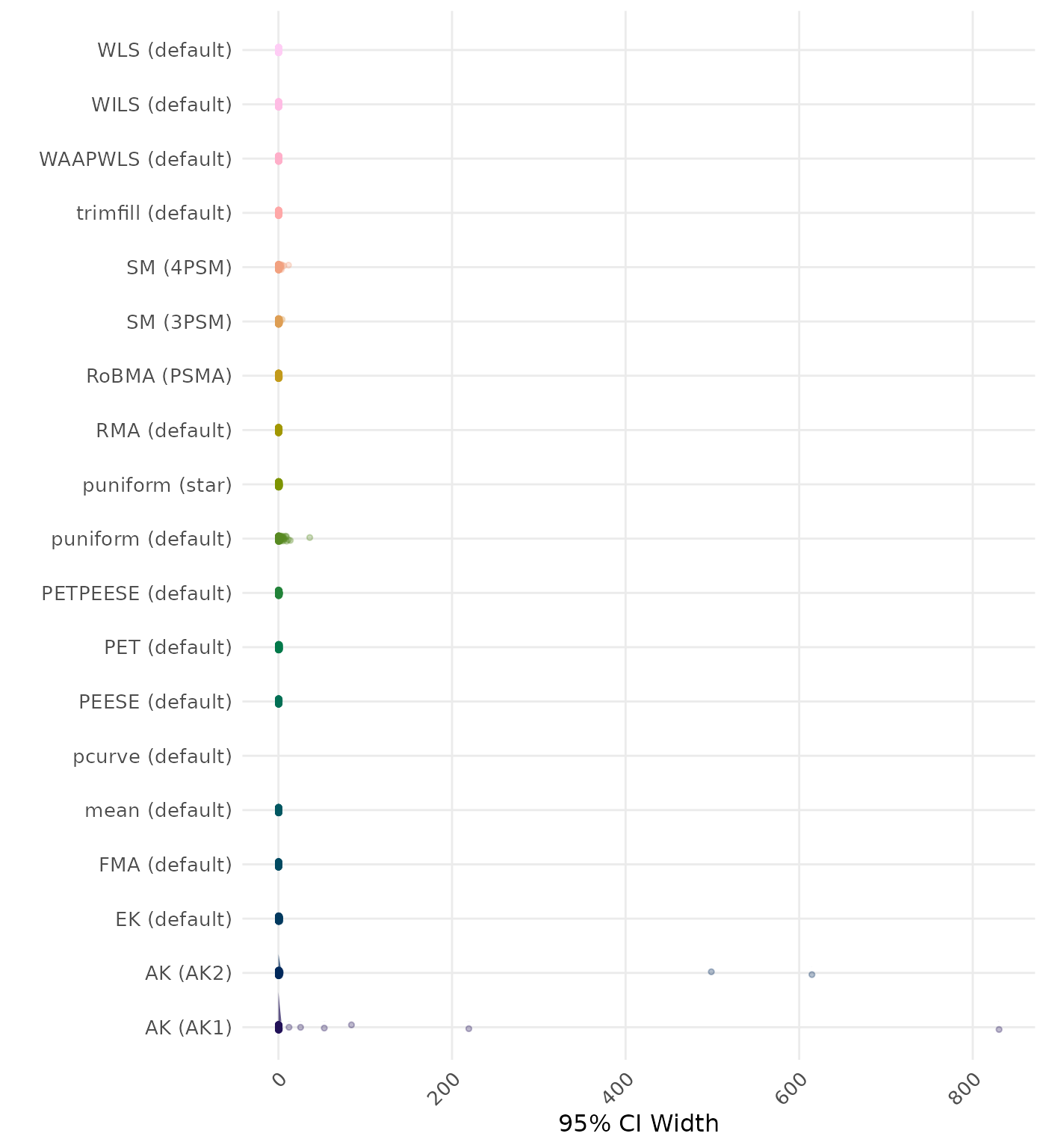

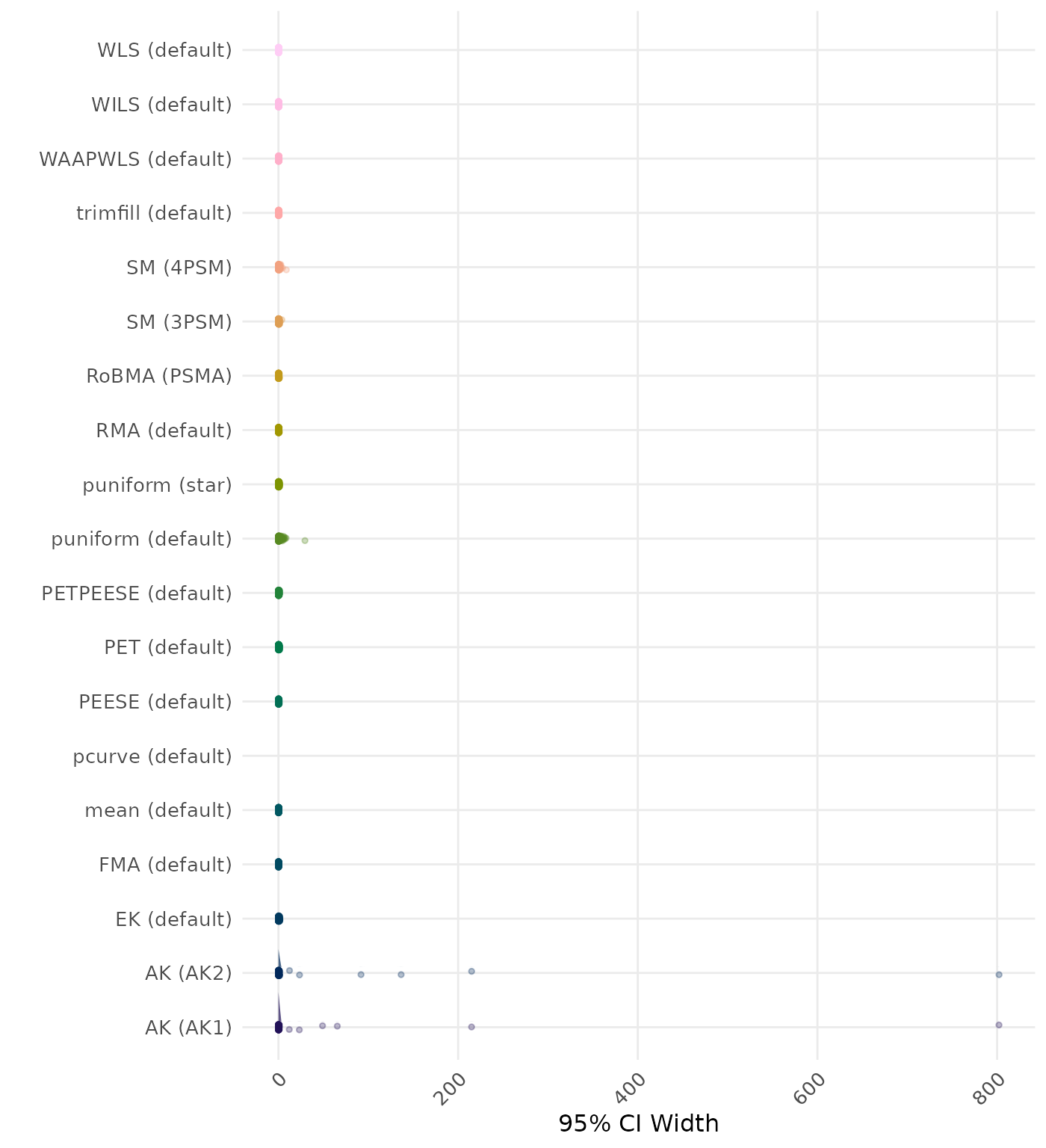

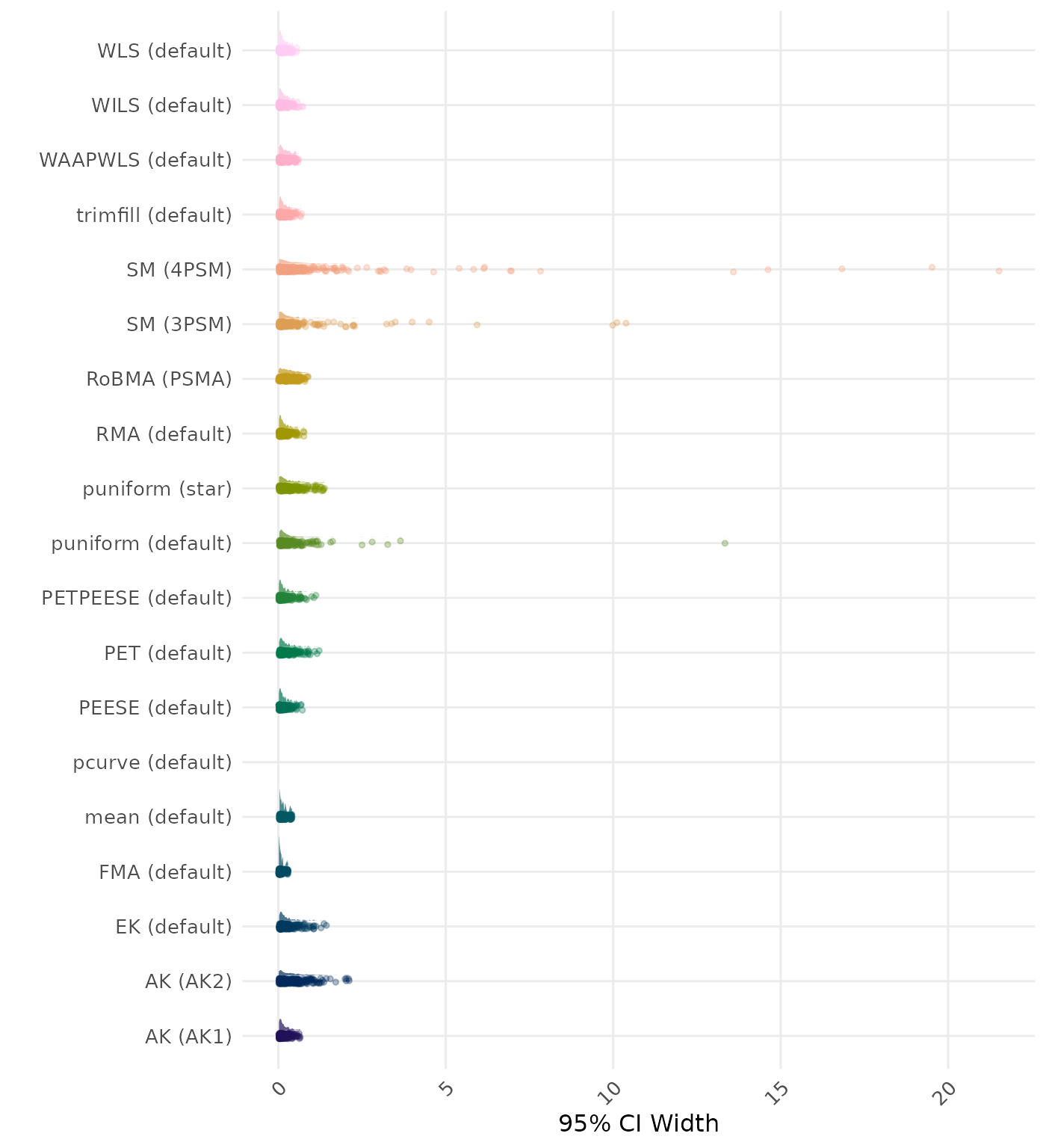

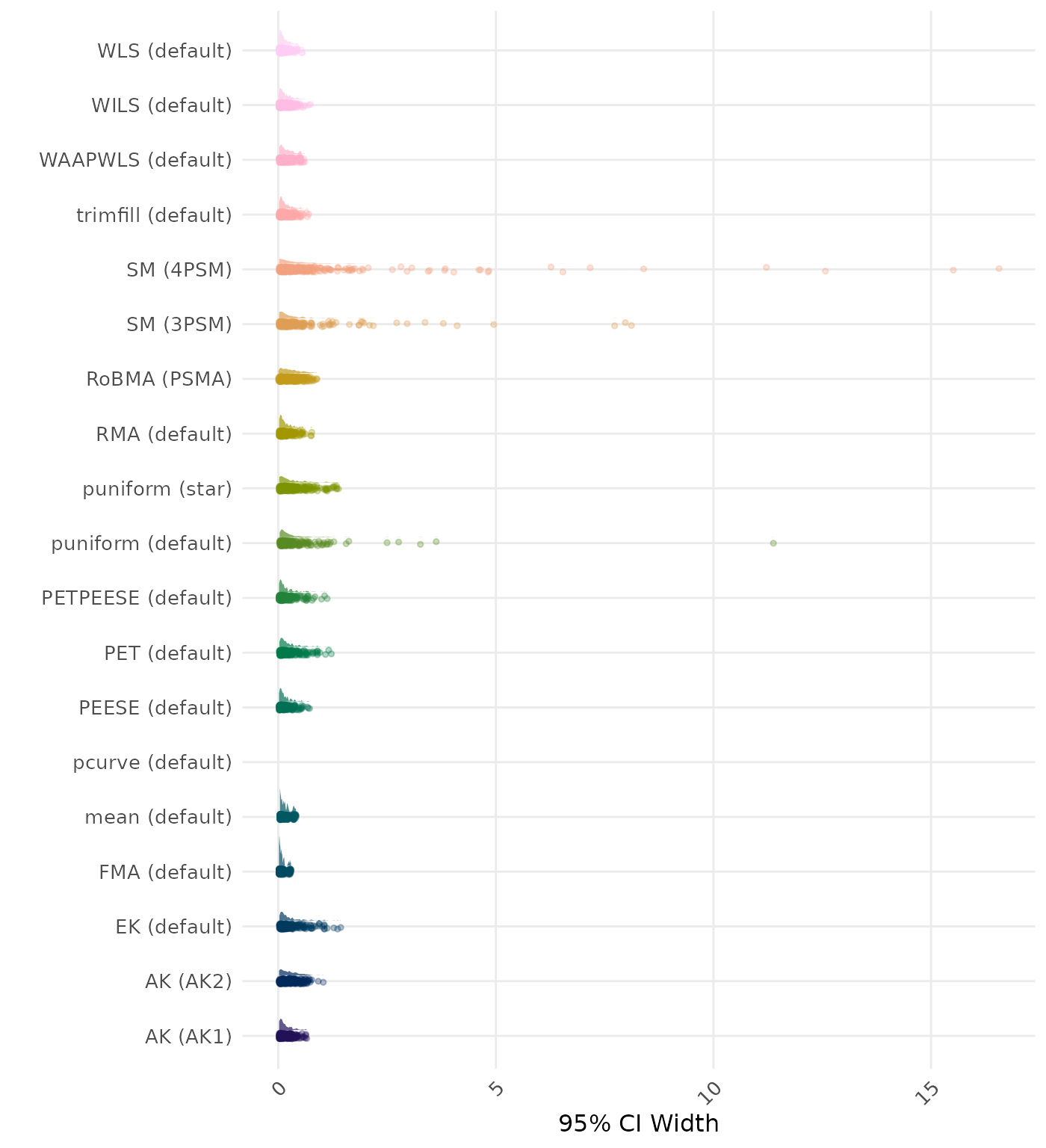

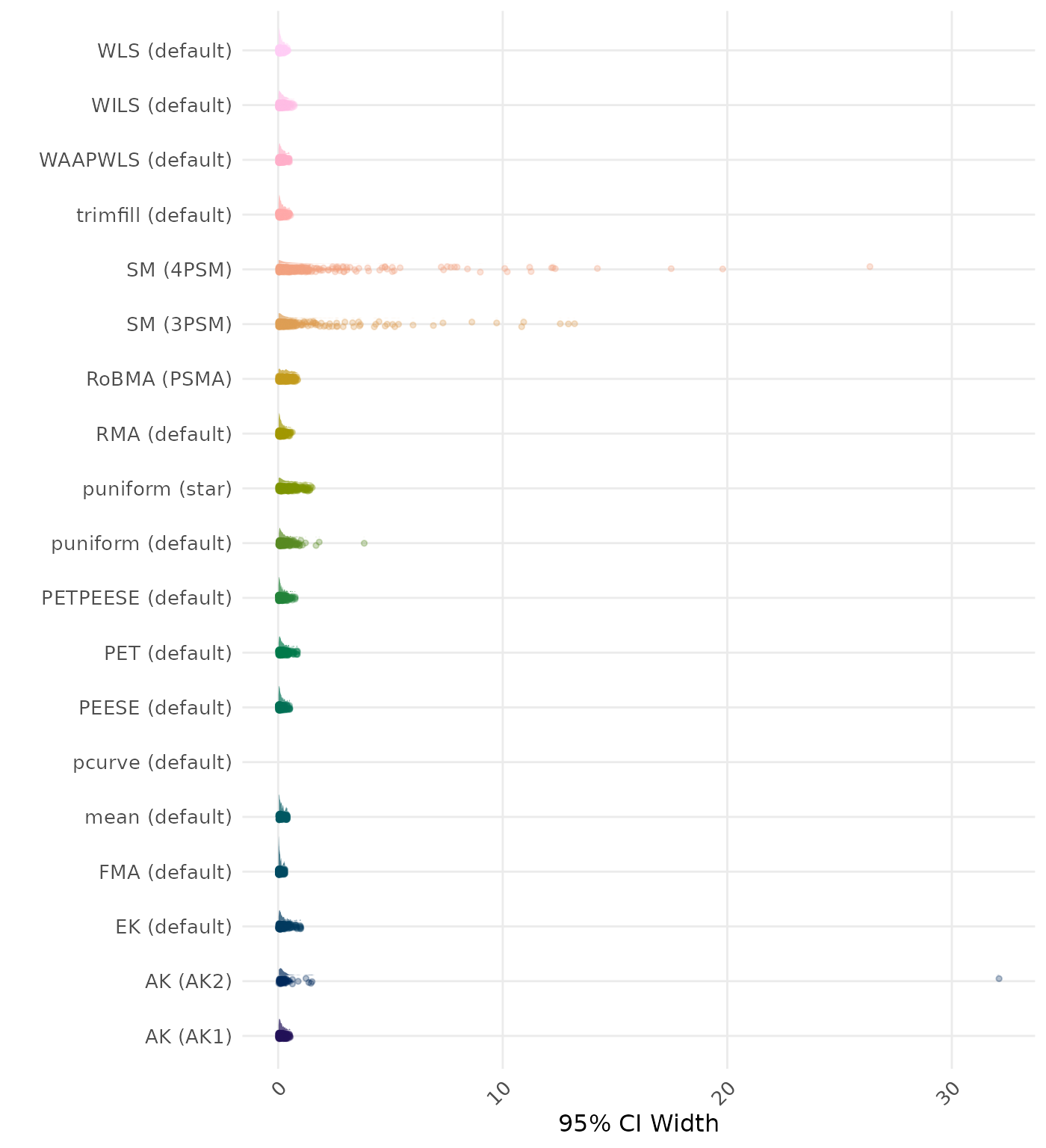

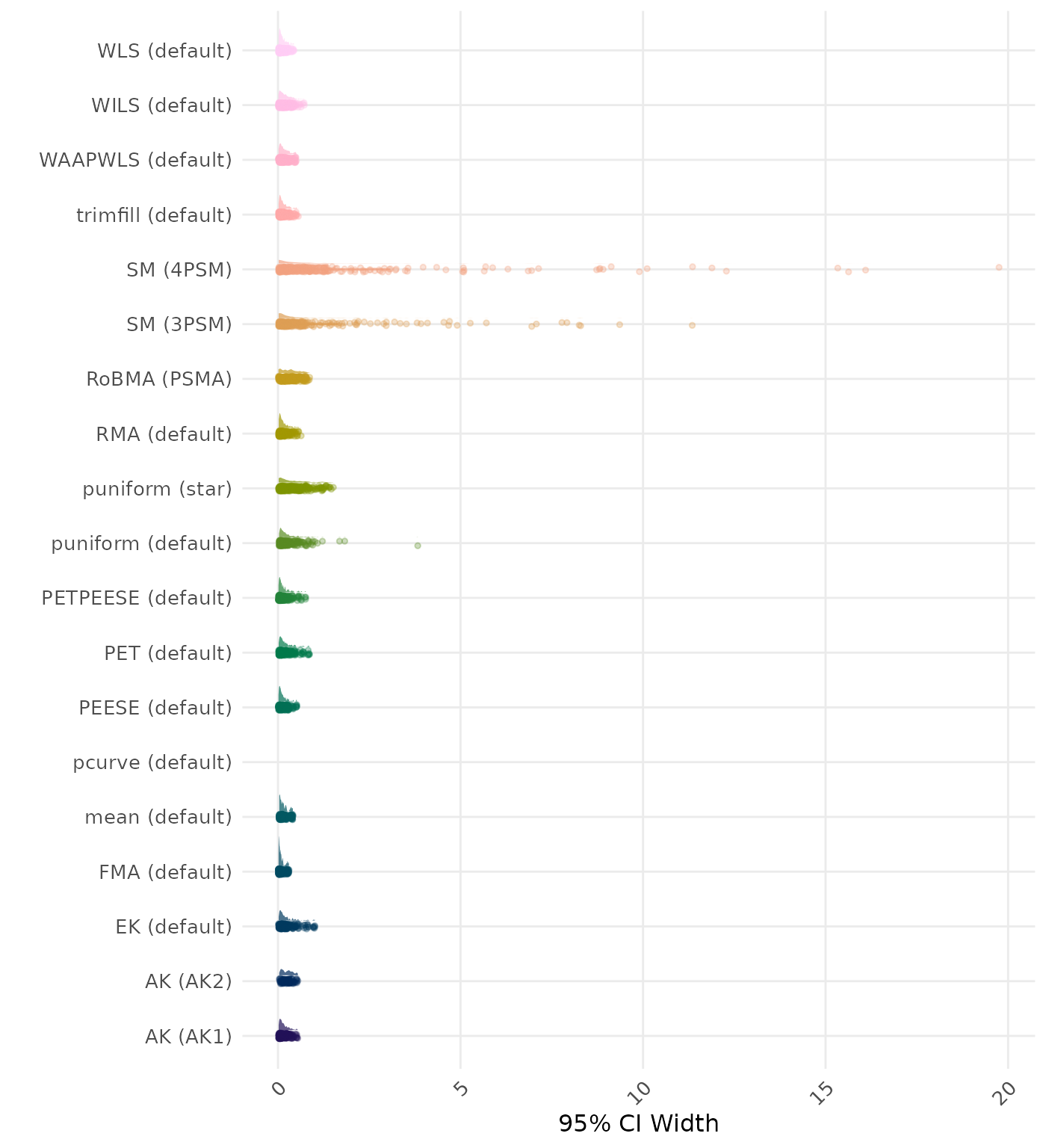

95% CI width is the average length of the 95% confidence interval for the true effect. A lower average 95% CI length indicates a better method.
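
Coverage and width can be computed from the same set of intervals. The sketch below assumes a list of `(lower, upper)` pairs from repeated runs of one condition and counts an interval as covering when the true effect lies on or between its bounds. The helper name and the inclusive boundary rule are assumptions for illustration.

```python
def coverage_and_width(intervals, true_effect):
    """Return (coverage, mean width) for a list of (lower, upper) intervals."""
    # An interval covers when the true effect lies within its bounds (inclusive here).
    hits = sum(1 for lower, upper in intervals if lower <= true_effect <= upper)
    widths = [upper - lower for lower, upper in intervals]
    return hits / len(intervals), sum(widths) / len(widths)


cis = [(0.0, 0.4), (0.1, 0.3), (0.25, 0.6), (-0.2, 0.1)]
coverage, width = coverage_and_width(cis, true_effect=0.2)
print(coverage, width)
```

Here two of the four intervals contain 0.2, so coverage is 0.5. The mean width is (0.4 + 0.2 + 0.35 + 0.3) / 4 = 0.3125. A narrow average width is only reassuring when coverage stays close to the nominal 95%.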

| Rank | Method | Log Value | Rank | Method | Log Value |
|---|---|---|---|---|---|
| 1 | RoBMA (PSMA) | 2.323 | 1 | RoBMA (PSMA) | 2.252 |
| 2 | puniform (default) | 1.987 | 2 | puniform (default) | 1.979 |
| 3 | AK (AK2) | 1.351 | 3 | AK (AK2) | 1.165 |
| 4 | PETPEESE (default) | 1.091 | 4 | PETPEESE (default) | 1.091 |
| 5 | AK (AK1) | 1.030 | 5 | AK (AK1) | 1.027 |
| 6 | PET (default) | 0.961 | 6 | PET (default) | 0.961 |
| 7 | EK (default) | 0.960 | 7 | EK (default) | 0.960 |
| 8 | SM (3PSM) | 0.909 | 8 | SM (3PSM) | 0.895 |
| 9 | WAAPWLS (default) | 0.821 | 9 | WAAPWLS (default) | 0.821 |
| 10 | puniform (star) | 0.798 | 10 | puniform (star) | 0.798 |
| 11 | trimfill (default) | 0.793 | 11 | trimfill (default) | 0.793 |
| 12 | RMA (default) | 0.764 | 12 | RMA (default) | 0.764 |
| 13 | PEESE (default) | 0.627 | 13 | PEESE (default) | 0.627 |
| 14 | WLS (default) | 0.621 | 14 | WLS (default) | 0.621 |
| 15 | WILS (default) | 0.573 | 15 | WILS (default) | 0.573 |
| 16 | FMA (default) | 0.385 | 16 | FMA (default) | 0.385 |
| 17 | SM (4PSM) | 0.368 | 17 | SM (4PSM) | 0.381 |
| 18 | mean (default) | 0.351 | 18 | mean (default) | 0.351 |
| 19 | pcurve (default) | NaN | 19 | pcurve (default) | NaN |

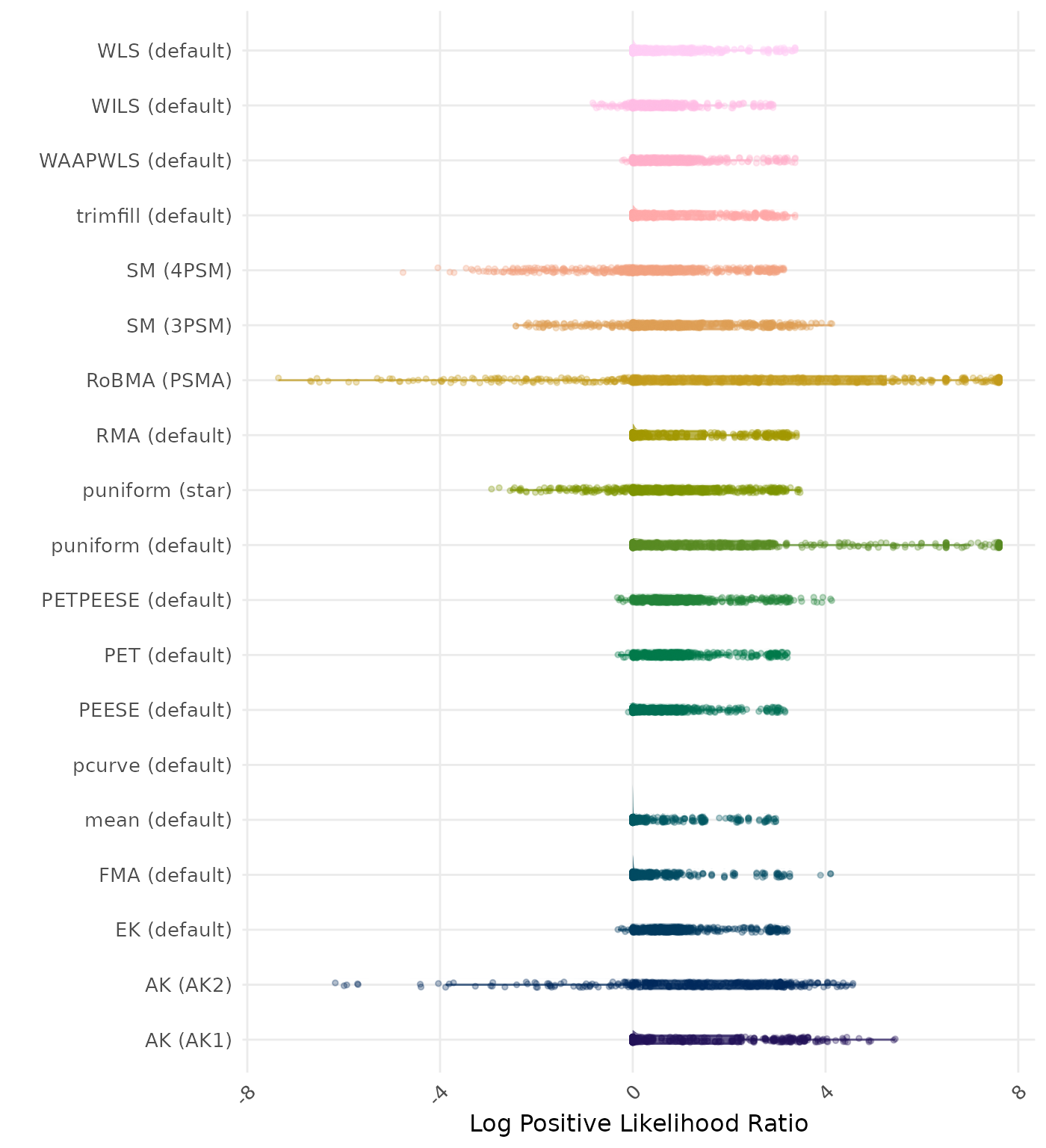

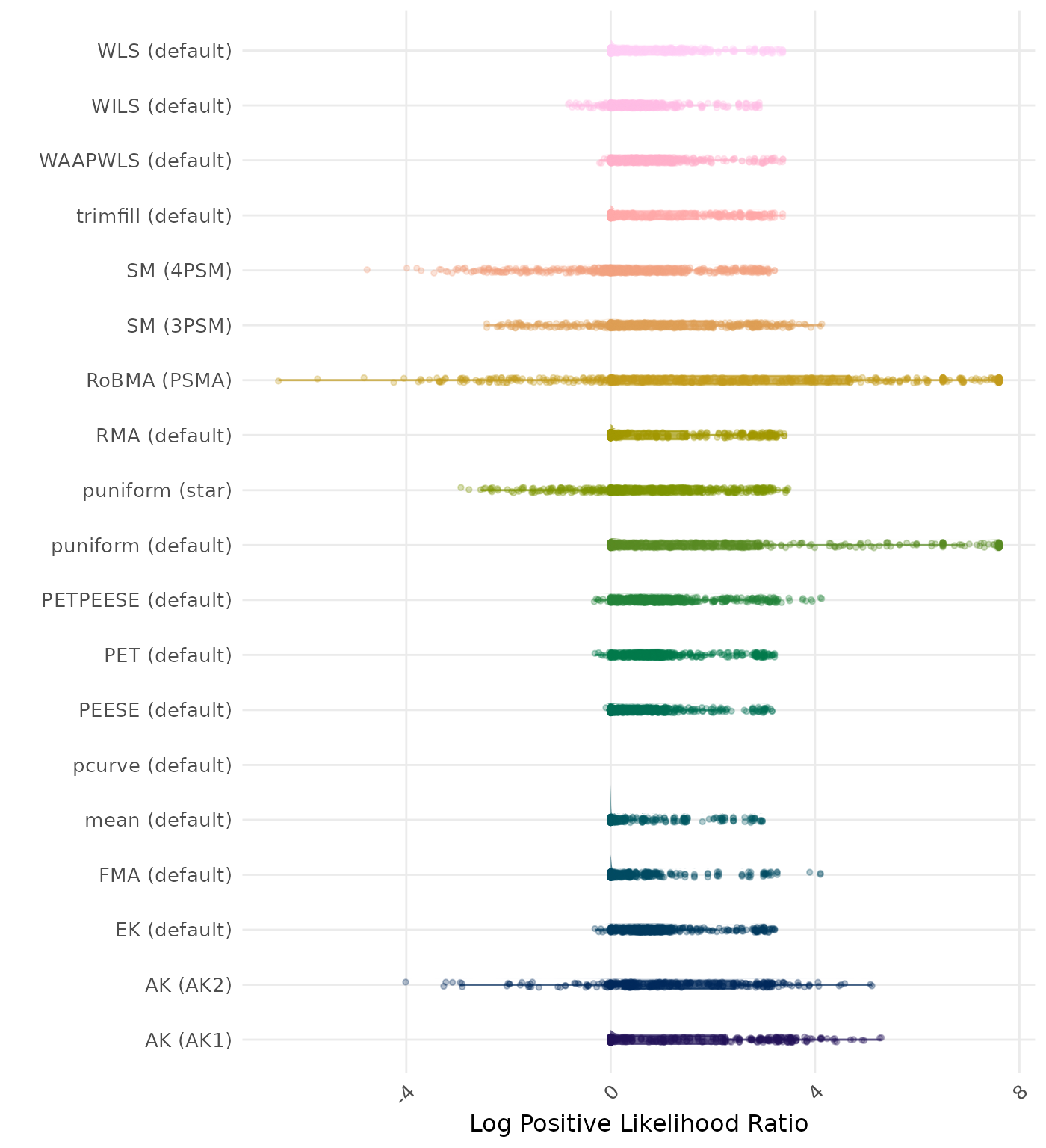

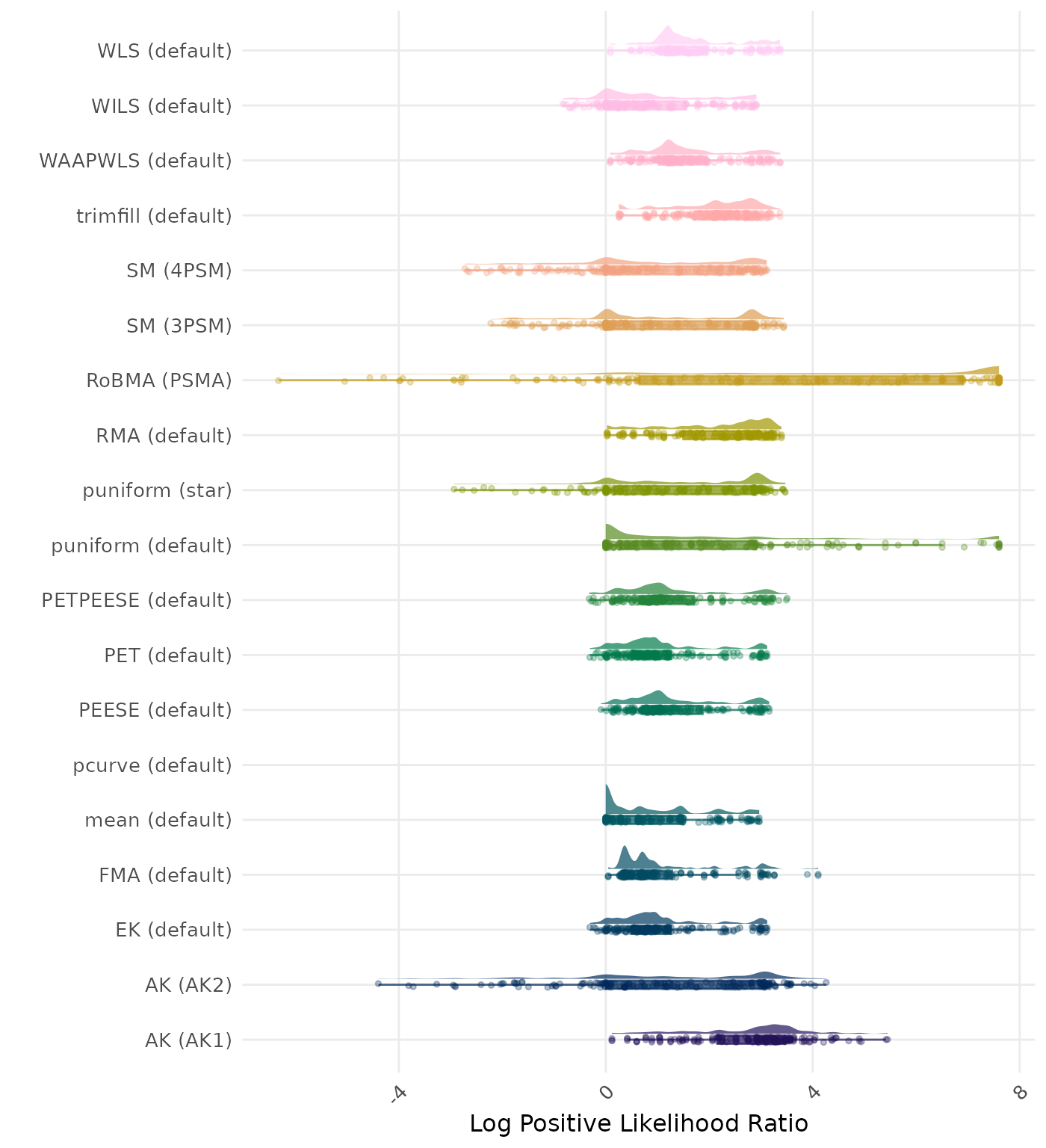

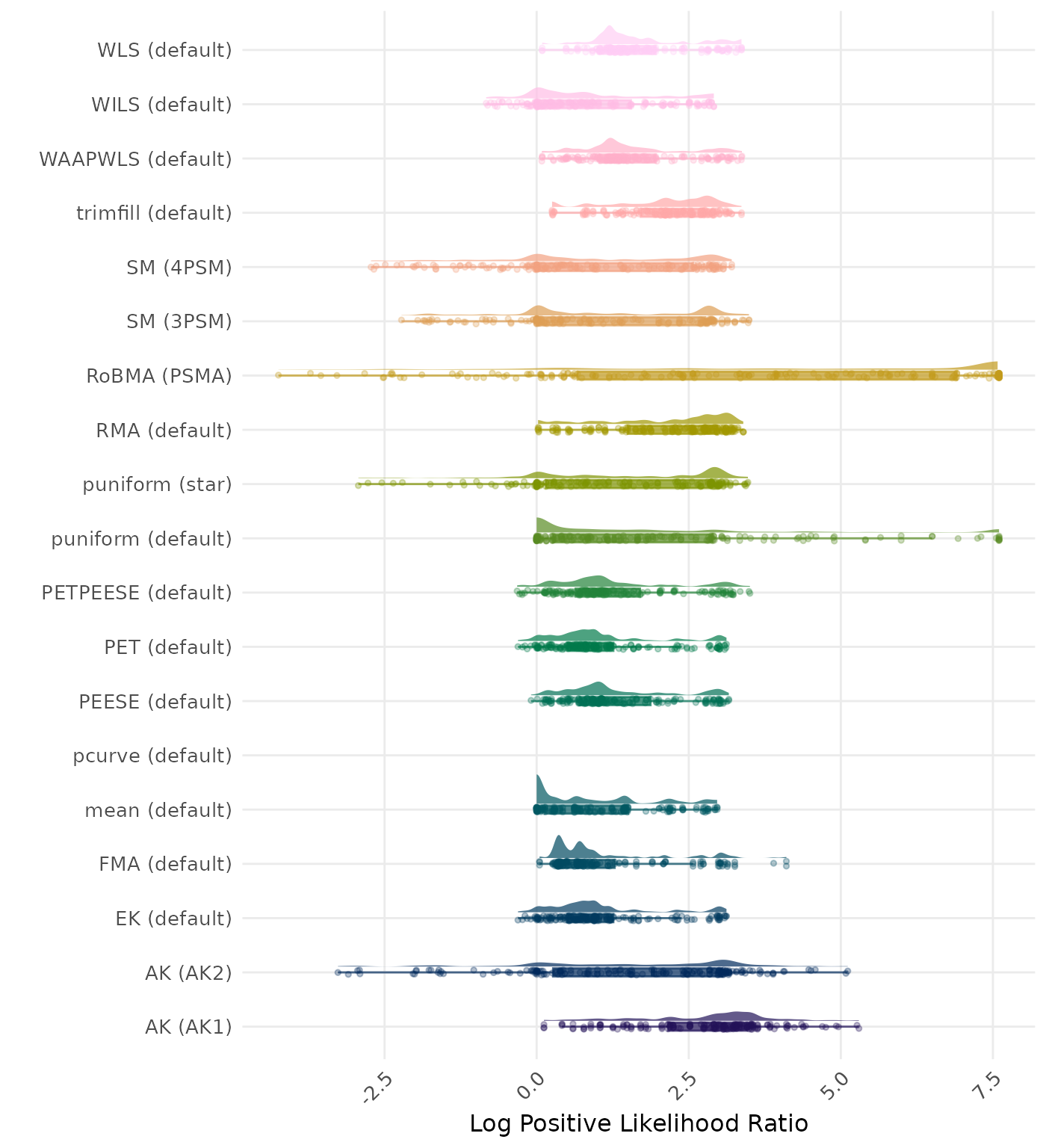

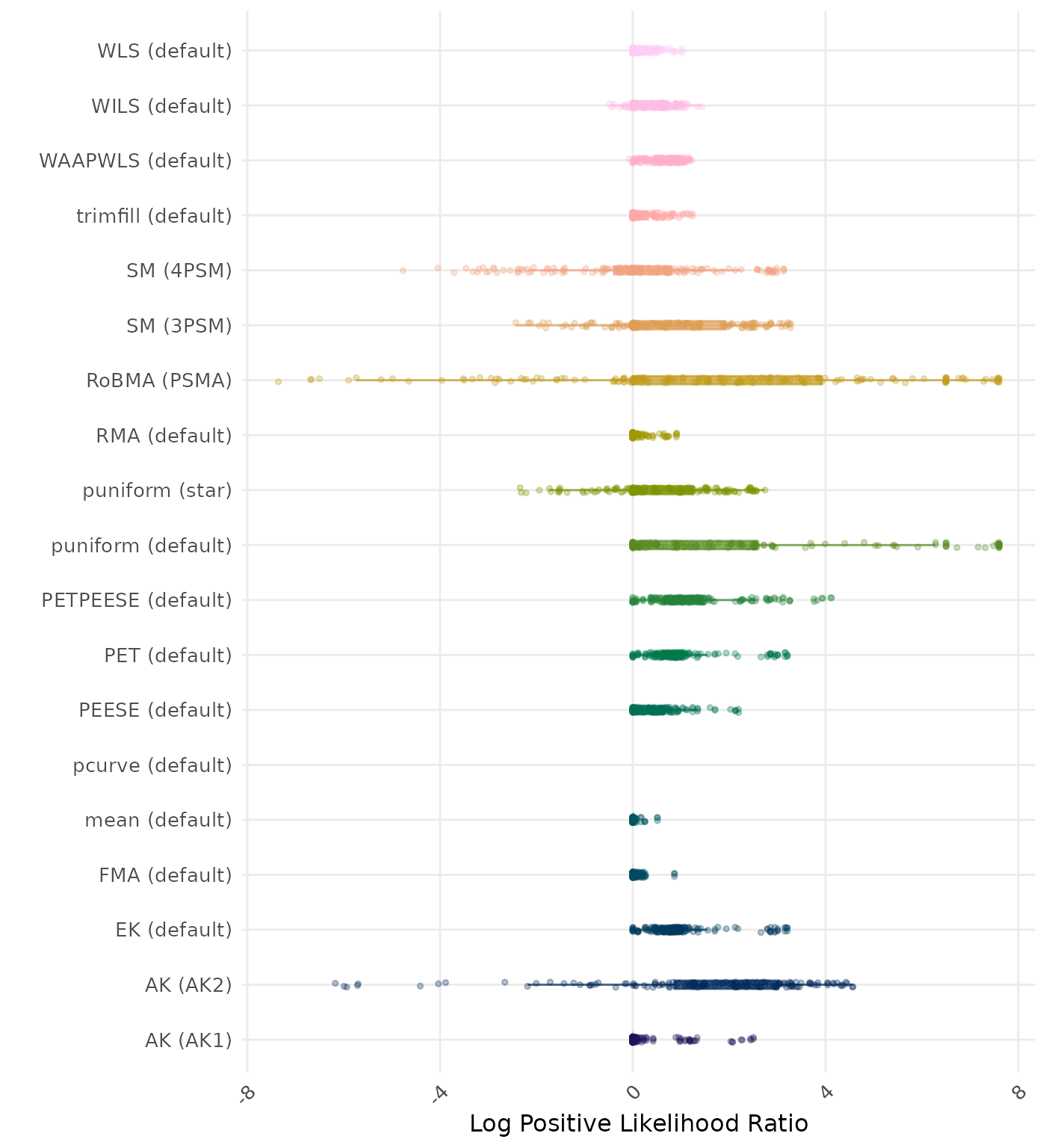

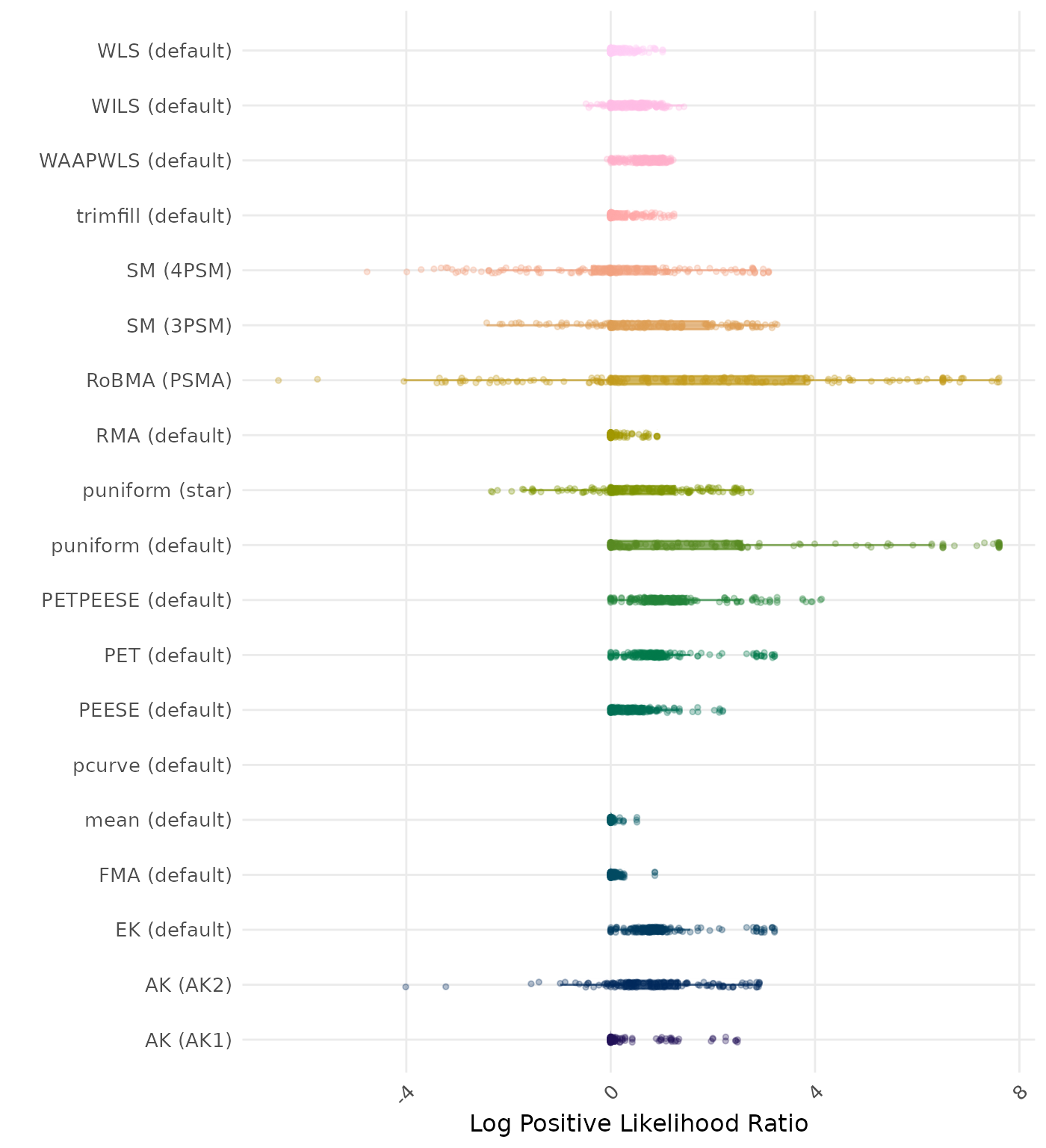

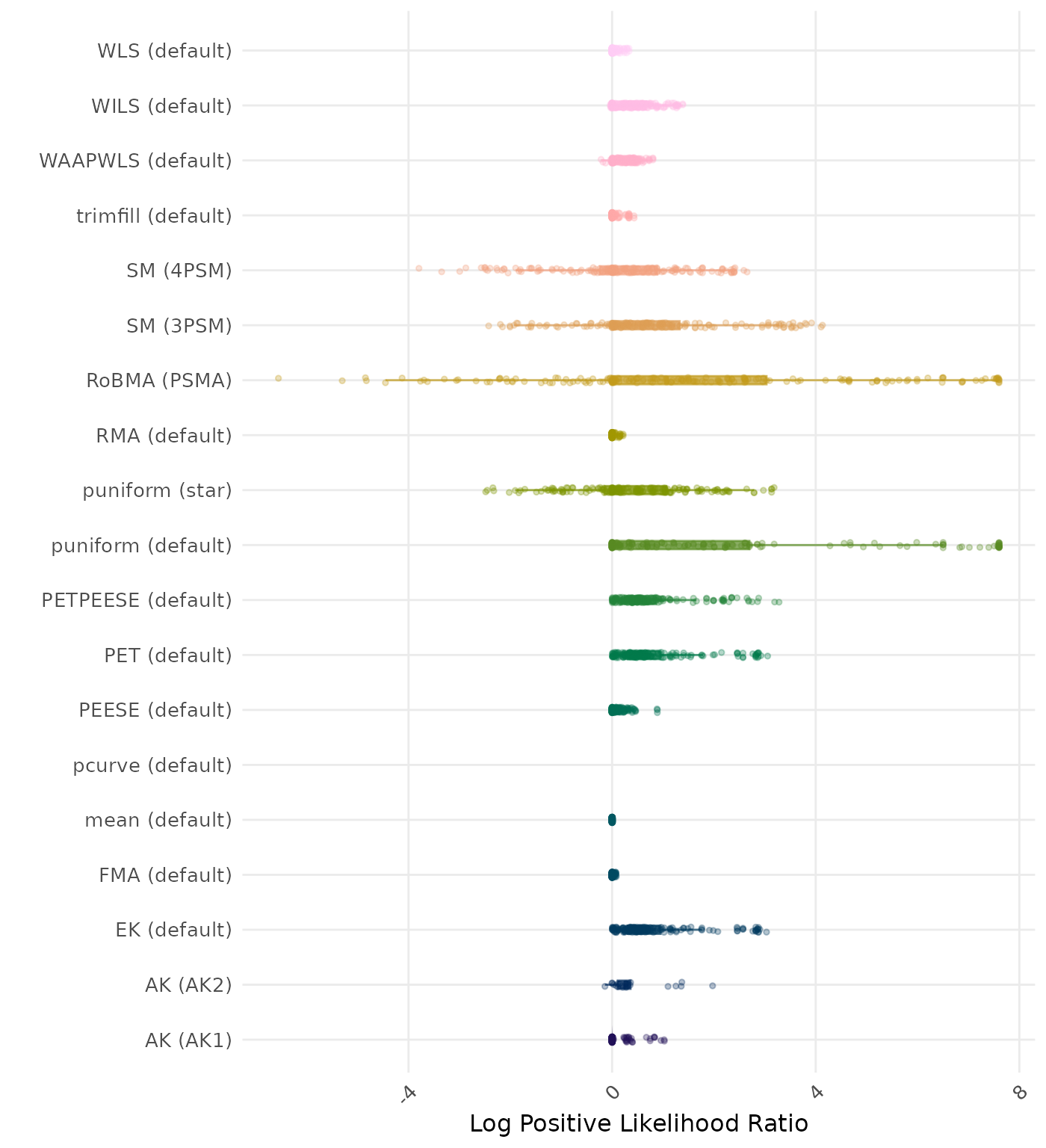

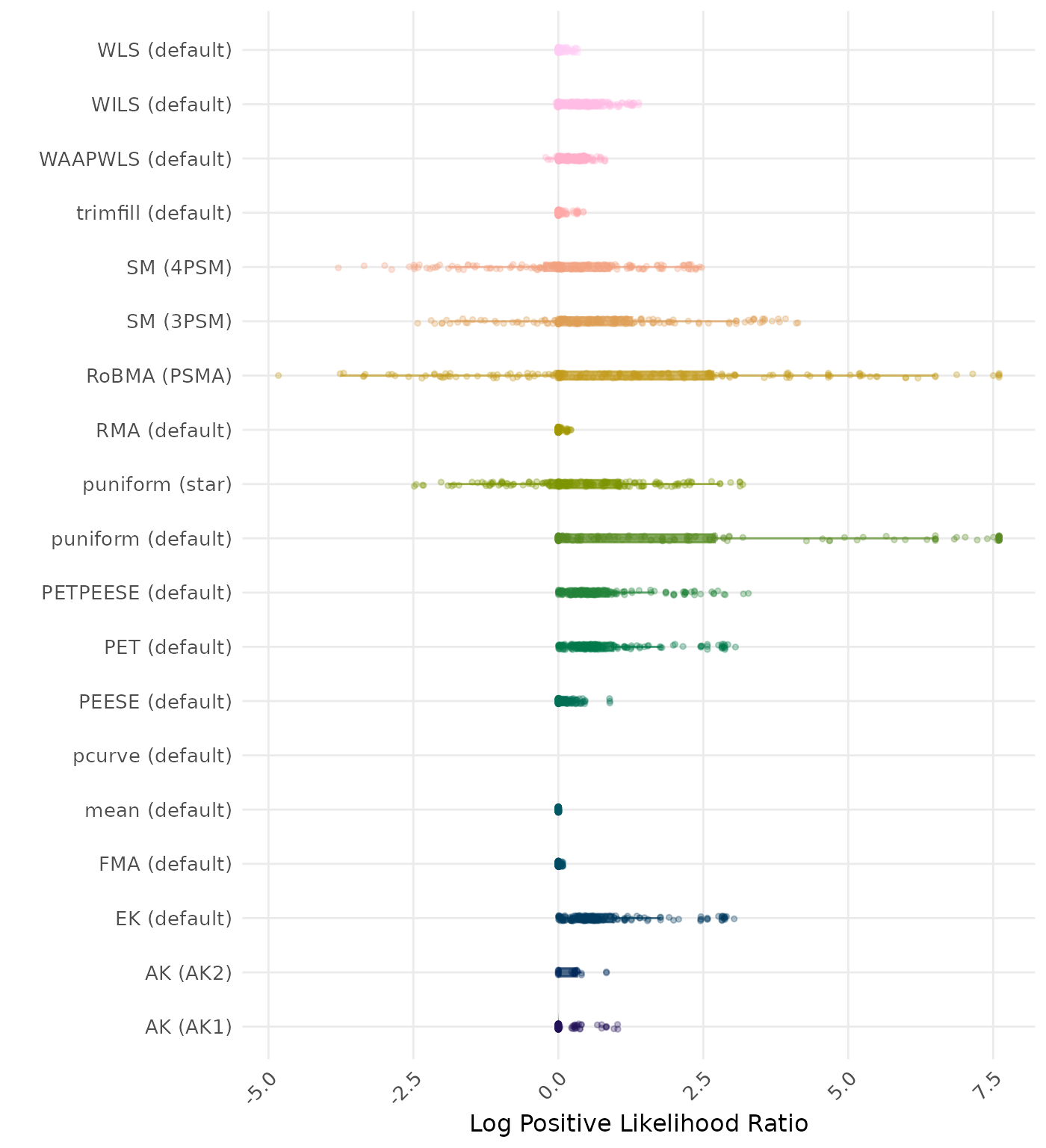

The positive likelihood ratio is an overall summary measure of hypothesis testing performance that combines power and type I error rate. It indicates how much a significant test result changes the odds of the alternative hypothesis versus the null hypothesis. A useful method has a positive likelihood ratio greater than 1 (or a log positive likelihood ratio greater than 0). A higher (log) positive likelihood ratio indicates a better method.
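
Under the standard diagnostic-test definition, the positive likelihood ratio is the ratio of power to type I error rate. This definition is assumed here; the report does not state how power and type I error are paired across conditions. By Bayes' rule, it multiplies the prior odds of the alternative hypothesis to give the posterior odds after a significant result:

$$
\mathrm{LR}^{+} = \frac{P(\text{reject } H_0 \mid H_1)}{P(\text{reject } H_0 \mid H_0)} = \frac{\text{power}}{\text{type I error rate}}, \qquad \frac{P(H_1 \mid \text{reject})}{P(H_0 \mid \text{reject})} = \mathrm{LR}^{+} \cdot \frac{P(H_1)}{P(H_0)}
$$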

| Rank | Method | Log Value | Rank | Method | Log Value |
|---|---|---|---|---|---|
| 1 | PETPEESE (default) | -4.367 | 1 | PETPEESE (default) | -4.367 |
| 2 | PET (default) | -4.242 | 2 | PET (default) | -4.242 |
| 3 | EK (default) | -4.242 | 3 | EK (default) | -4.242 |
| 4 | WAAPWLS (default) | -4.199 | 4 | WAAPWLS (default) | -4.199 |
| 5 | PEESE (default) | -3.861 | 5 | PEESE (default) | -3.861 |
| 6 | puniform (default) | -3.695 | 6 | AK (AK2) | -3.721 |
| 7 | WLS (default) | -3.403 | 7 | puniform (default) | -3.695 |
| 8 | trimfill (default) | -3.240 | 8 | WLS (default) | -3.403 |
| 9 | AK (AK1) | -3.054 | 9 | trimfill (default) | -3.241 |
| 10 | FMA (default) | -3.028 | 10 | AK (AK1) | -3.057 |
| 11 | RMA (default) | -2.866 | 11 | FMA (default) | -3.028 |
| 12 | SM (3PSM) | -2.688 | 12 | RMA (default) | -2.866 |
| 13 | puniform (star) | -2.427 | 13 | SM (3PSM) | -2.705 |
| 14 | WILS (default) | -2.292 | 14 | puniform (star) | -2.427 |
| 15 | RoBMA (PSMA) | -2.270 | 15 | WILS (default) | -2.292 |
| 16 | mean (default) | -2.164 | 16 | RoBMA (PSMA) | -2.266 |
| 17 | AK (AK2) | -1.915 | 17 | mean (default) | -2.164 |
| 18 | SM (4PSM) | -1.222 | 18 | SM (4PSM) | -1.314 |
| 19 | pcurve (default) | NaN | 19 | pcurve (default) | NaN |

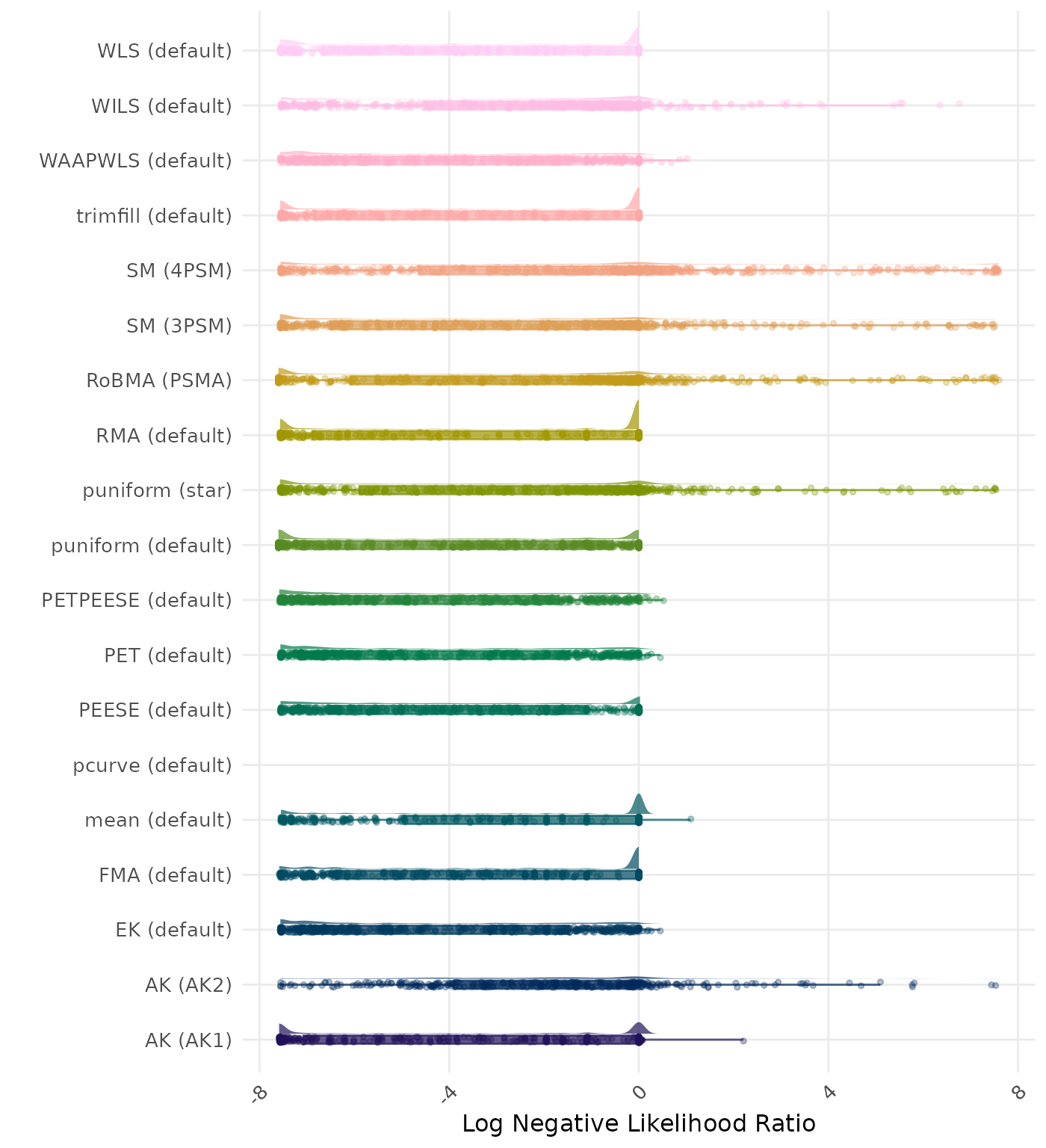

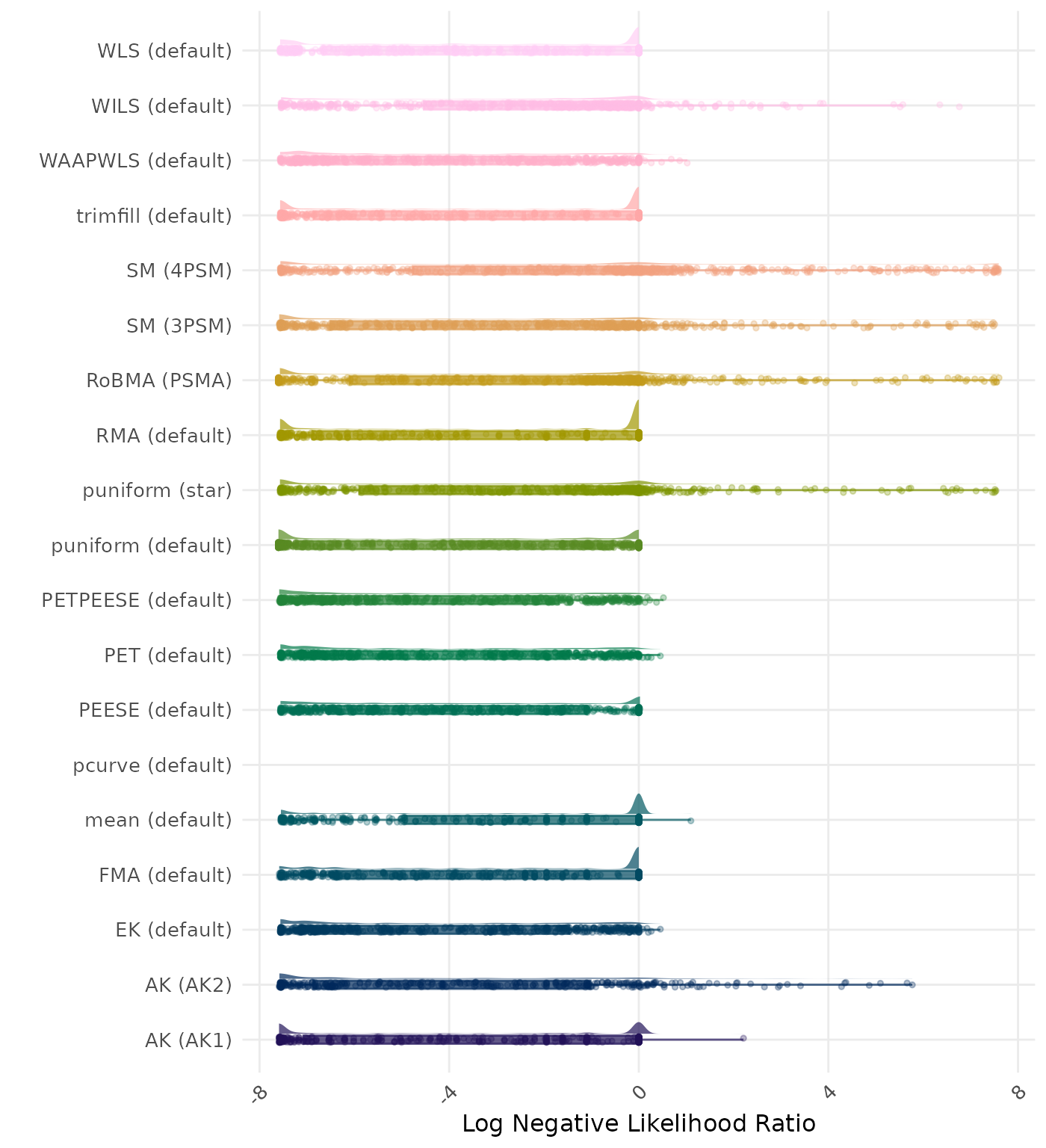

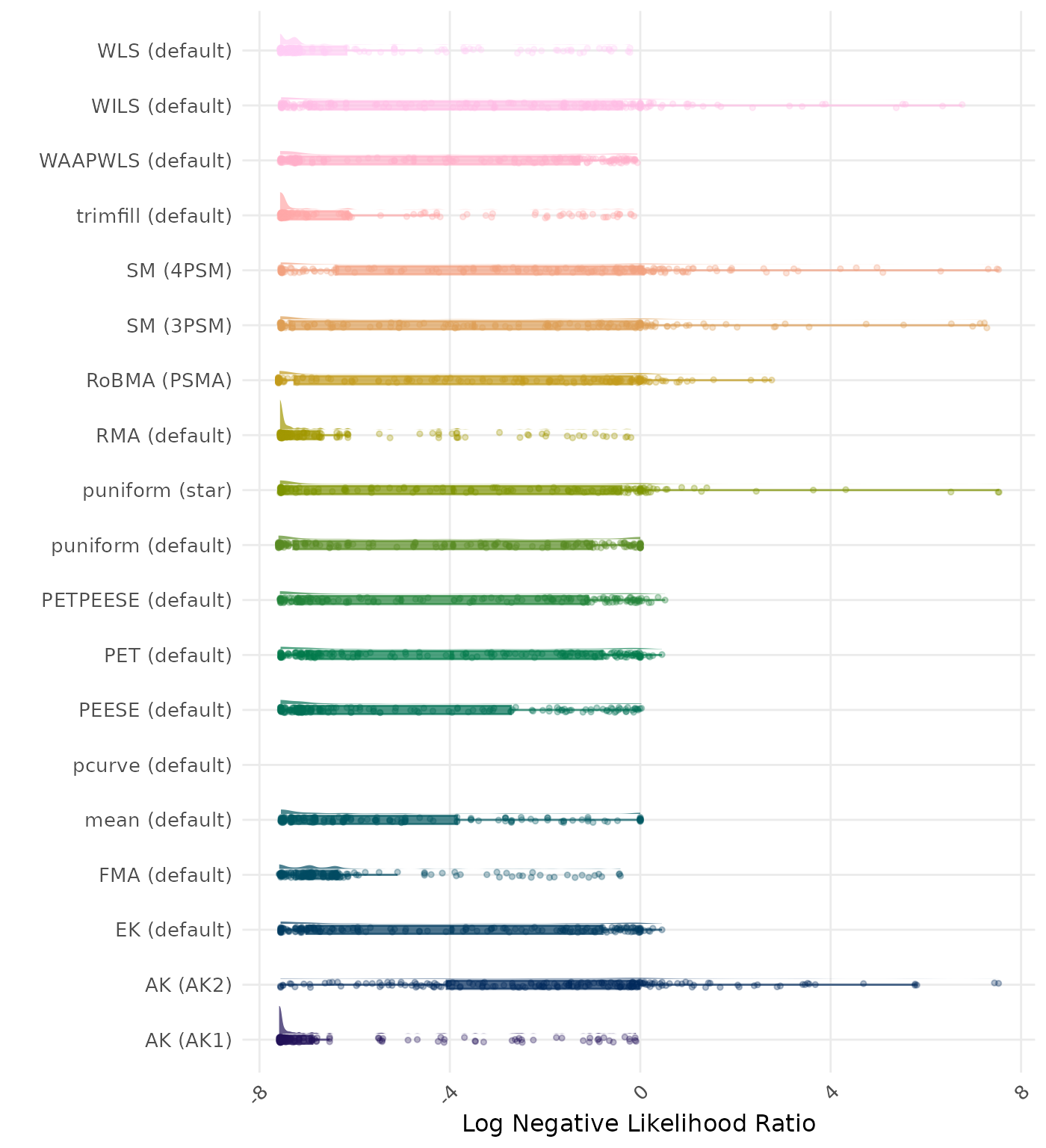

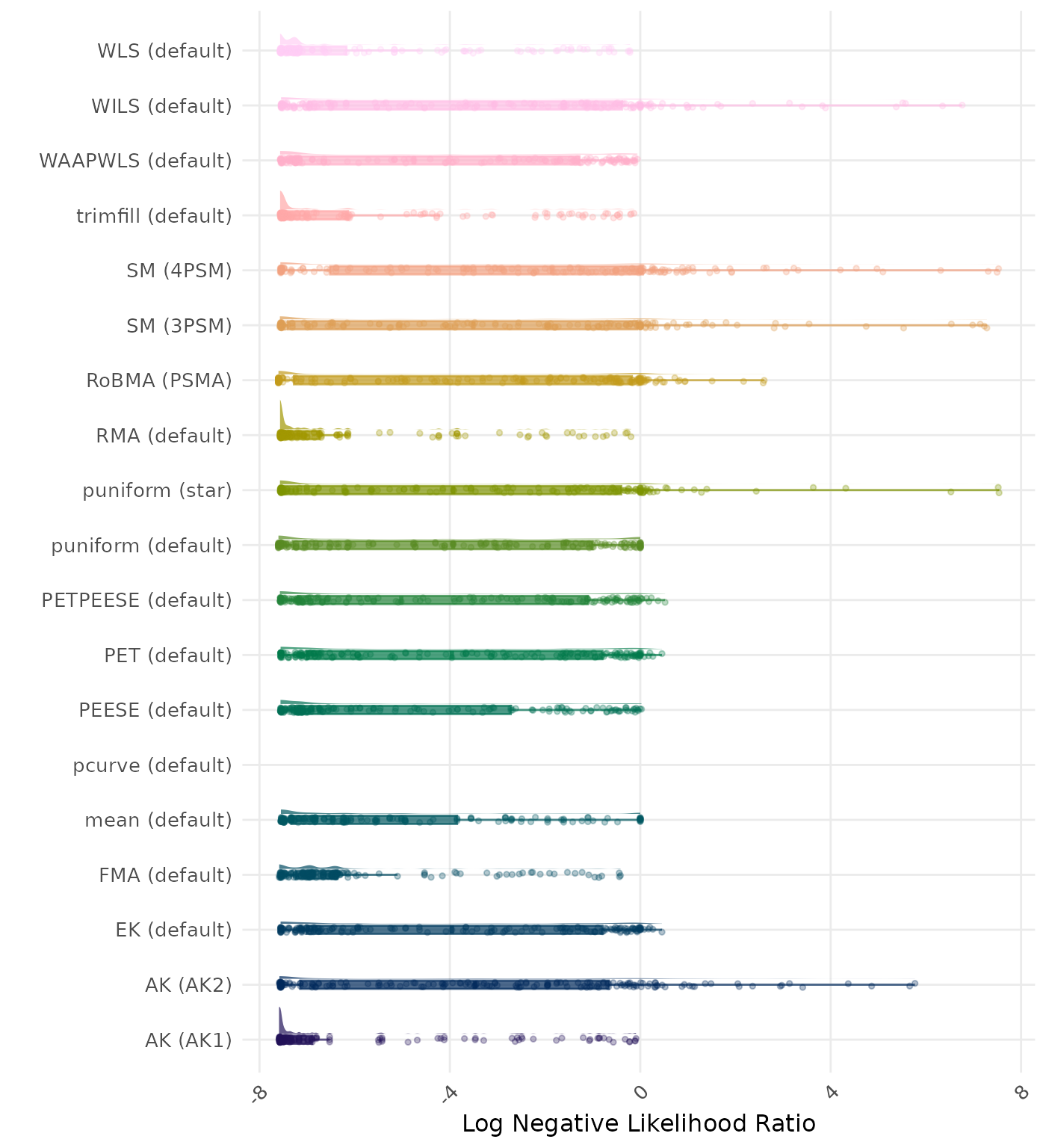

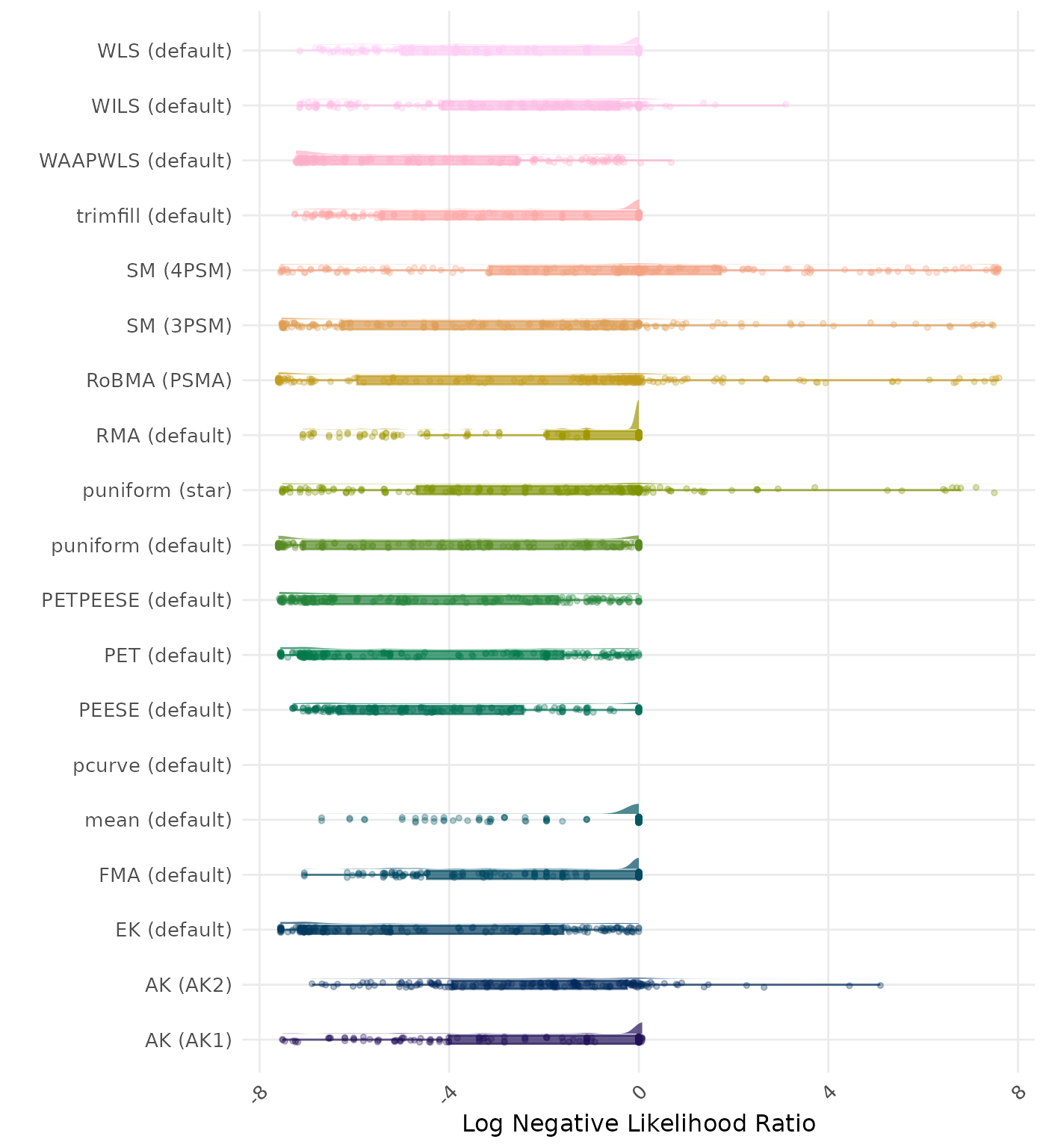

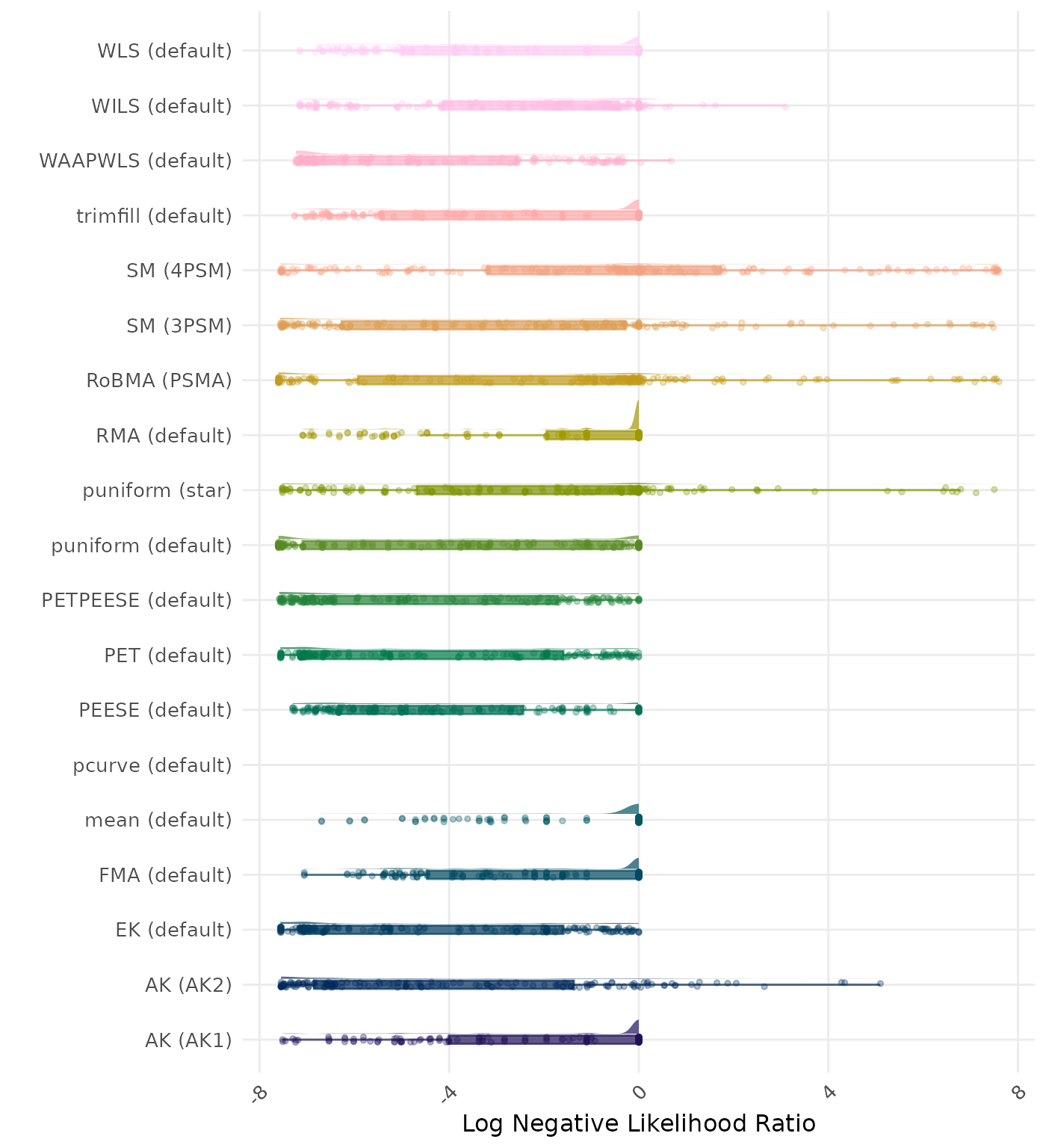

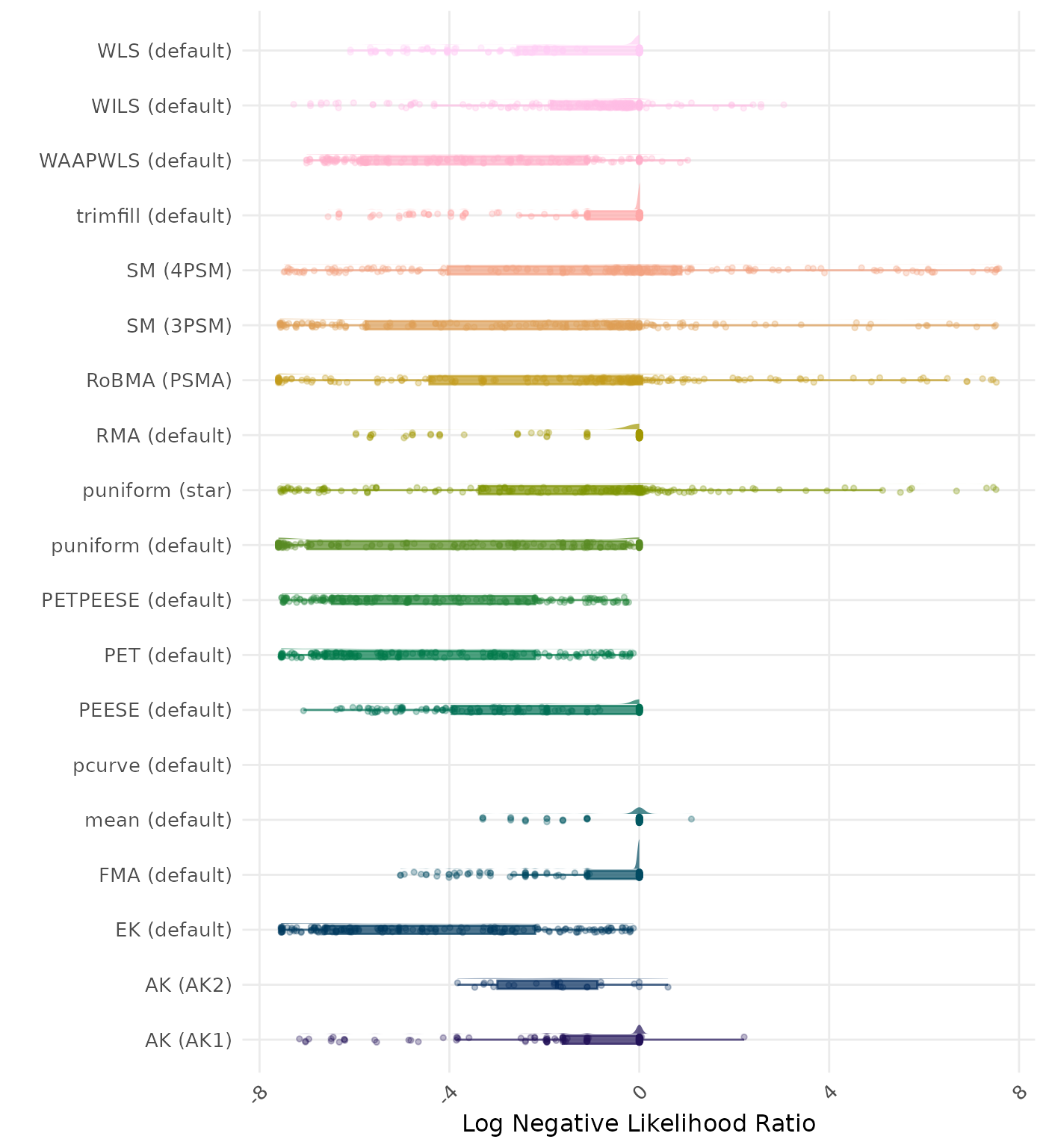

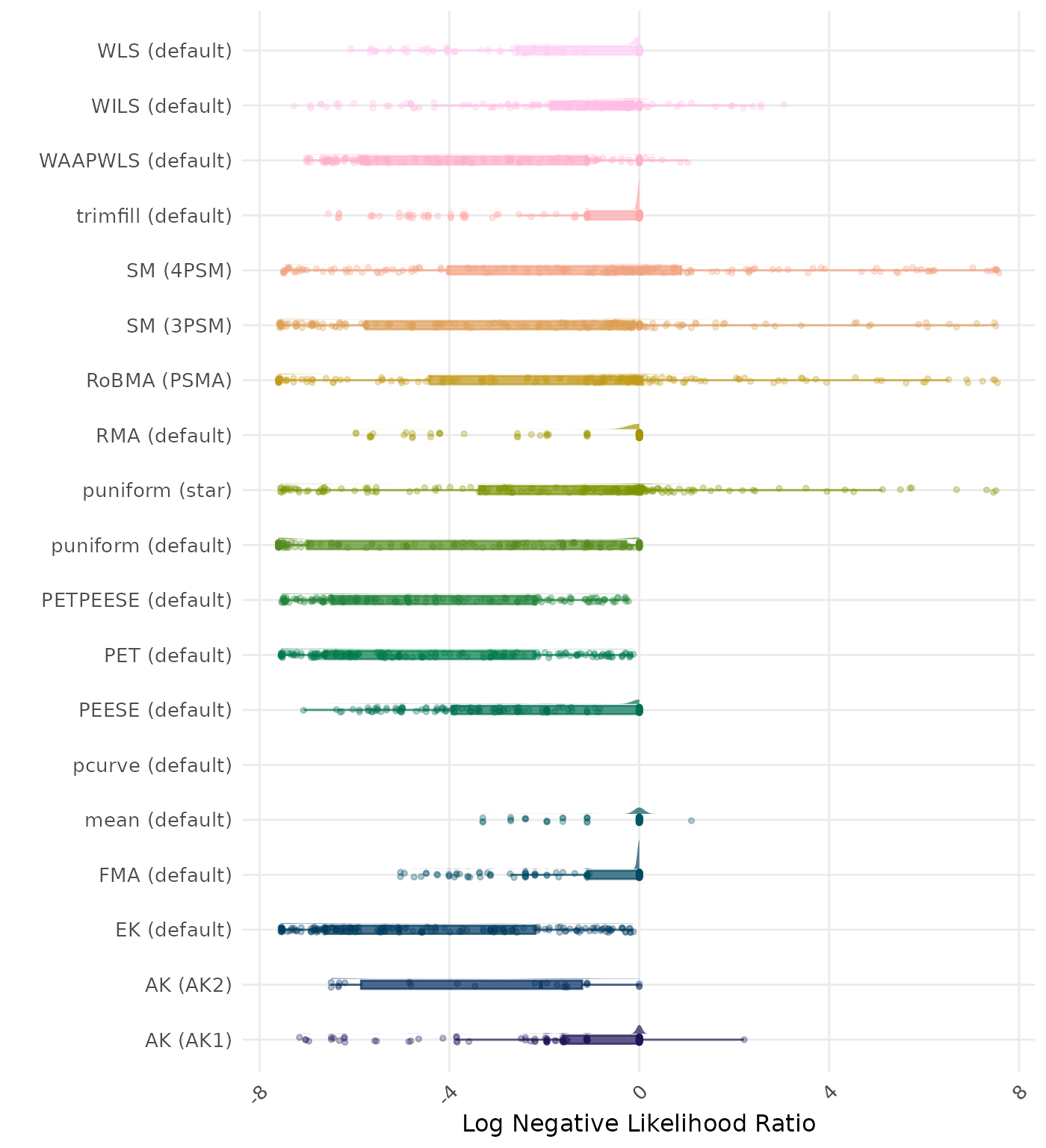

The negative likelihood ratio is an overall summary measure of hypothesis testing performance that combines power and type I error rate. It indicates how much a non-significant test result changes the odds of the alternative hypothesis versus the null hypothesis. A useful method has a negative likelihood ratio less than 1 (or a log negative likelihood ratio less than 0). A lower (log) negative likelihood ratio indicates a better method.
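
Both likelihood ratios can be computed from a test's power and type I error rate, assuming the standard diagnostic-test definitions. The sketch uses the natural logarithm for the log values, which is an assumption since the report does not name the base. `likelihood_ratios` is a hypothetical helper.

```python
import math


def likelihood_ratios(power, type1_error):
    """Return (LR+, LR-) of a significance test from its power and type I error rate."""
    lr_pos = power / type1_error  # P(significant | H1) / P(significant | H0)
    lr_neg = (1 - power) / (1 - type1_error)  # P(non-significant | H1) / P(non-significant | H0)
    return lr_pos, lr_neg


lr_pos, lr_neg = likelihood_ratios(power=0.8, type1_error=0.05)
print(math.log(lr_pos), math.log(lr_neg))  # log LR+ > 0 and log LR- < 0: a useful test
```

With 80% power and a 5% type I error rate, LR+ = 16: a significant result turns even prior odds of 1:1 into posterior odds of 16:1. LR− = 0.2 / 0.95 ≈ 0.21, so a non-significant result lowers the odds of the alternative by roughly a factor of five.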

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | AK (AK2) | 0.276 | 1 | RoBMA (PSMA) | 0.283 |
| 2 | RoBMA (PSMA) | 0.278 | 2 | PETPEESE (default) | 0.403 |
| 3 | PETPEESE (default) | 0.403 | 3 | PET (default) | 0.421 |
| 4 | PET (default) | 0.421 | 4 | EK (default) | 0.421 |
| 5 | EK (default) | 0.421 | 5 | AK (AK2) | 0.439 |
| 6 | puniform (star) | 0.443 | 6 | puniform (star) | 0.443 |
| 7 | puniform (default) | 0.457 | 7 | puniform (default) | 0.457 |
| 8 | SM (3PSM) | 0.468 | 8 | SM (3PSM) | 0.473 |
| 9 | WAAPWLS (default) | 0.506 | 9 | WAAPWLS (default) | 0.506 |
| 10 | SM (4PSM) | 0.543 | 10 | SM (4PSM) | 0.545 |
| 11 | WILS (default) | 0.557 | 11 | WILS (default) | 0.557 |
| 12 | PEESE (default) | 0.638 | 12 | PEESE (default) | 0.638 |
| 13 | AK (AK1) | 0.642 | 13 | AK (AK1) | 0.642 |
| 14 | trimfill (default) | 0.660 | 14 | trimfill (default) | 0.660 |
| 15 | WLS (default) | 0.690 | 15 | WLS (default) | 0.690 |
| 16 | RMA (default) | 0.702 | 16 | RMA (default) | 0.702 |
| 17 | FMA (default) | 0.796 | 17 | FMA (default) | 0.796 |
| 18 | mean (default) | 0.827 | 18 | mean (default) | 0.827 |
| 19 | pcurve (default) | NaN | 19 | pcurve (default) | NaN |

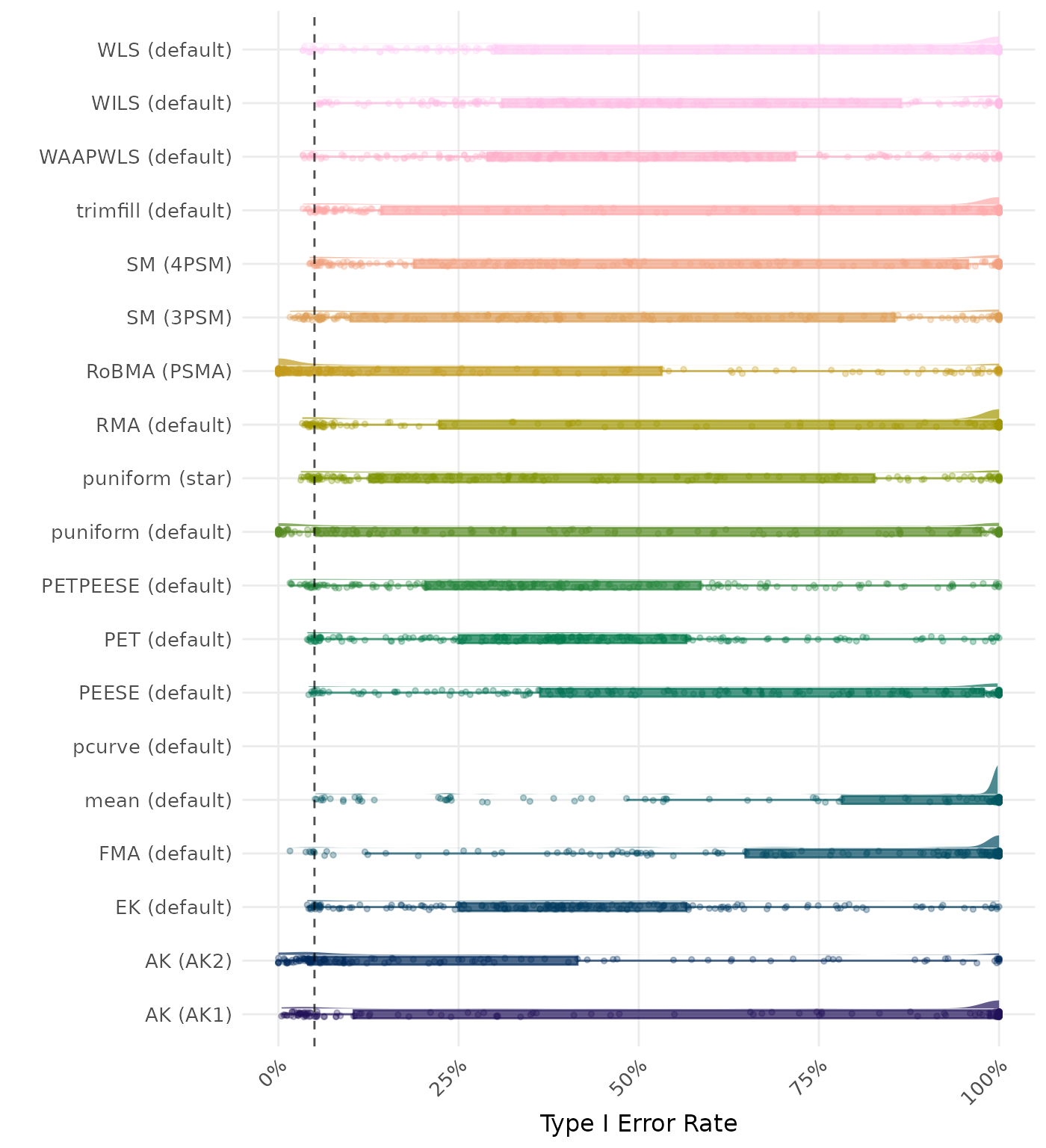

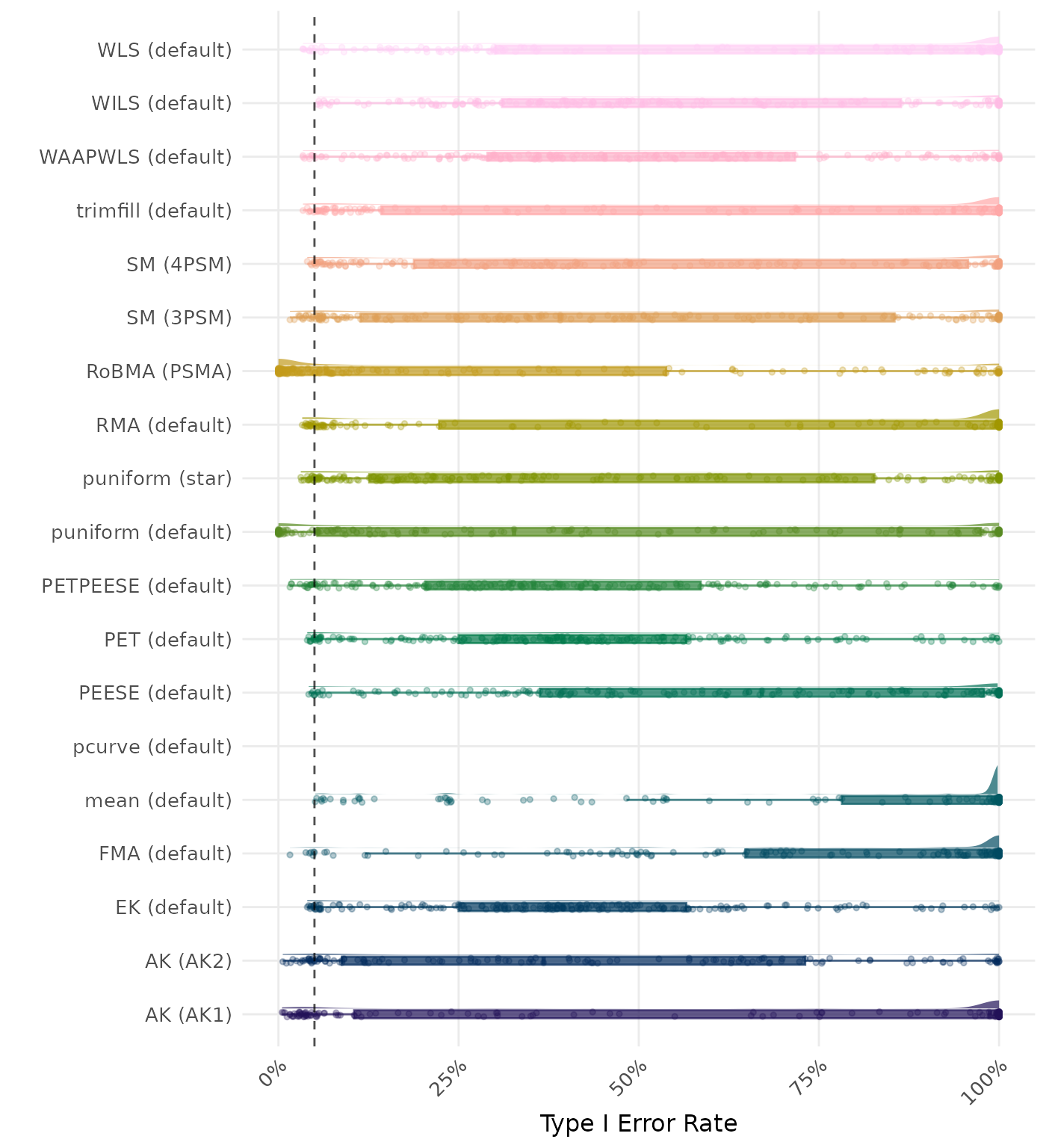

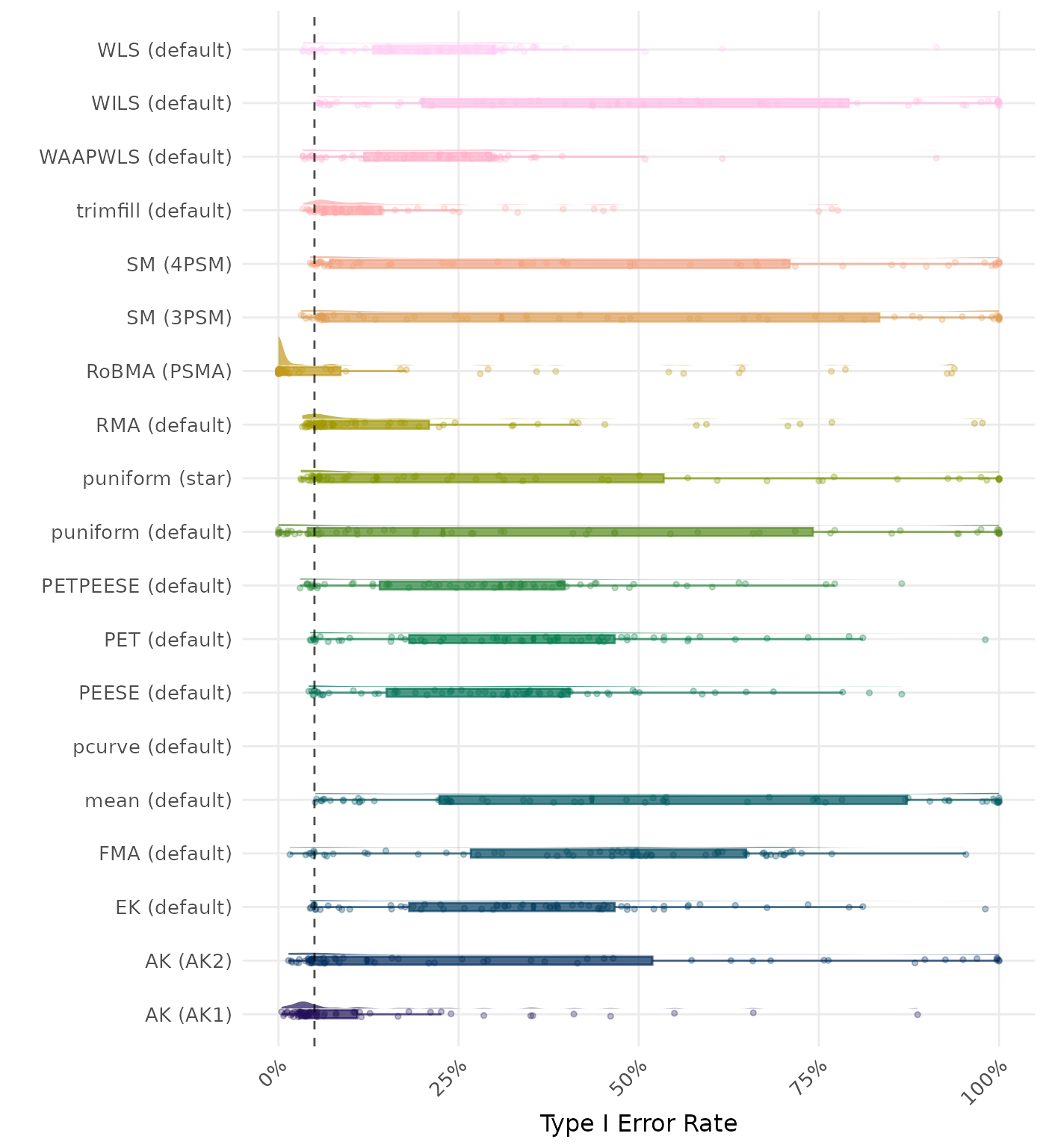

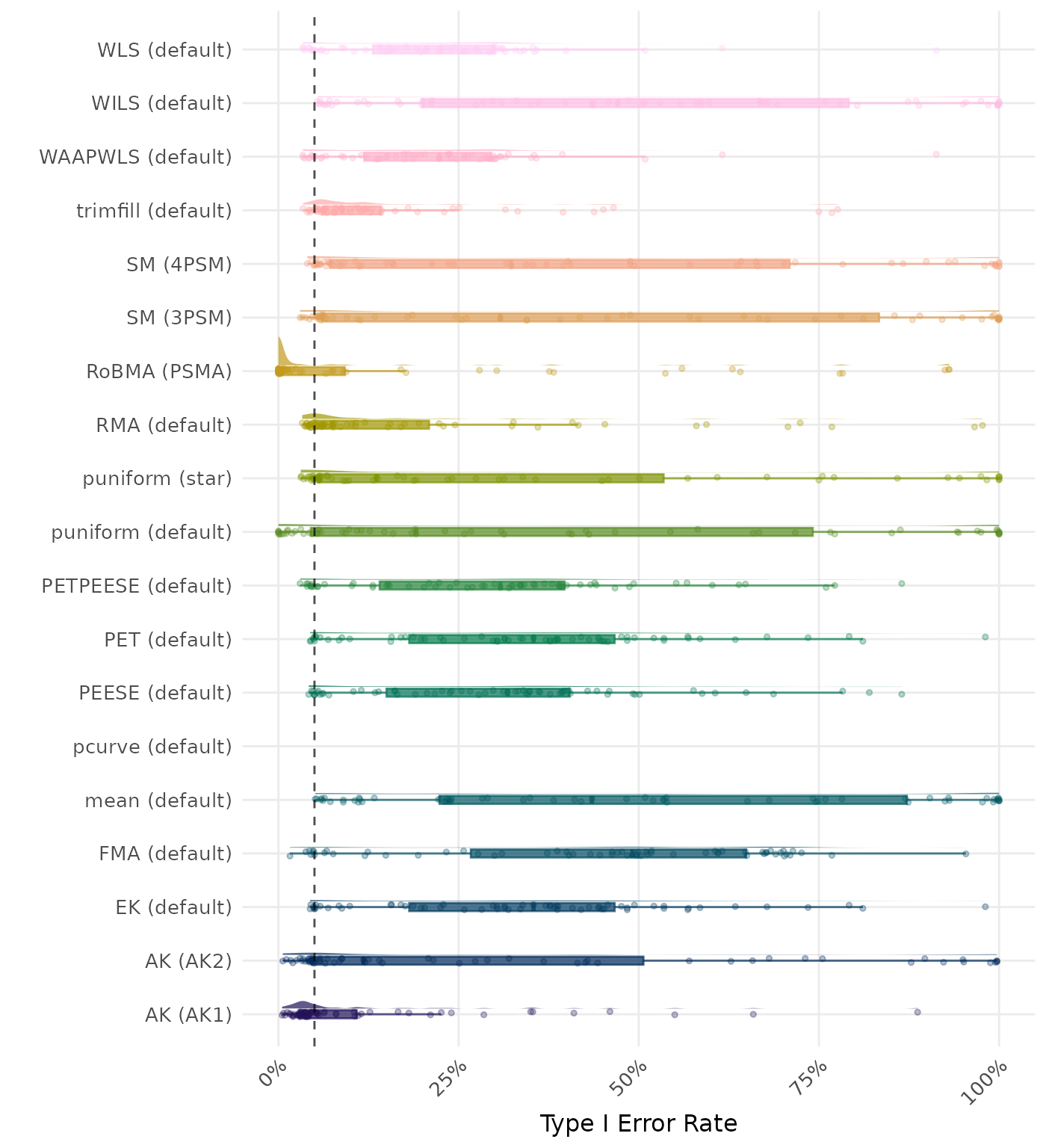

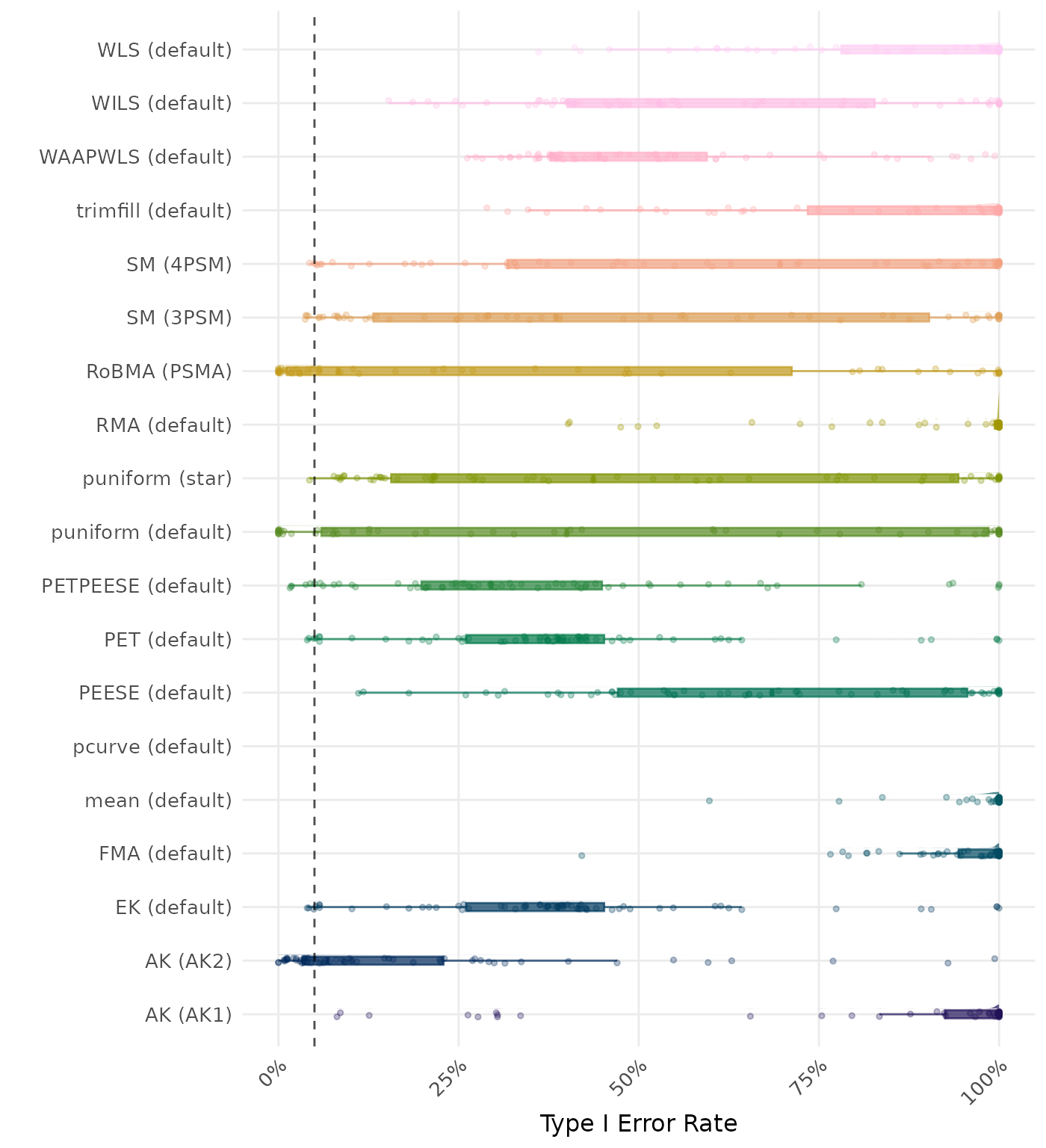

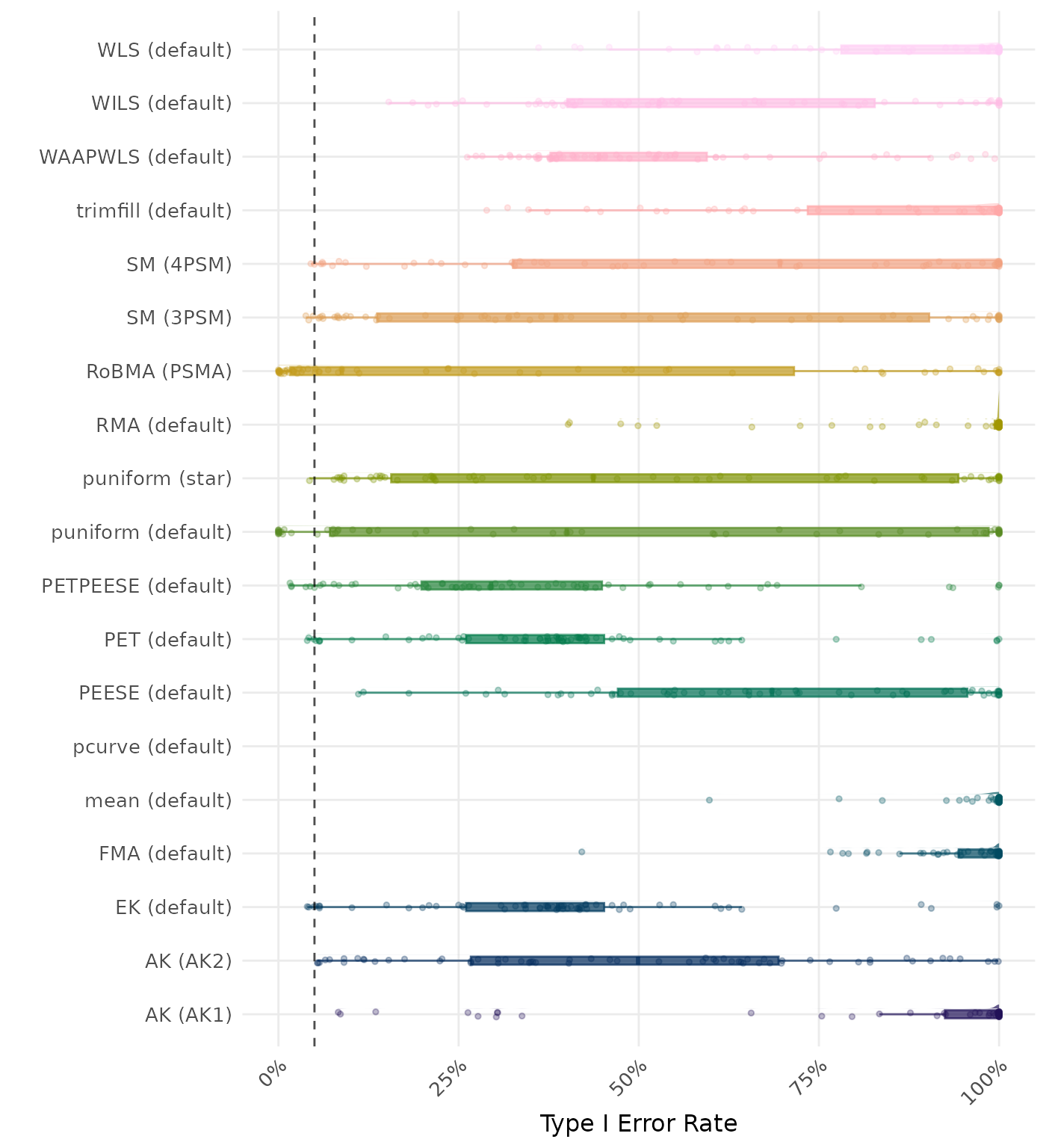

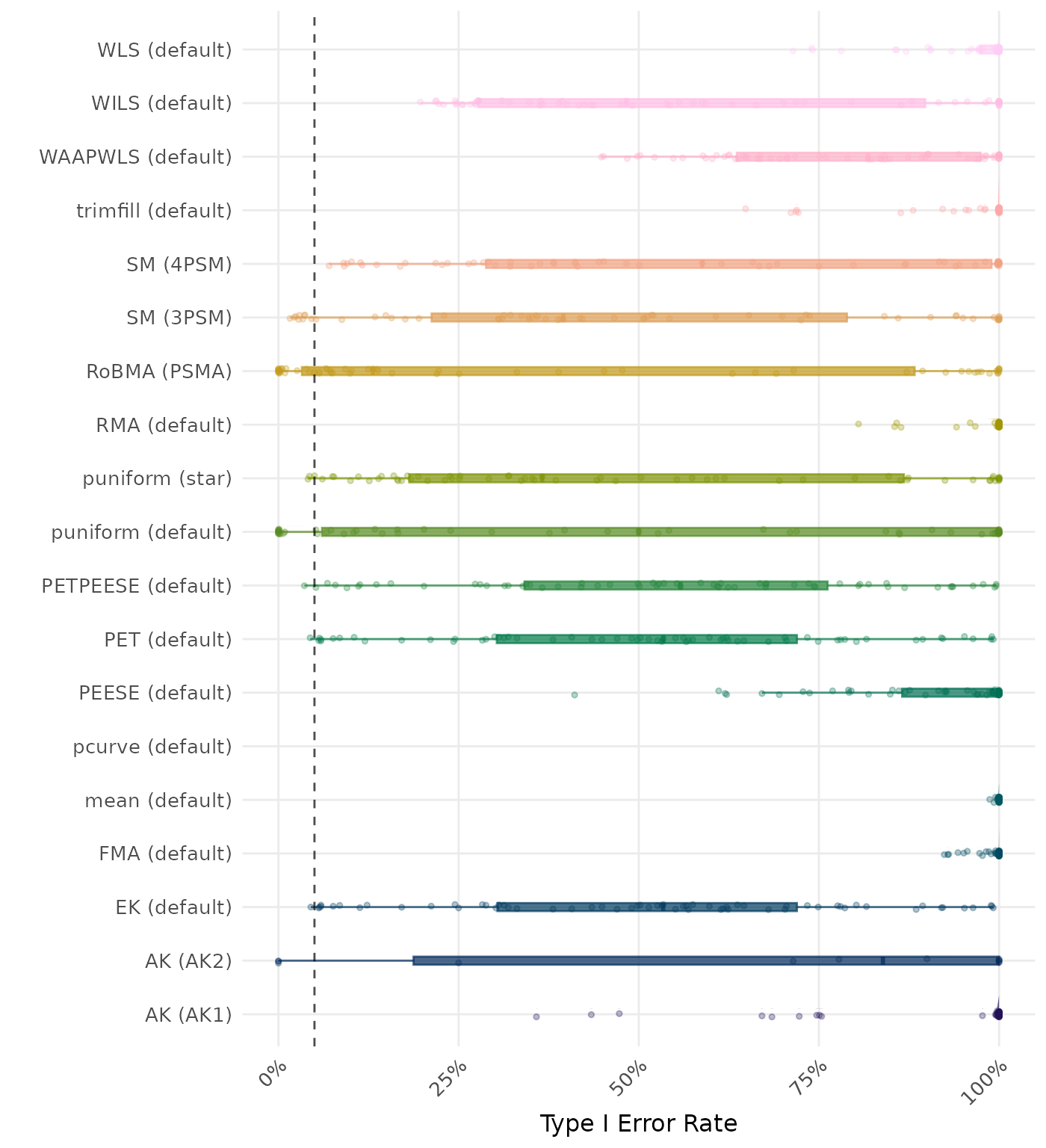

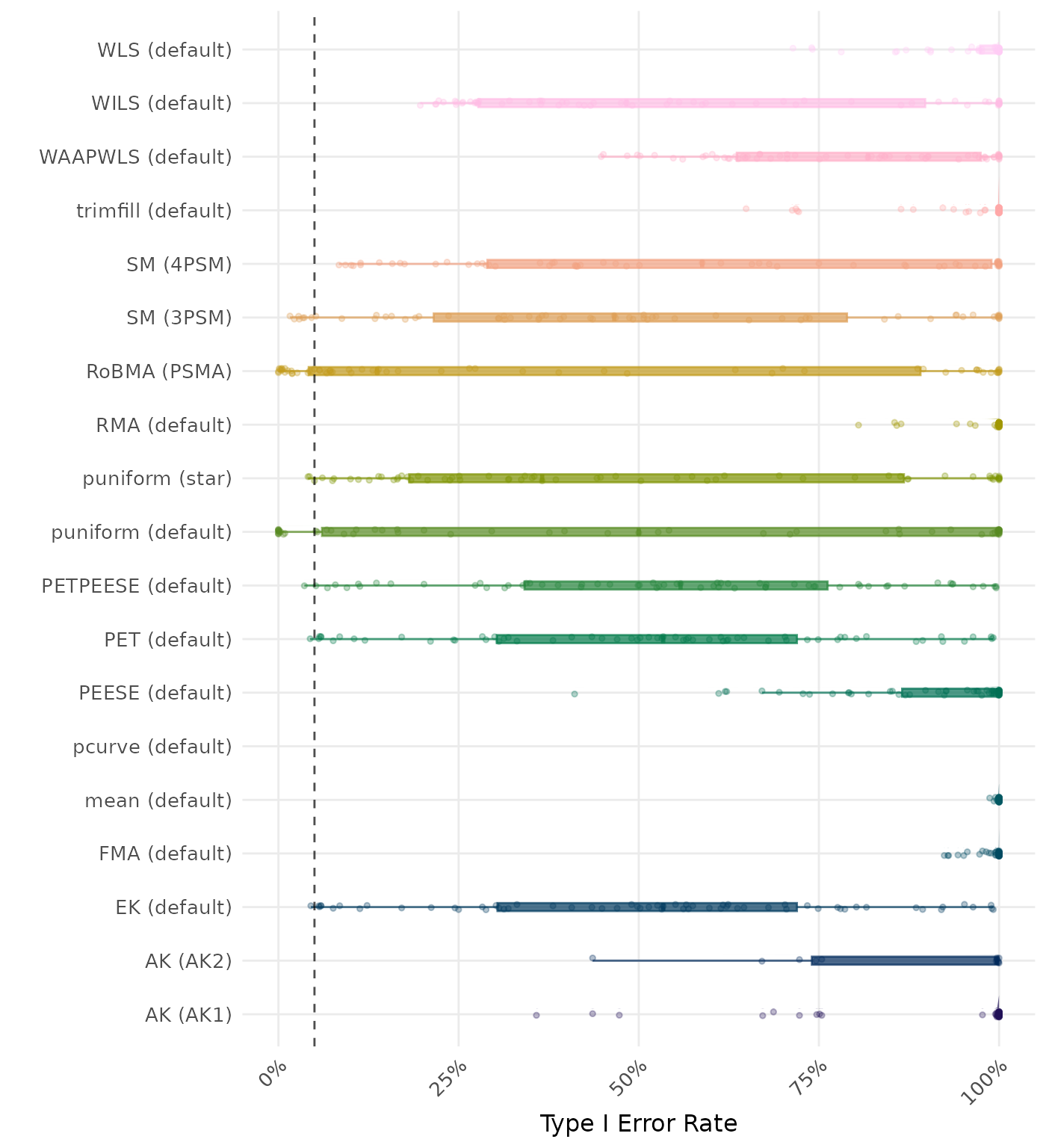

The type I error rate is the proportion of simulation runs in which the null hypothesis of no effect was incorrectly rejected when it was true. Ideally, this value should be close to the nominal level of 5%.

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | mean (default) | 0.995 | 1 | mean (default) | 0.995 |
| 2 | FMA (default) | 0.994 | 2 | FMA (default) | 0.994 |
| 3 | RMA (default) | 0.989 | 3 | RMA (default) | 0.989 |
| 4 | WLS (default) | 0.985 | 4 | WLS (default) | 0.985 |
| 5 | trimfill (default) | 0.980 | 5 | trimfill (default) | 0.980 |
| 6 | AK (AK1) | 0.979 | 6 | AK (AK1) | 0.979 |
| 7 | PEESE (default) | 0.963 | 7 | PEESE (default) | 0.963 |
| 8 | WAAPWLS (default) | 0.925 | 8 | WAAPWLS (default) | 0.925 |
| 9 | puniform (default) | 0.913 | 9 | puniform (default) | 0.913 |
| 10 | PETPEESE (default) | 0.899 | 10 | PETPEESE (default) | 0.899 |
| 11 | EK (default) | 0.885 | 11 | EK (default) | 0.885 |
| 12 | PET (default) | 0.885 | 12 | PET (default) | 0.885 |
| 13 | WILS (default) | 0.846 | 13 | AK (AK2) | 0.858 |
| 14 | SM (3PSM) | 0.806 | 14 | WILS (default) | 0.846 |
| 15 | AK (AK2) | 0.748 | 15 | SM (3PSM) | 0.812 |
| 16 | puniform (star) | 0.746 | 16 | puniform (star) | 0.746 |
| 17 | SM (4PSM) | 0.697 | 17 | SM (4PSM) | 0.709 |
| 18 | RoBMA (PSMA) | 0.642 | 18 | RoBMA (PSMA) | 0.646 |
| 19 | pcurve (default) | NaN | 19 | pcurve (default) | NaN |

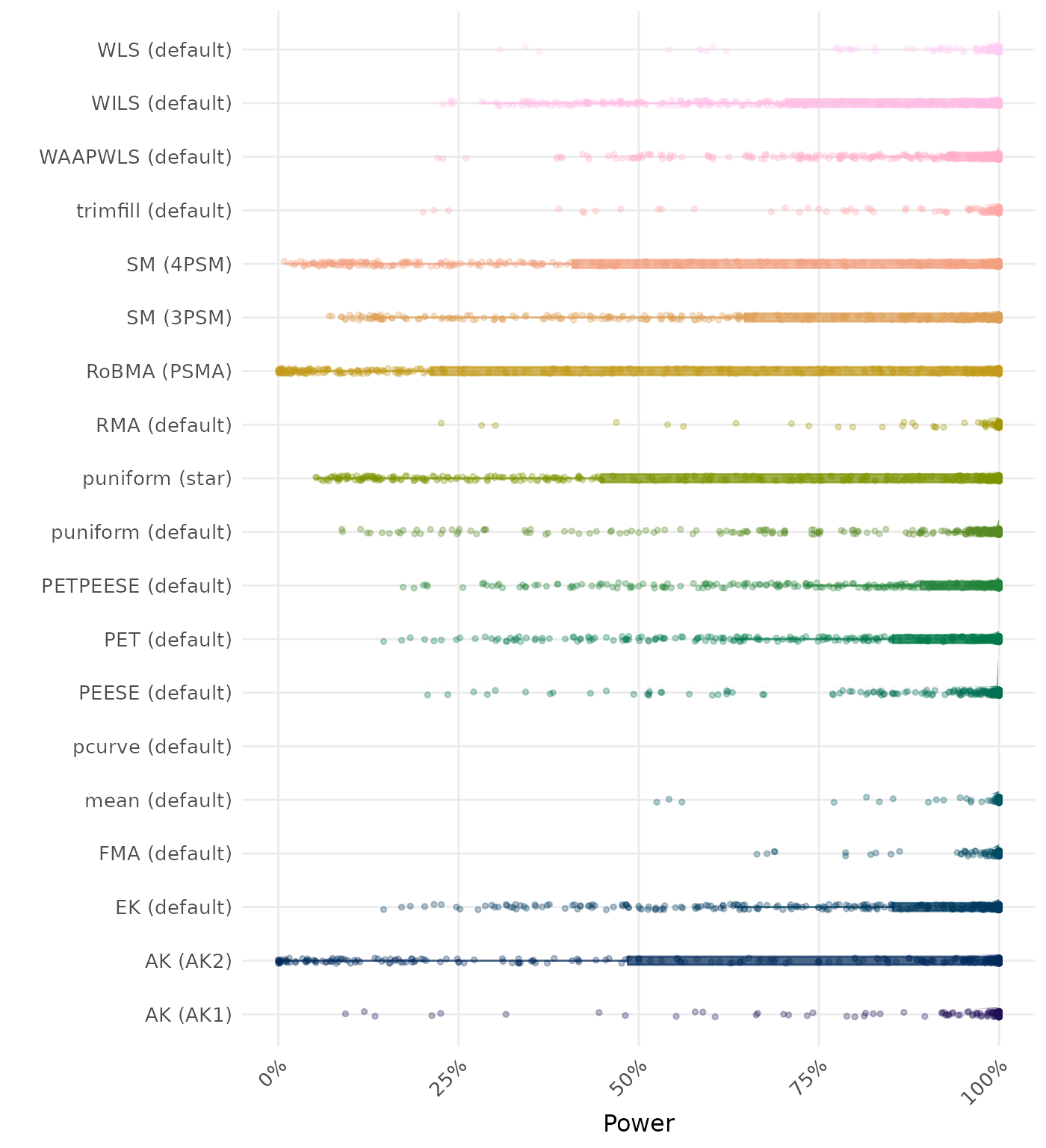

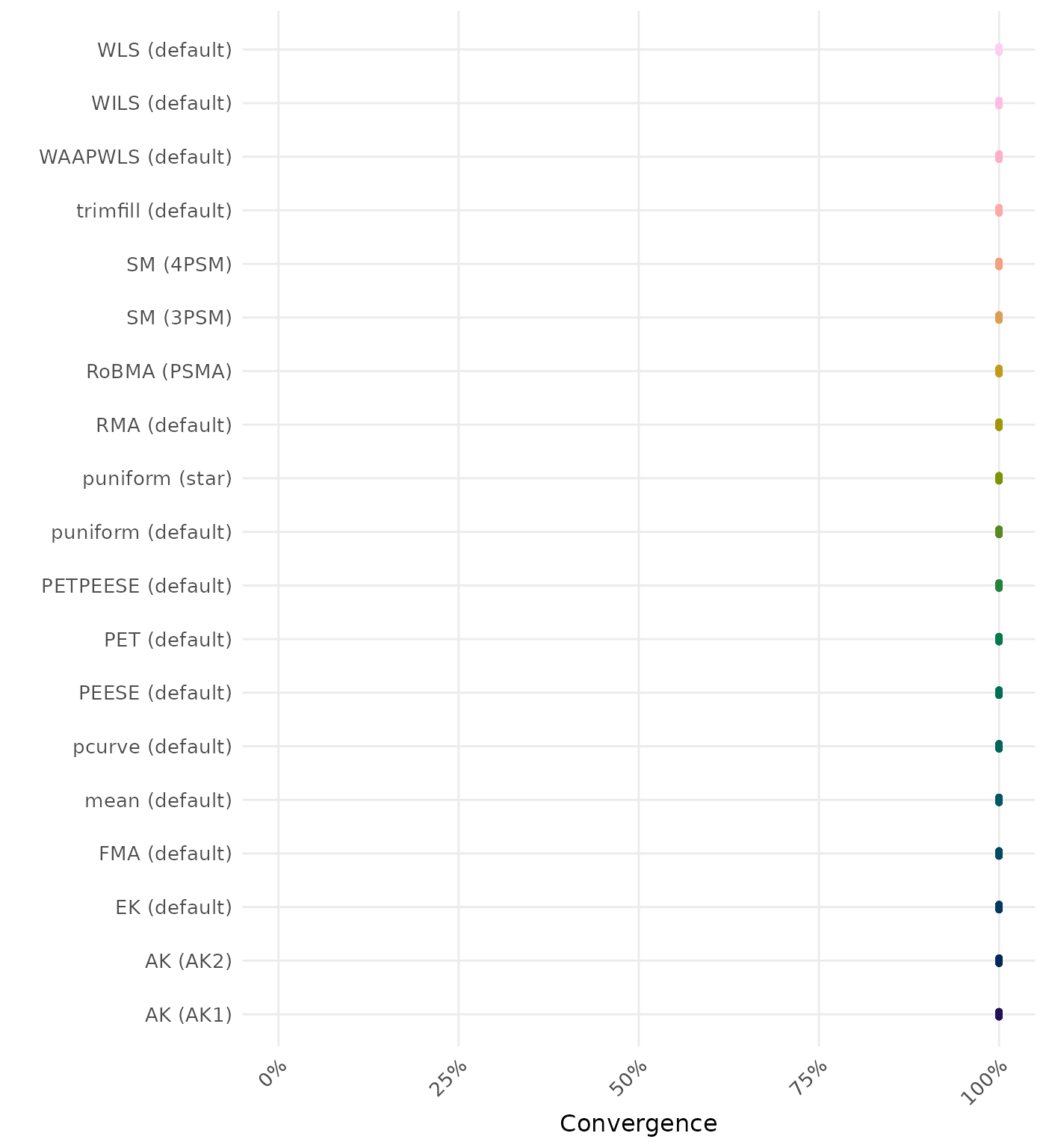

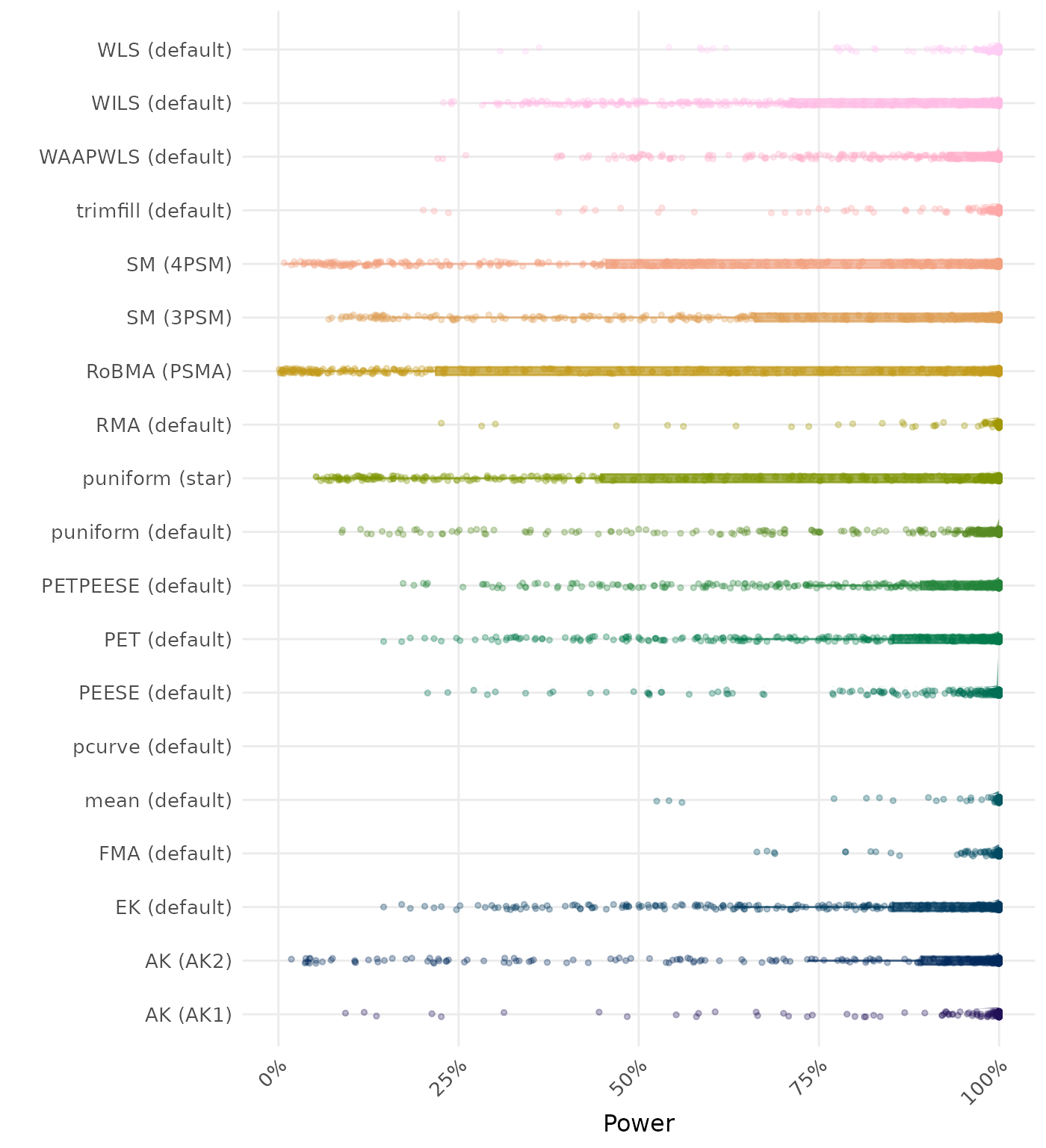

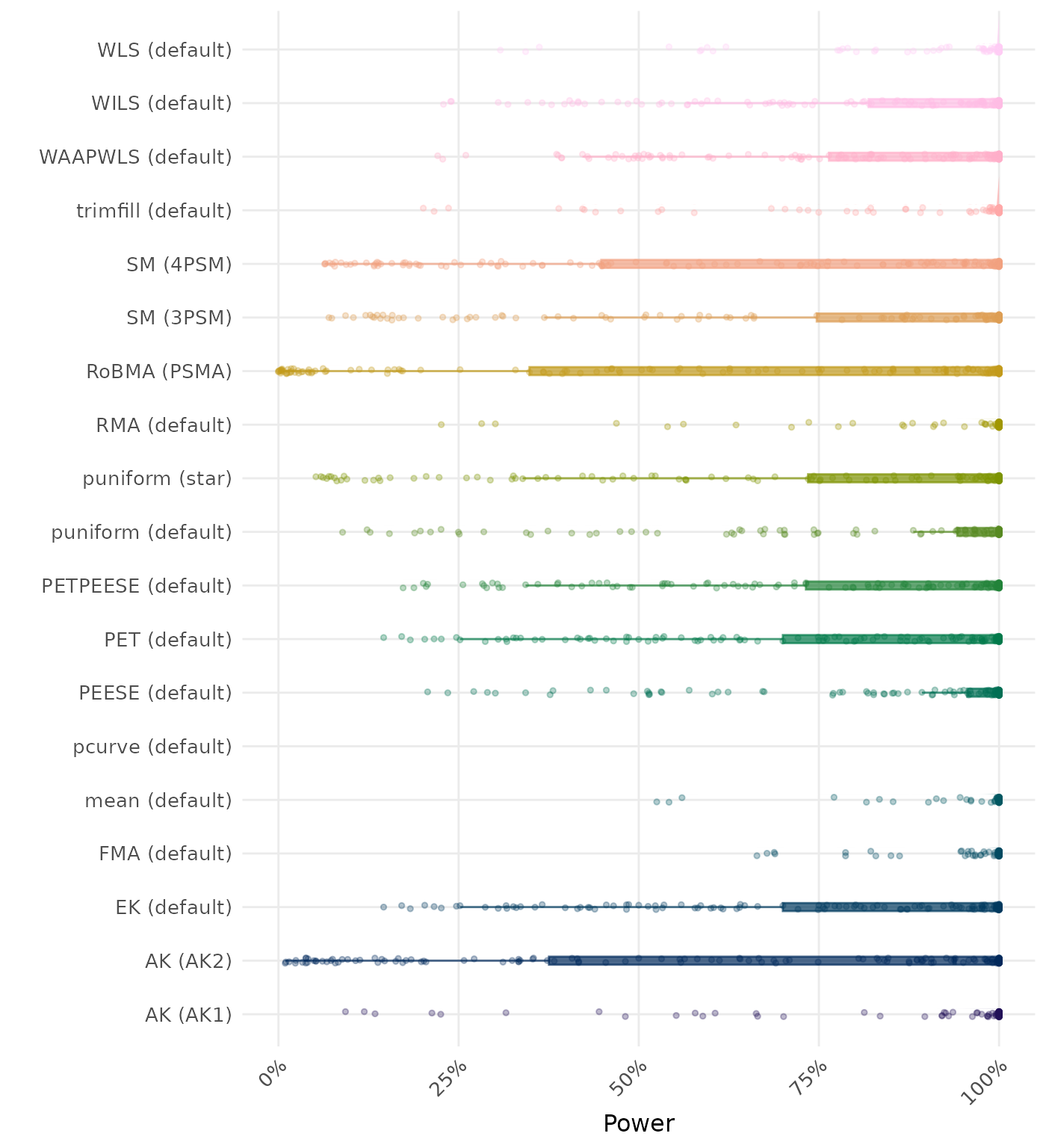

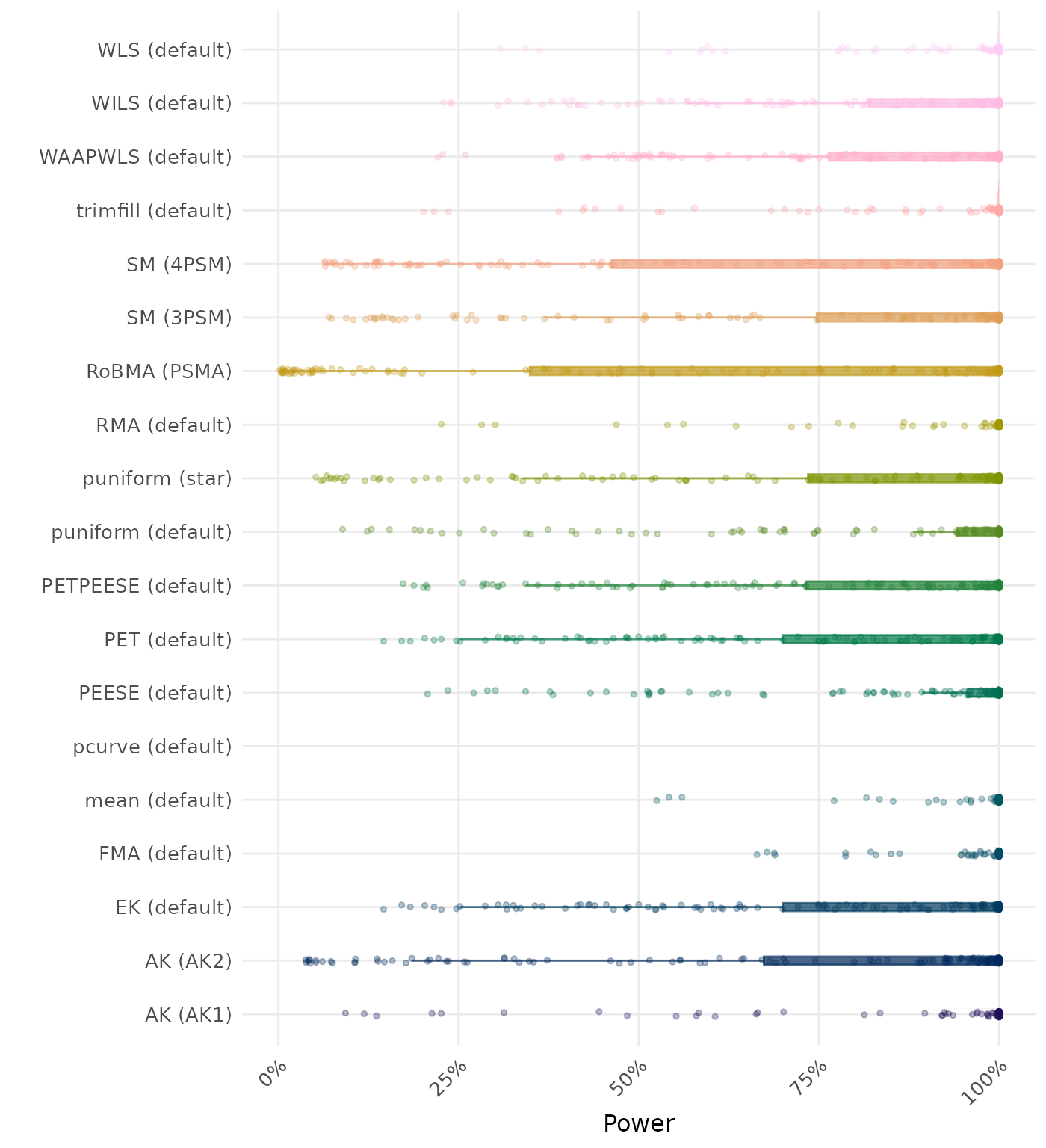

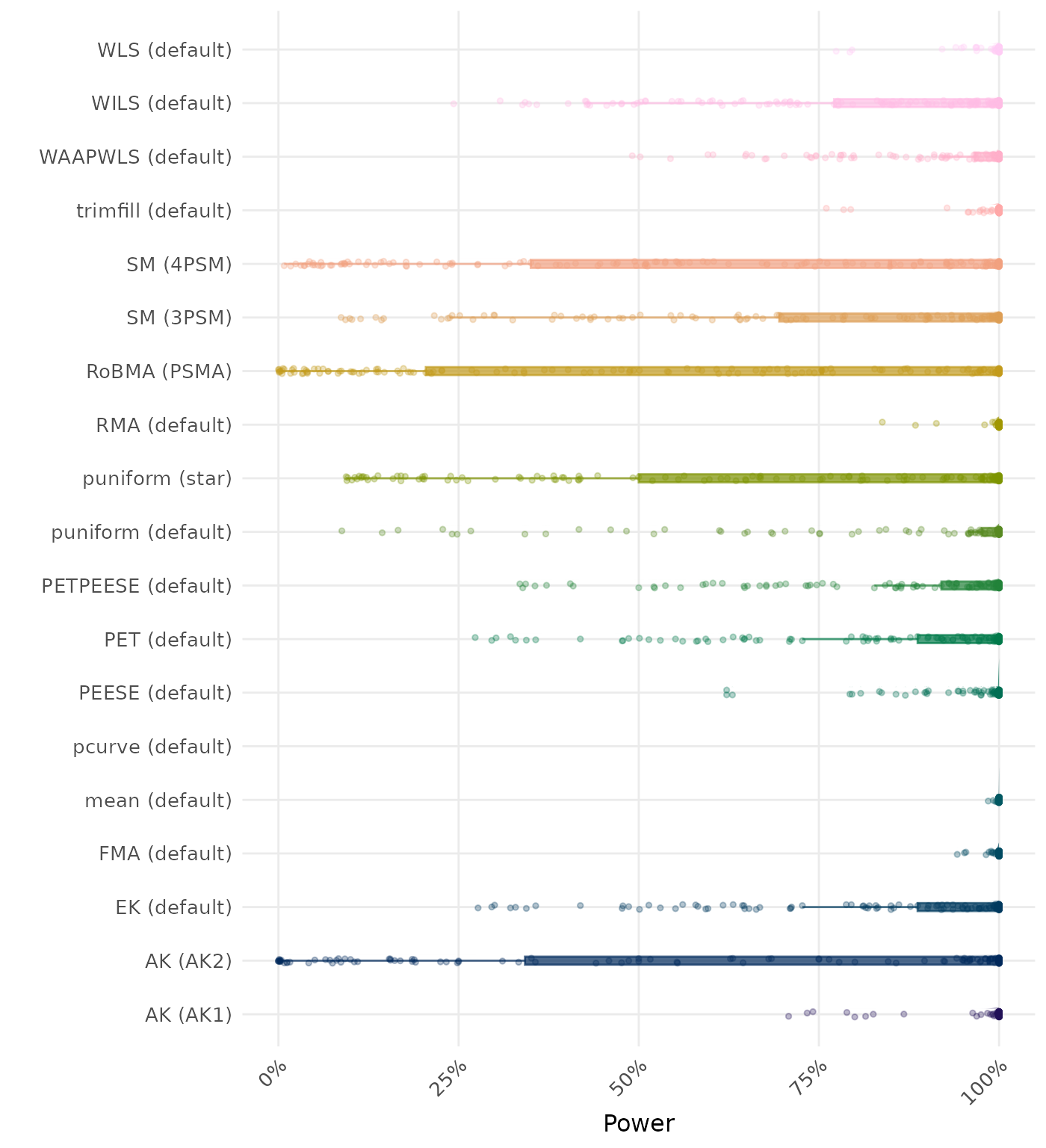

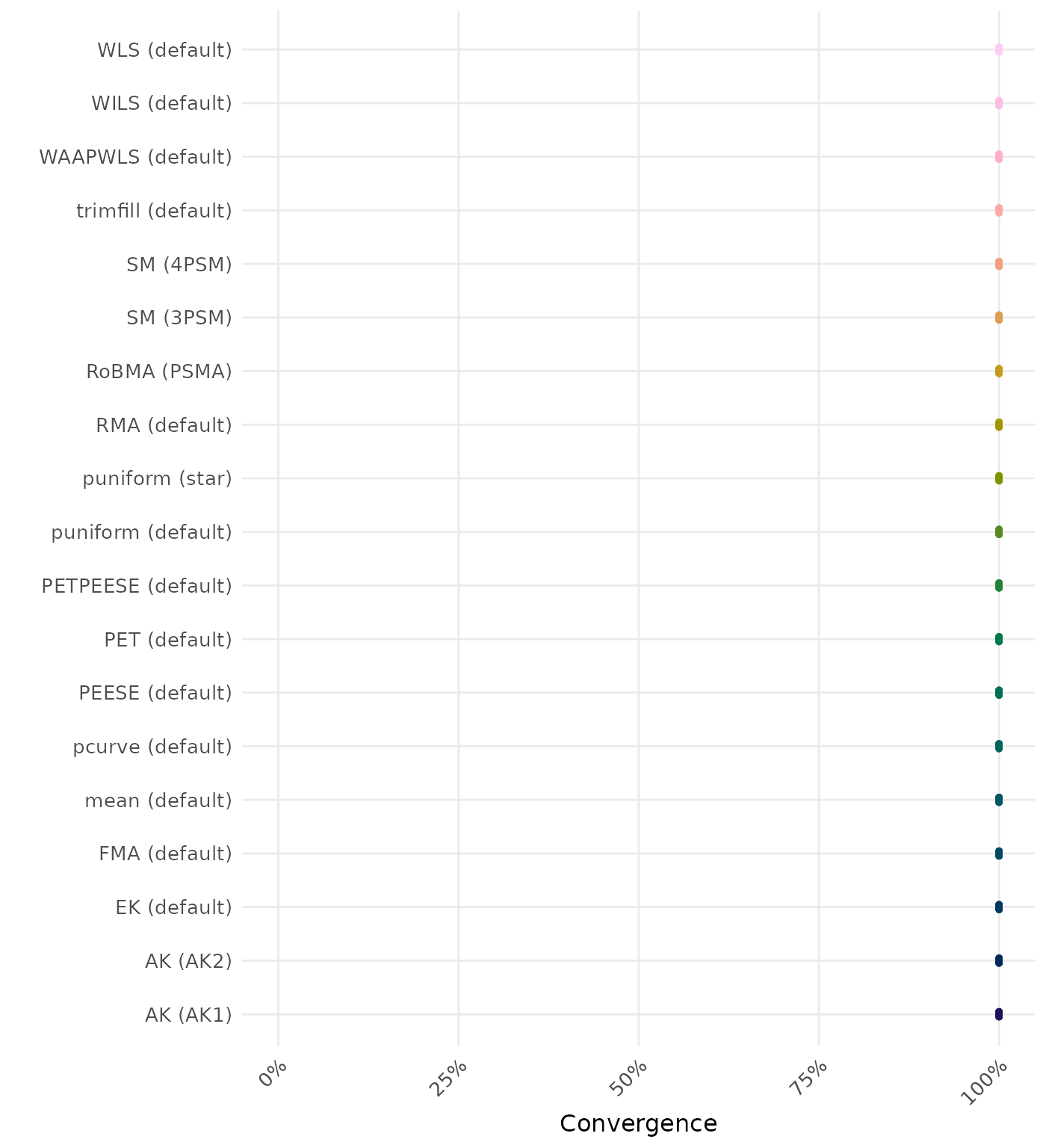

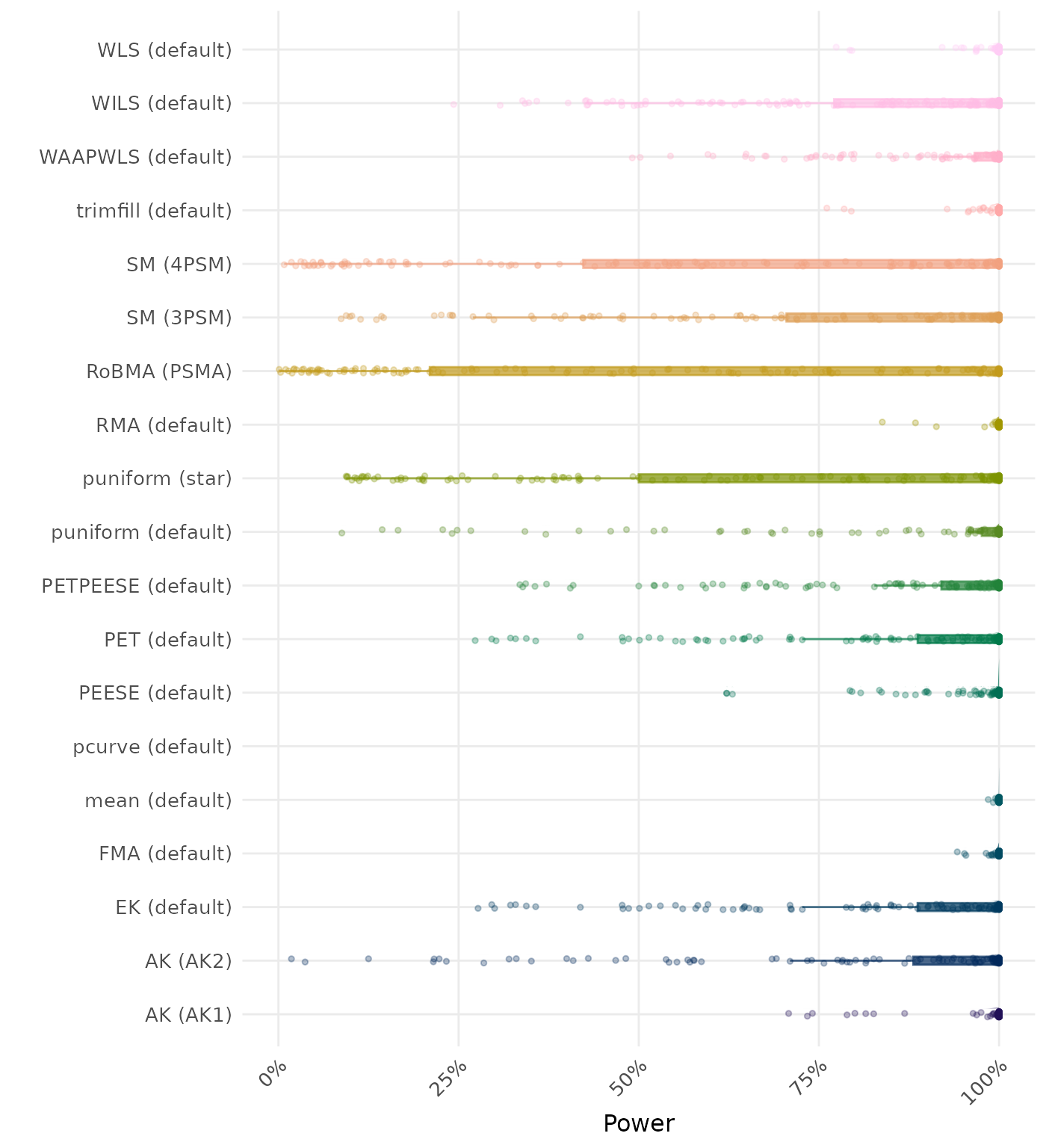

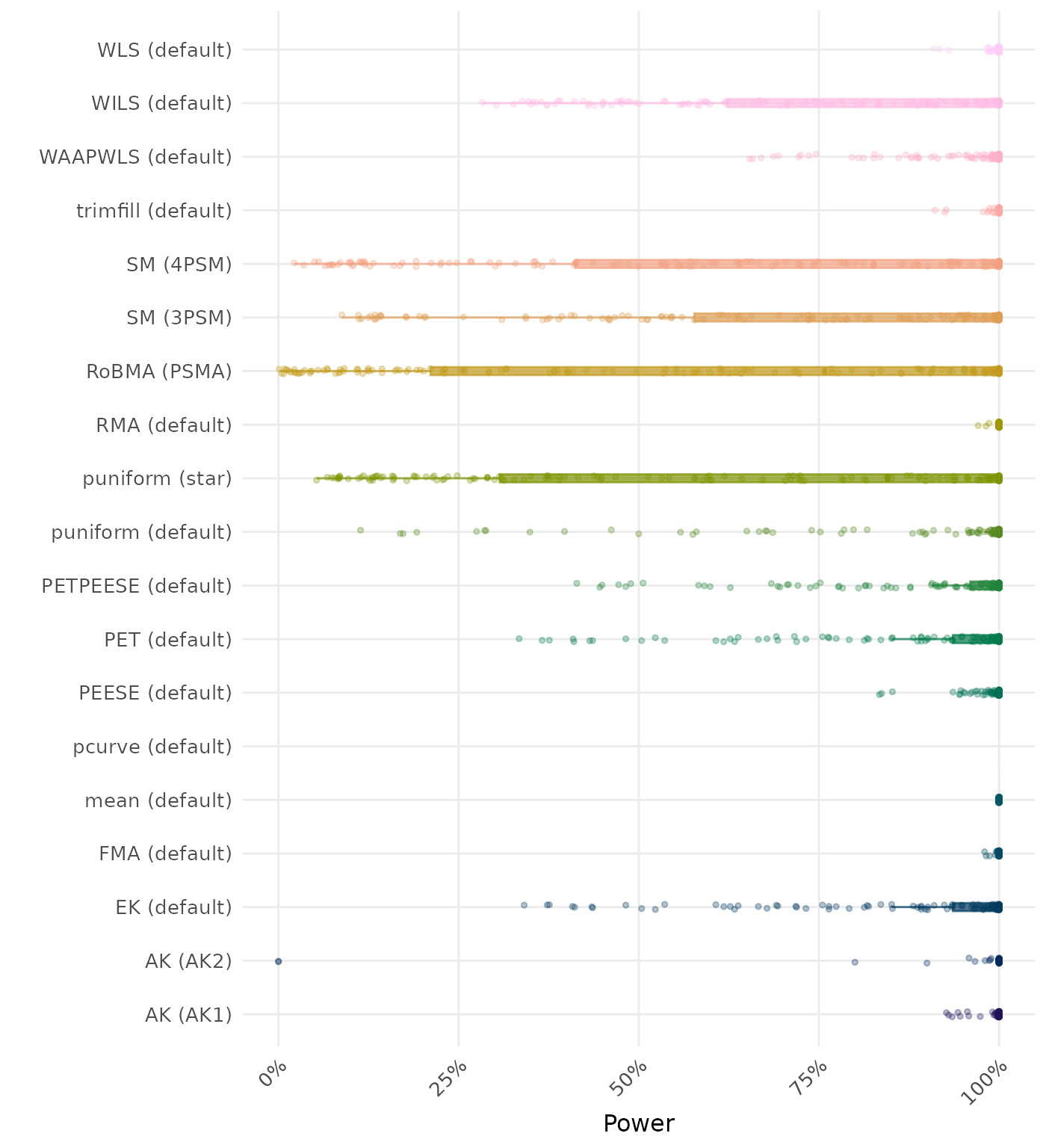

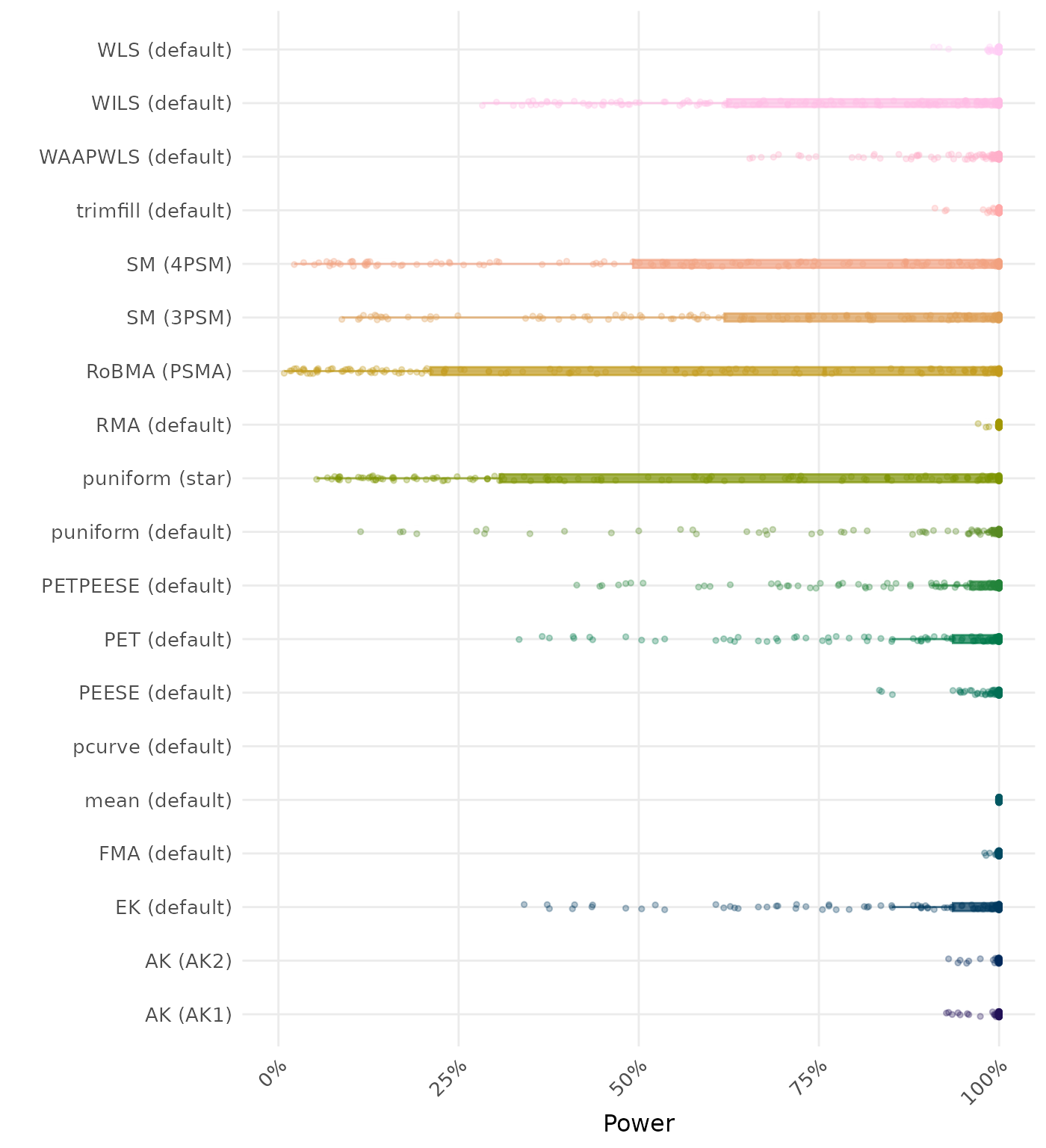

The power is the proportion of simulation runs in which the null hypothesis of no effect was correctly rejected when the alternative hypothesis was true. A higher power indicates a better method.
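
Type I error rate and power are the same quantity, the rejection rate, evaluated in different conditions. Under a true effect of zero it is the type I error rate; under a non-zero true effect it is power. The sketch assumes each run yields a p-value and that H0 is rejected when p < α = 0.05. Methods that do not report a p-value may use a different decision rule. The helper and the p-values are hypothetical.

```python
def rejection_rate(p_values, alpha=0.05):
    """Proportion of simulation runs in which the test rejects H0 (p < alpha)."""
    return sum(p < alpha for p in p_values) / len(p_values)


# Hypothetical p-values from four runs of a null condition and of a non-null condition.
type1_error = rejection_rate([0.01, 0.20, 0.60, 0.04])  # true effect is zero
power = rejection_rate([0.001, 0.03, 0.20, 0.01])  # true effect is non-zero
print(type1_error, power)
```

Here the null condition rejects in two of four runs (type I error rate 0.5, far above the nominal 5%). The non-null condition rejects in three of four (power 0.75).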

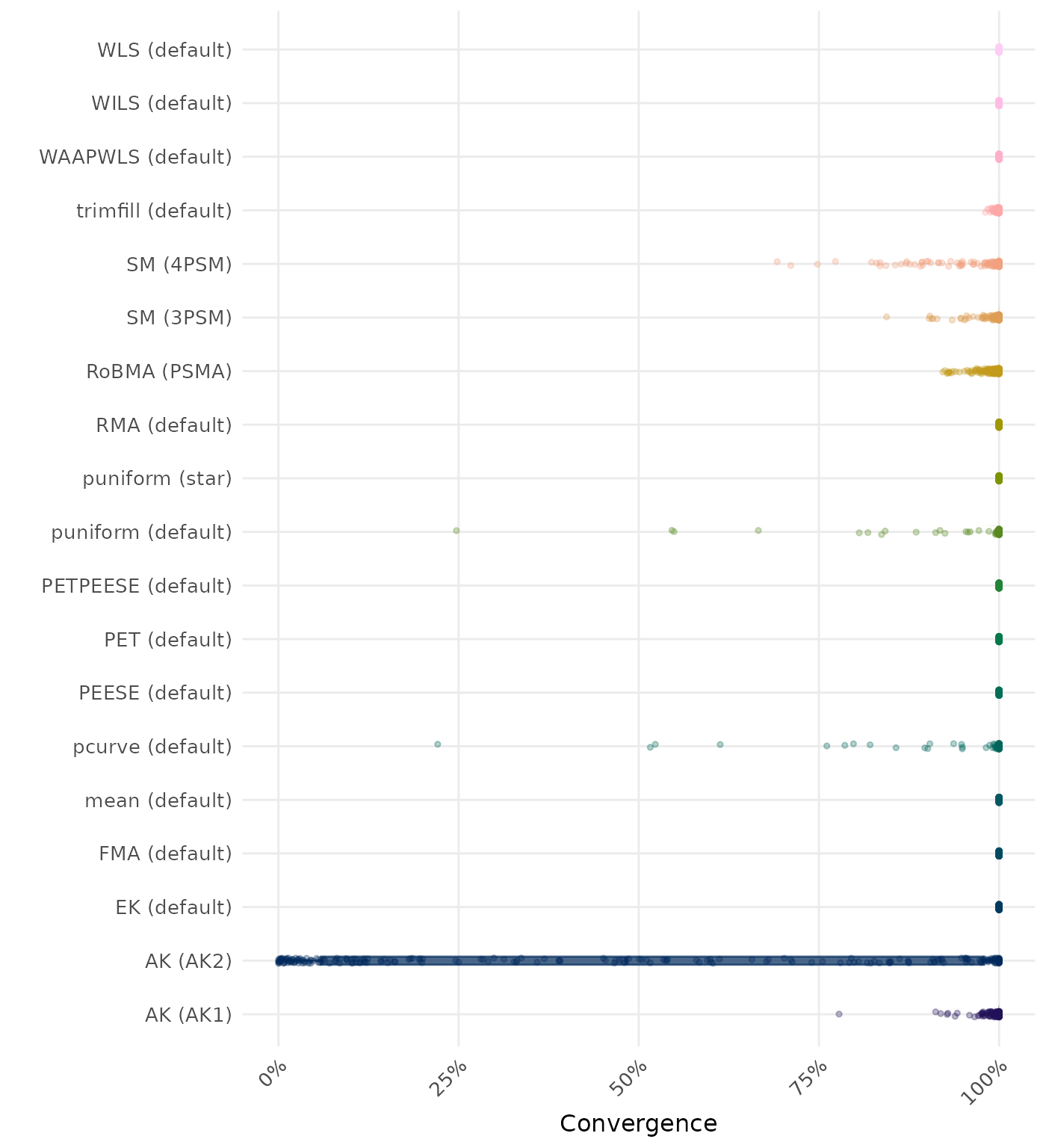

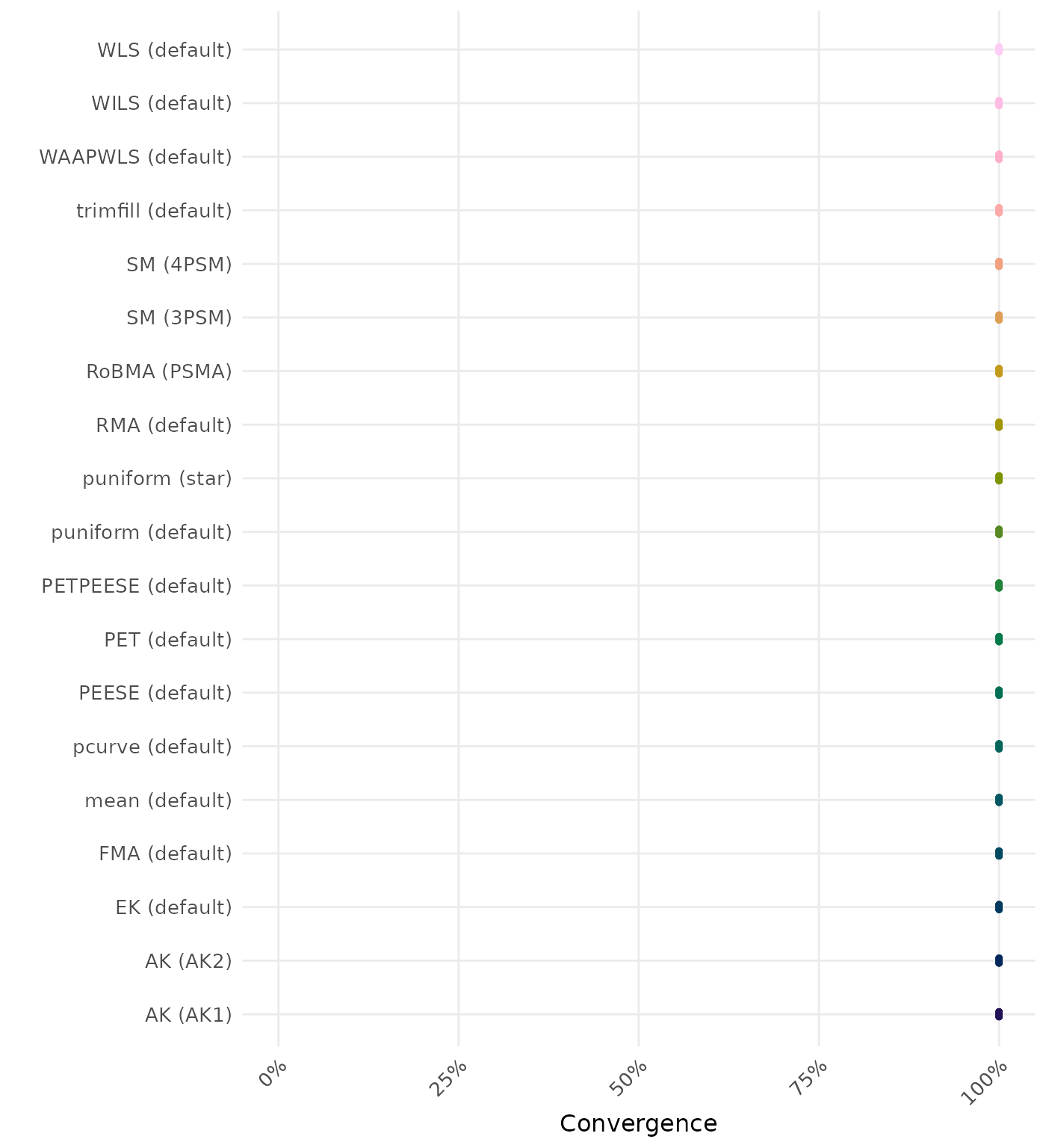

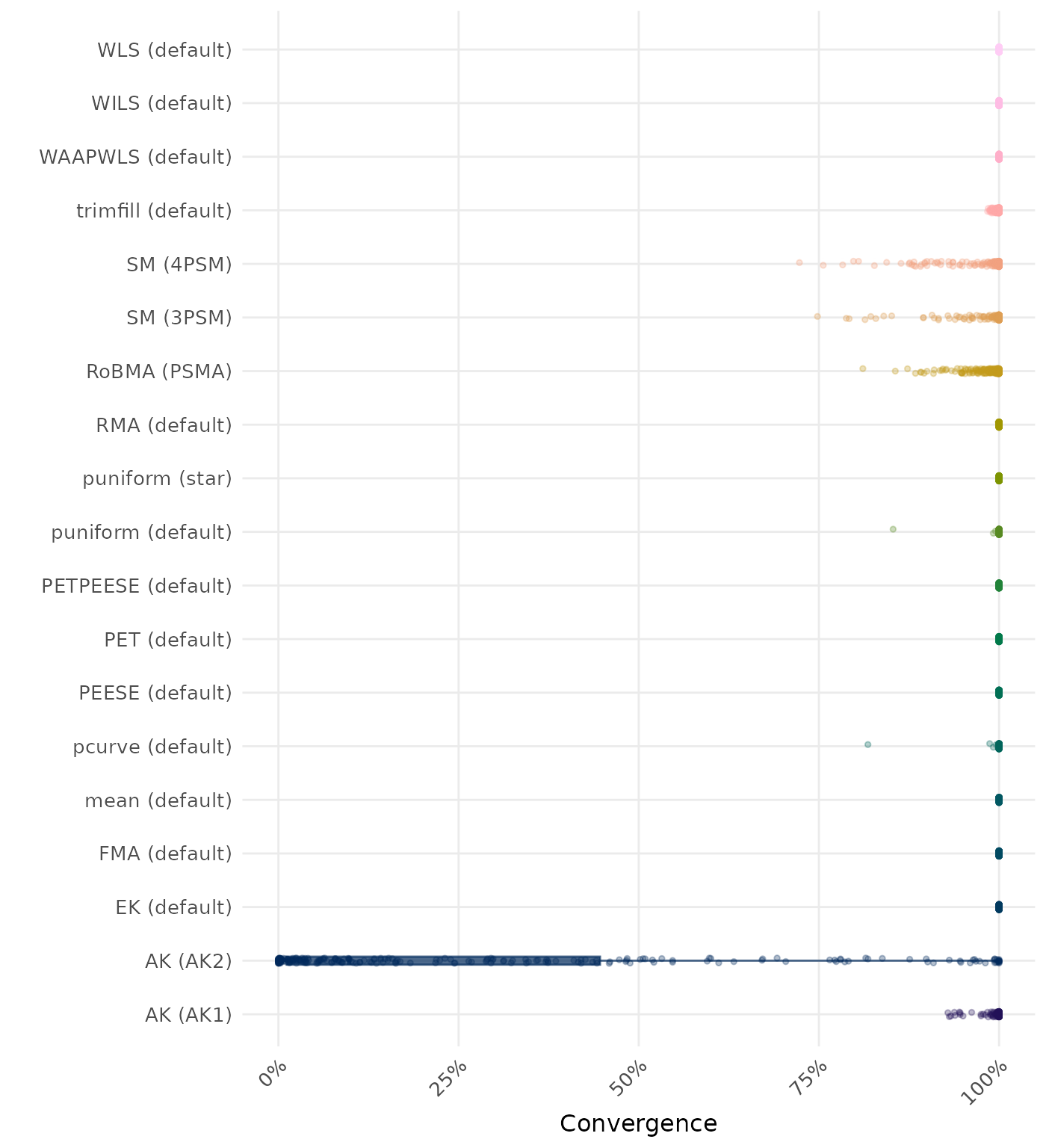

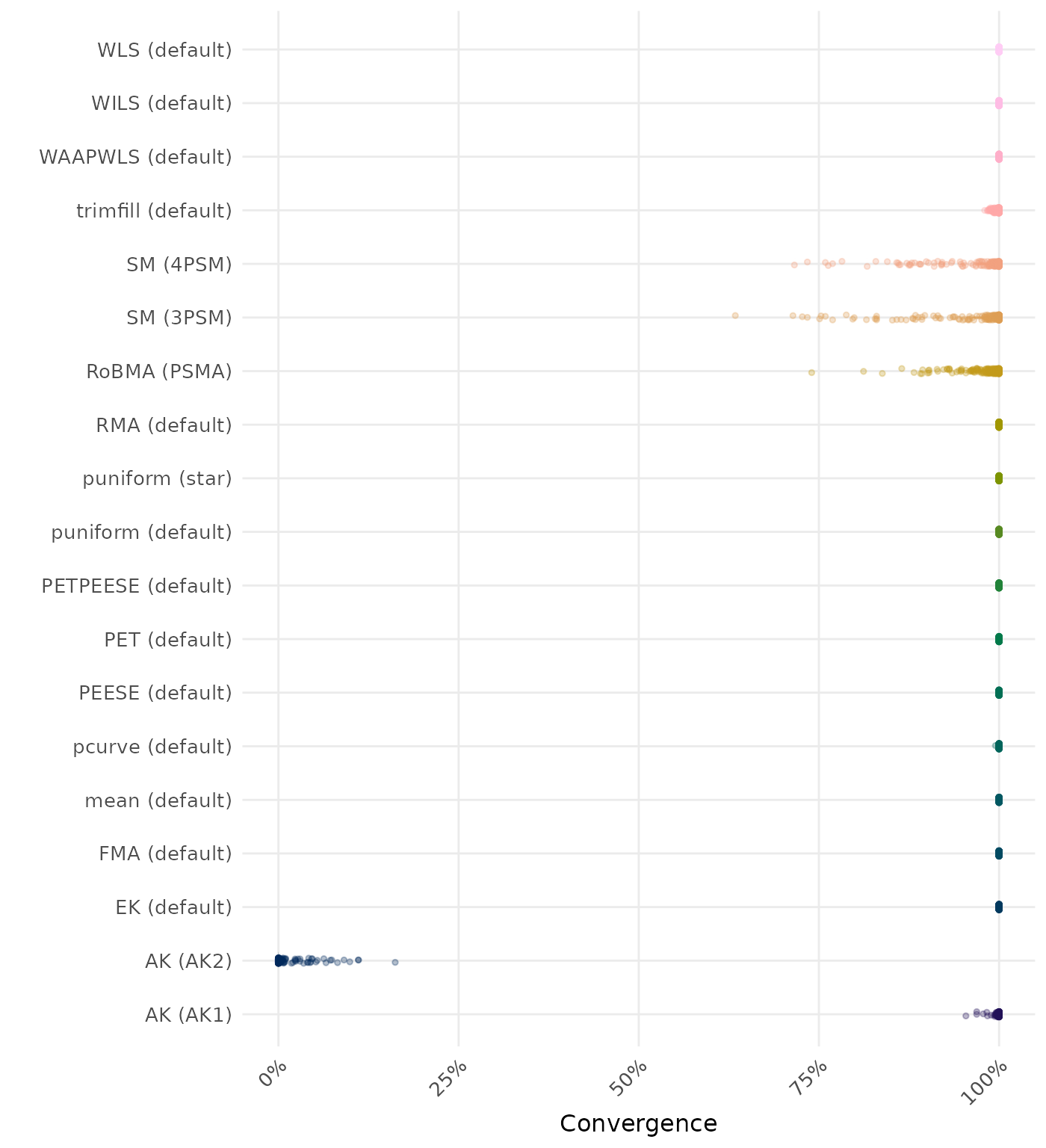

By-Condition Performance (Conditional on Method Convergence)

The results below are conditional on method convergence. Because methods can differ in their convergence rates, they are not necessarily compared on the same data sets.

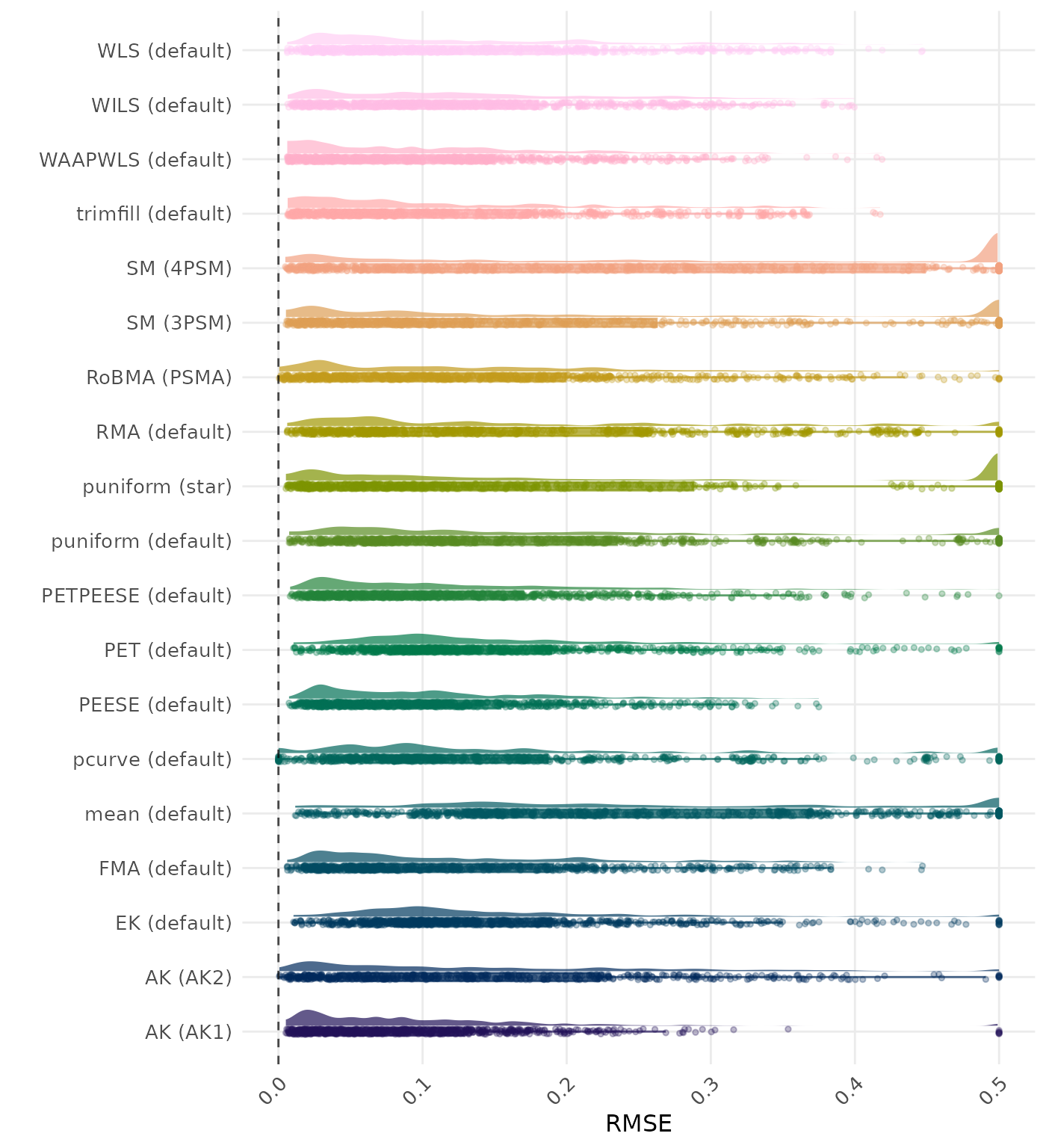

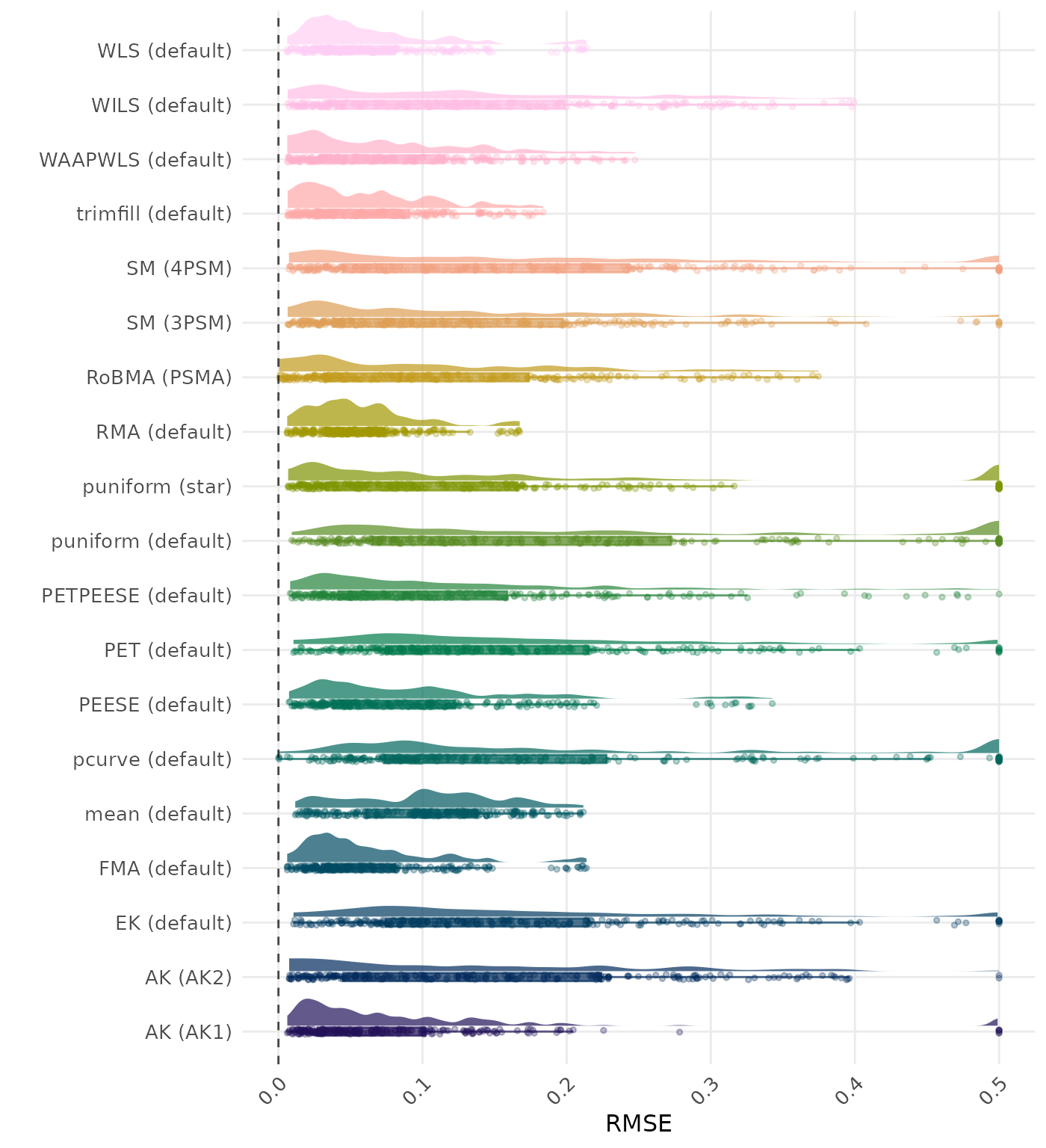

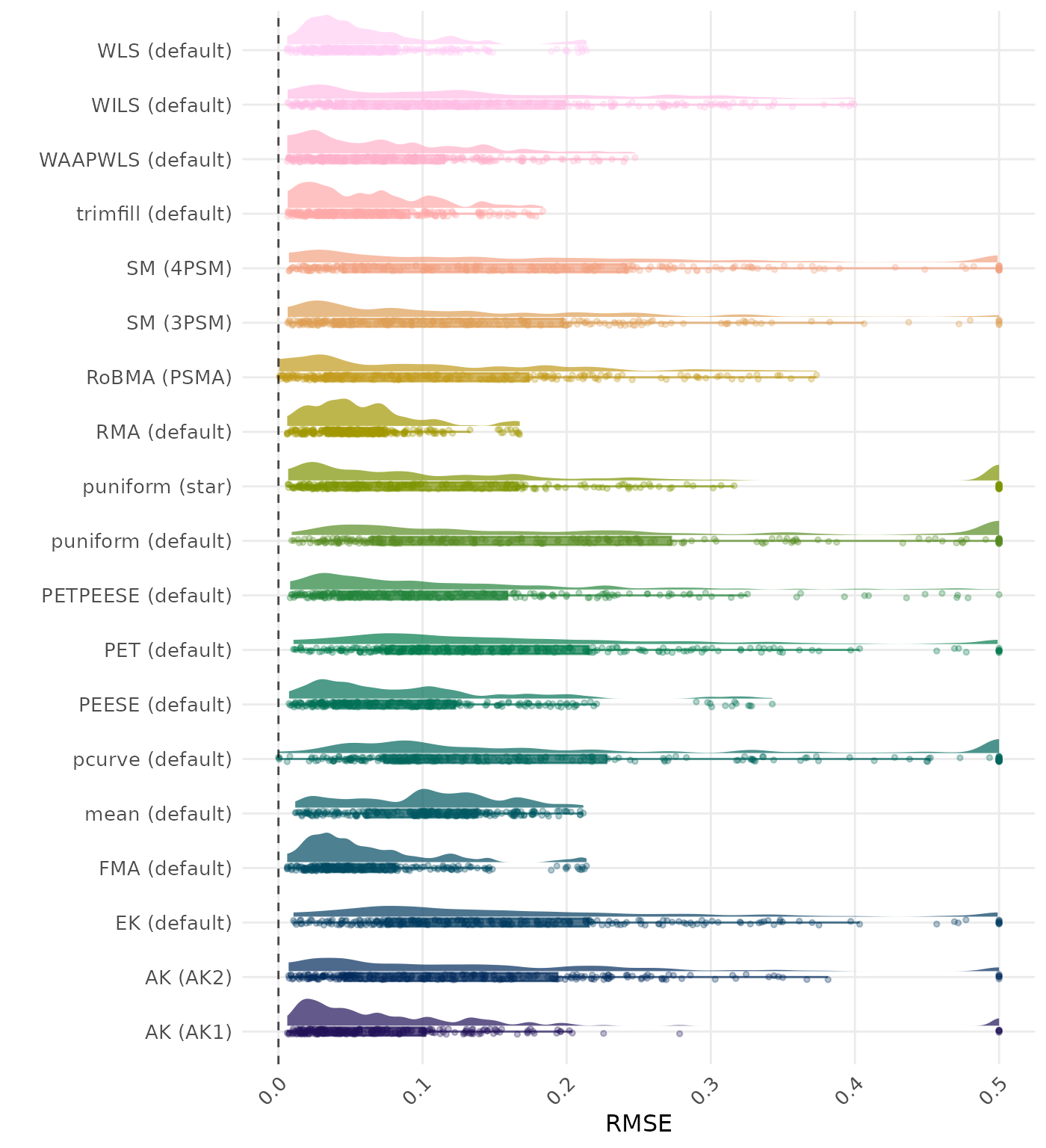

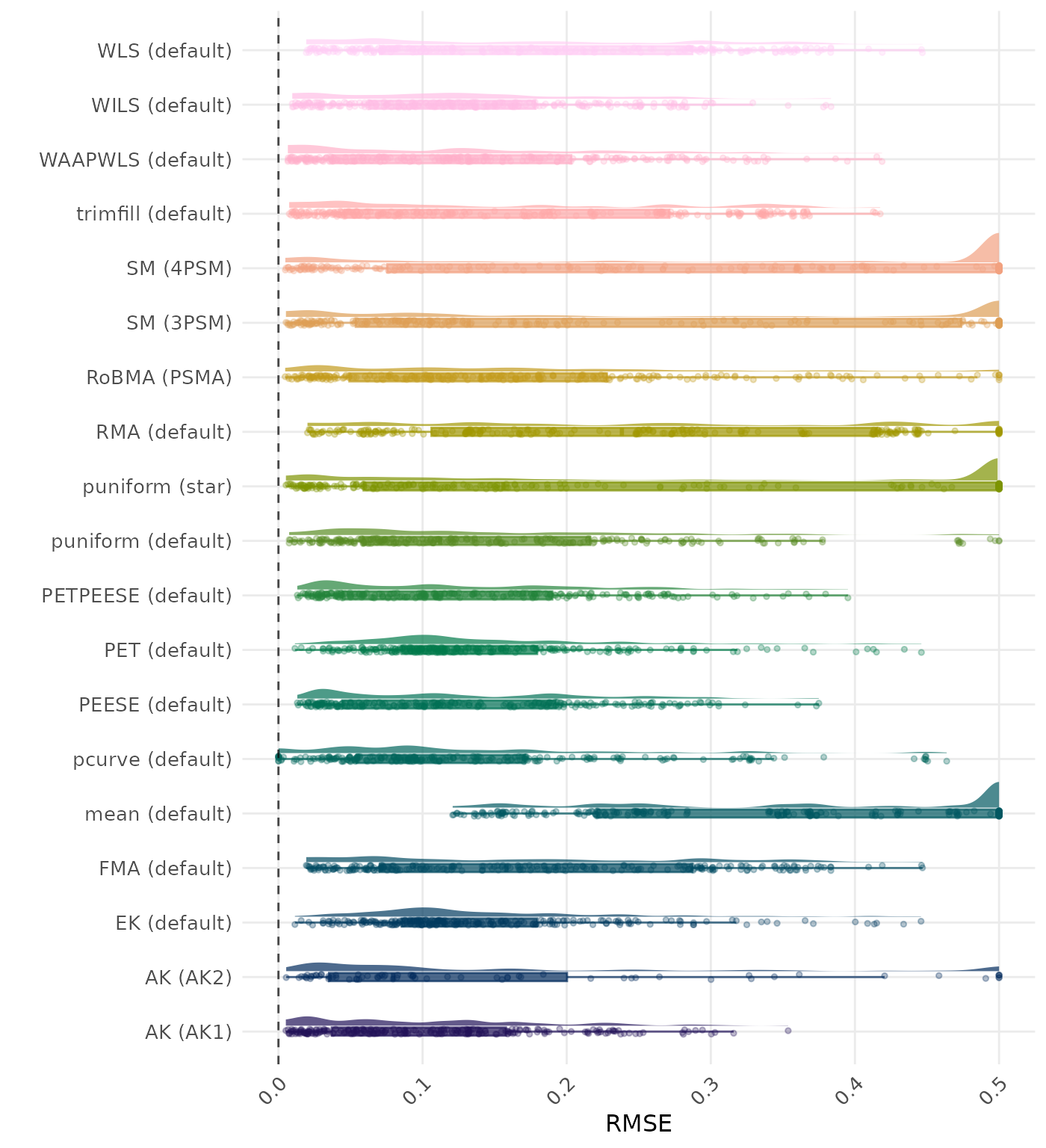

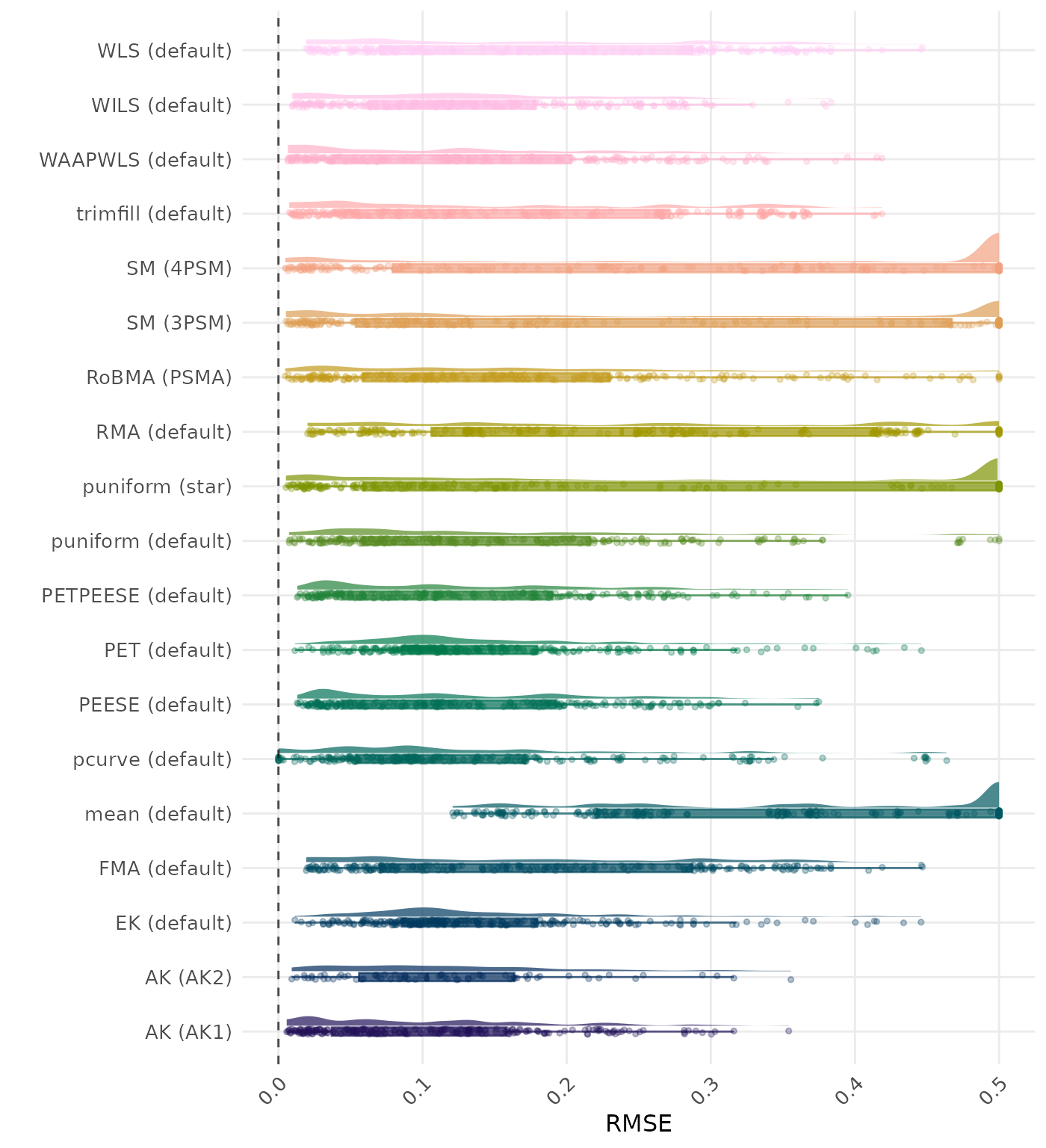

RMSE (Root Mean Square Error) is an overall summary measure of estimation performance that combines bias and empirical SE. RMSE is the square root of the average squared difference between the meta-analytic estimate and the true effect across simulation runs. A lower RMSE indicates a better method. Values larger than 0.5 are visualized as 0.5.

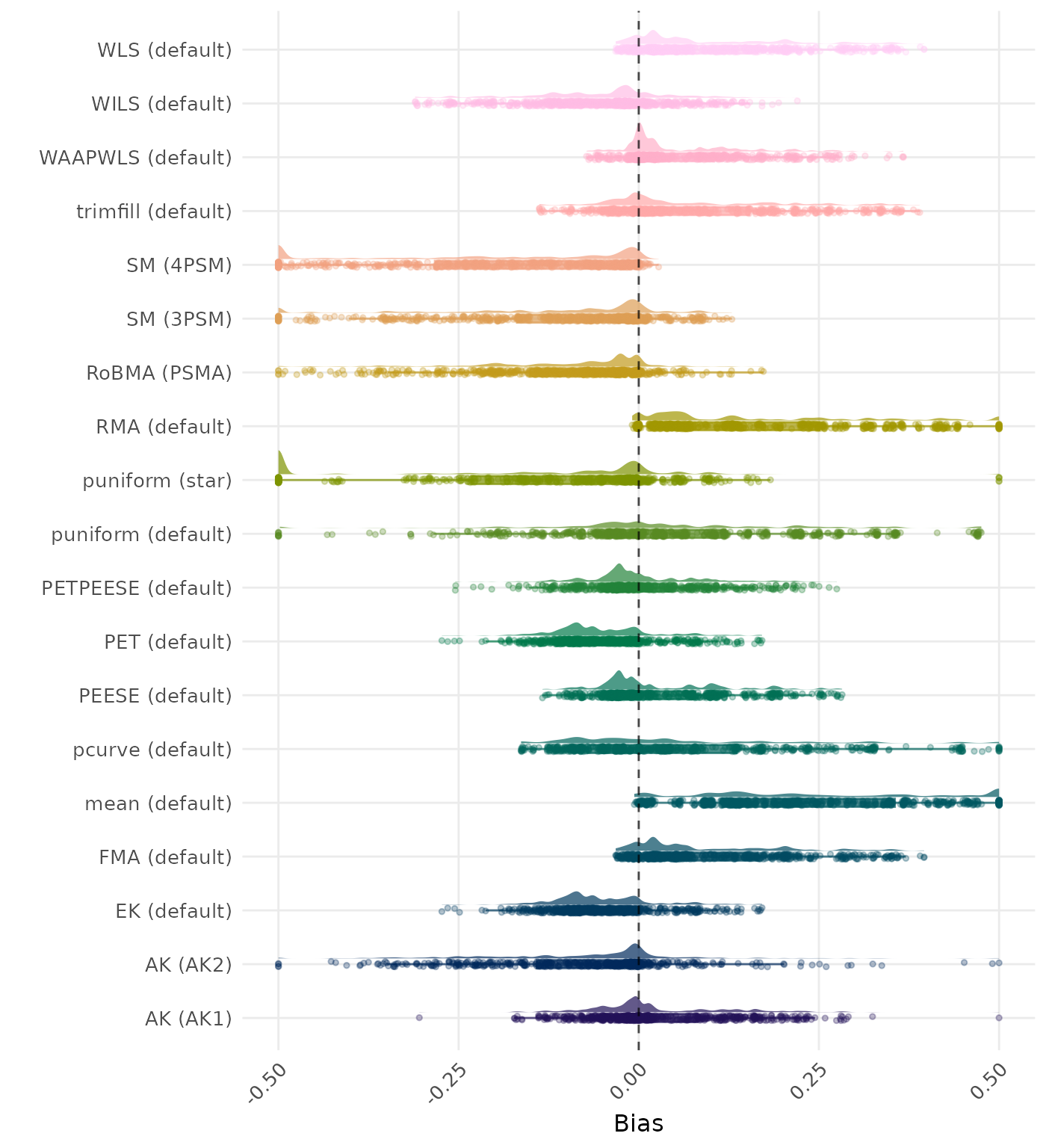

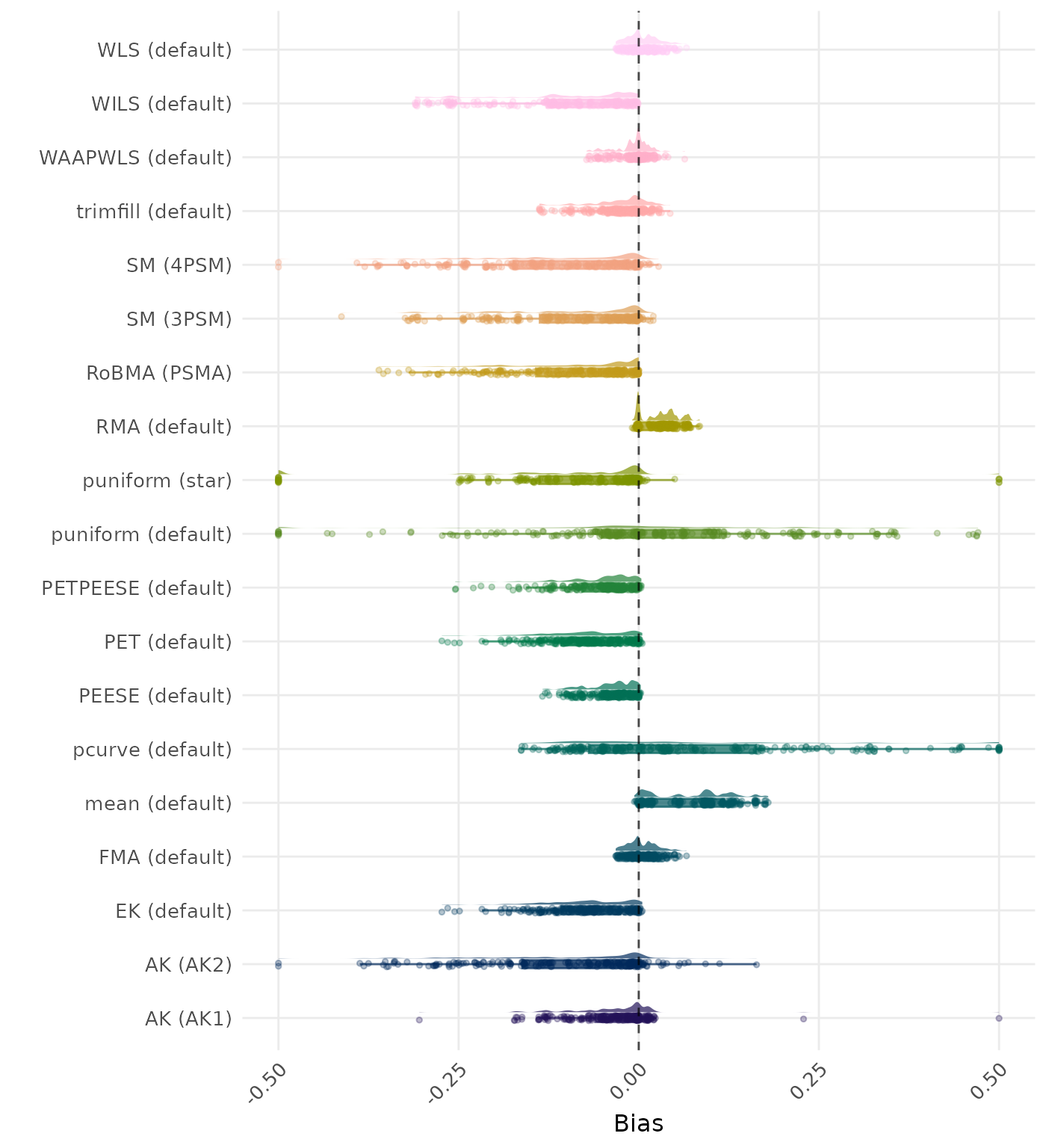

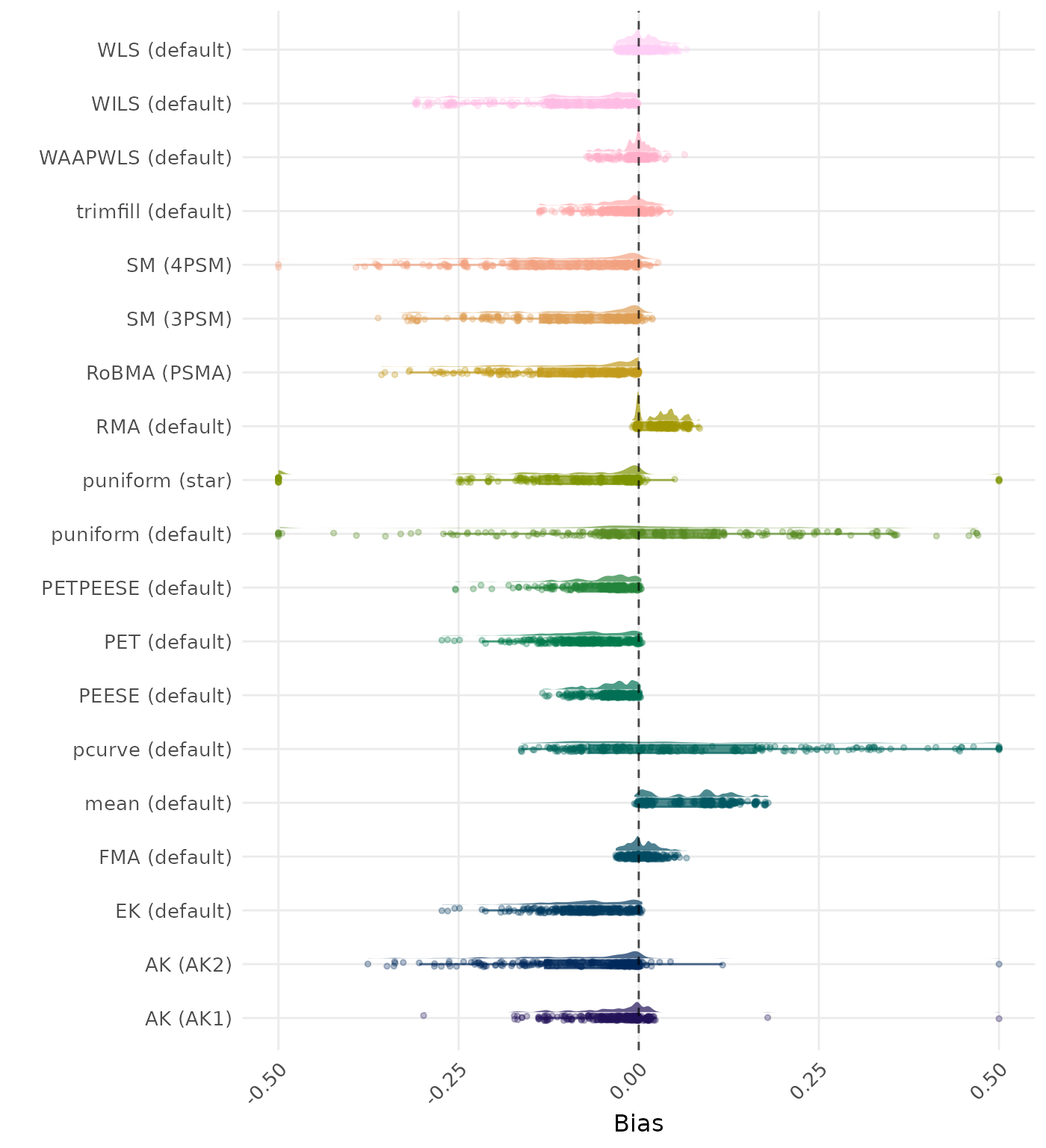

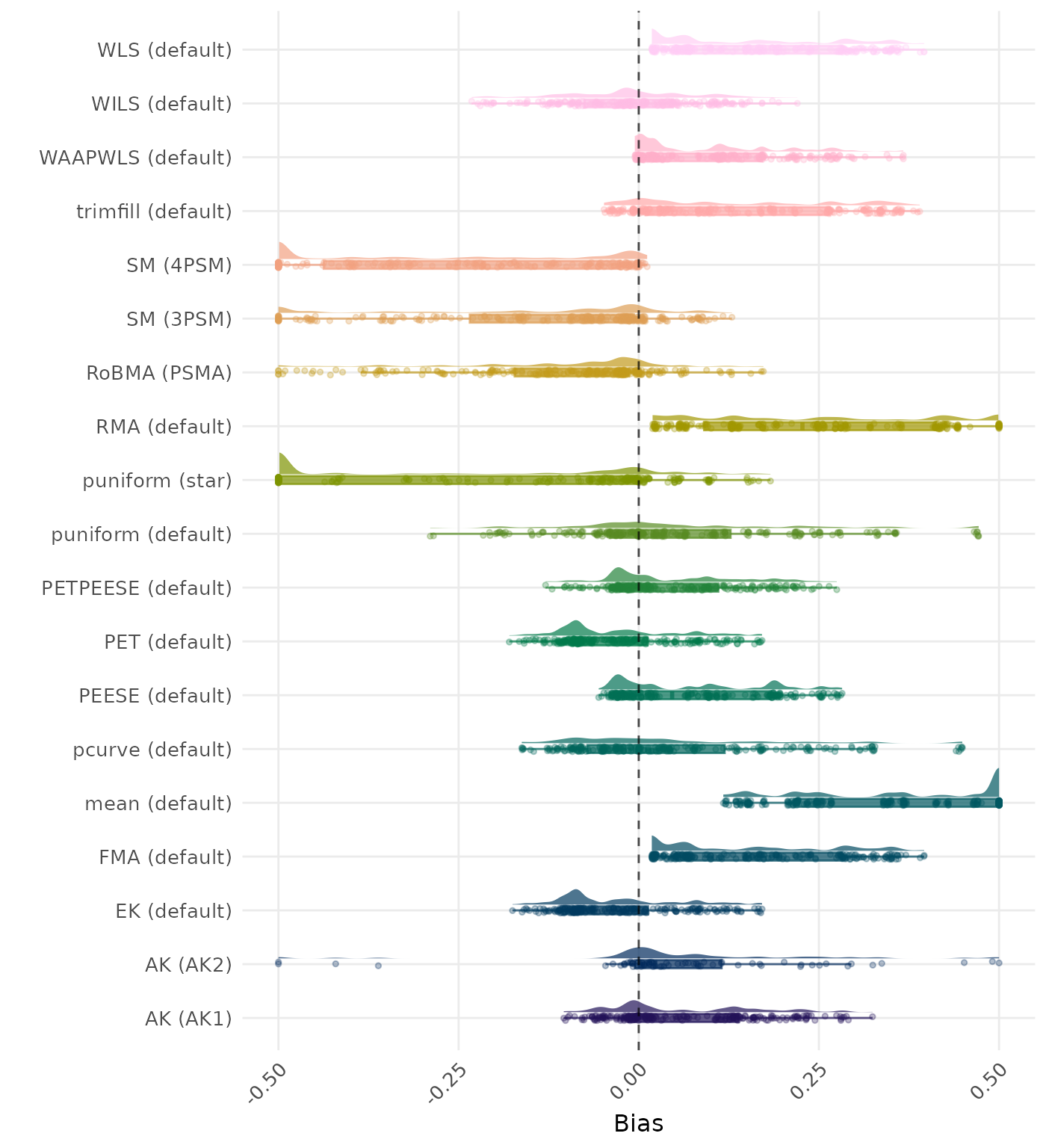

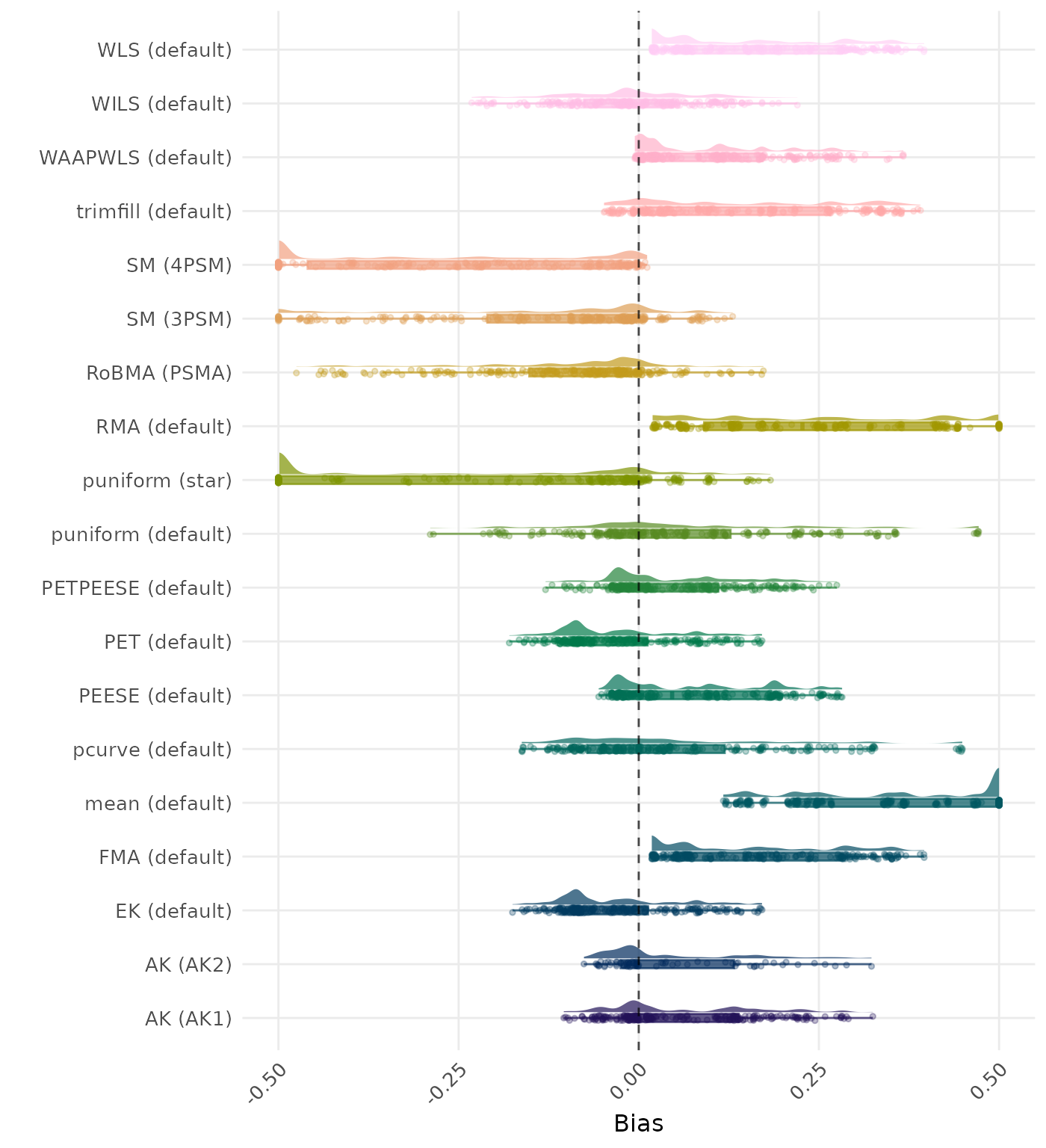

Bias is the average difference between the meta-analytic estimate and the true effect across simulation runs. Ideally, this value should be close to 0. Values lower than -0.5 or larger than 0.5 are visualized as -0.5 and 0.5 respectively.

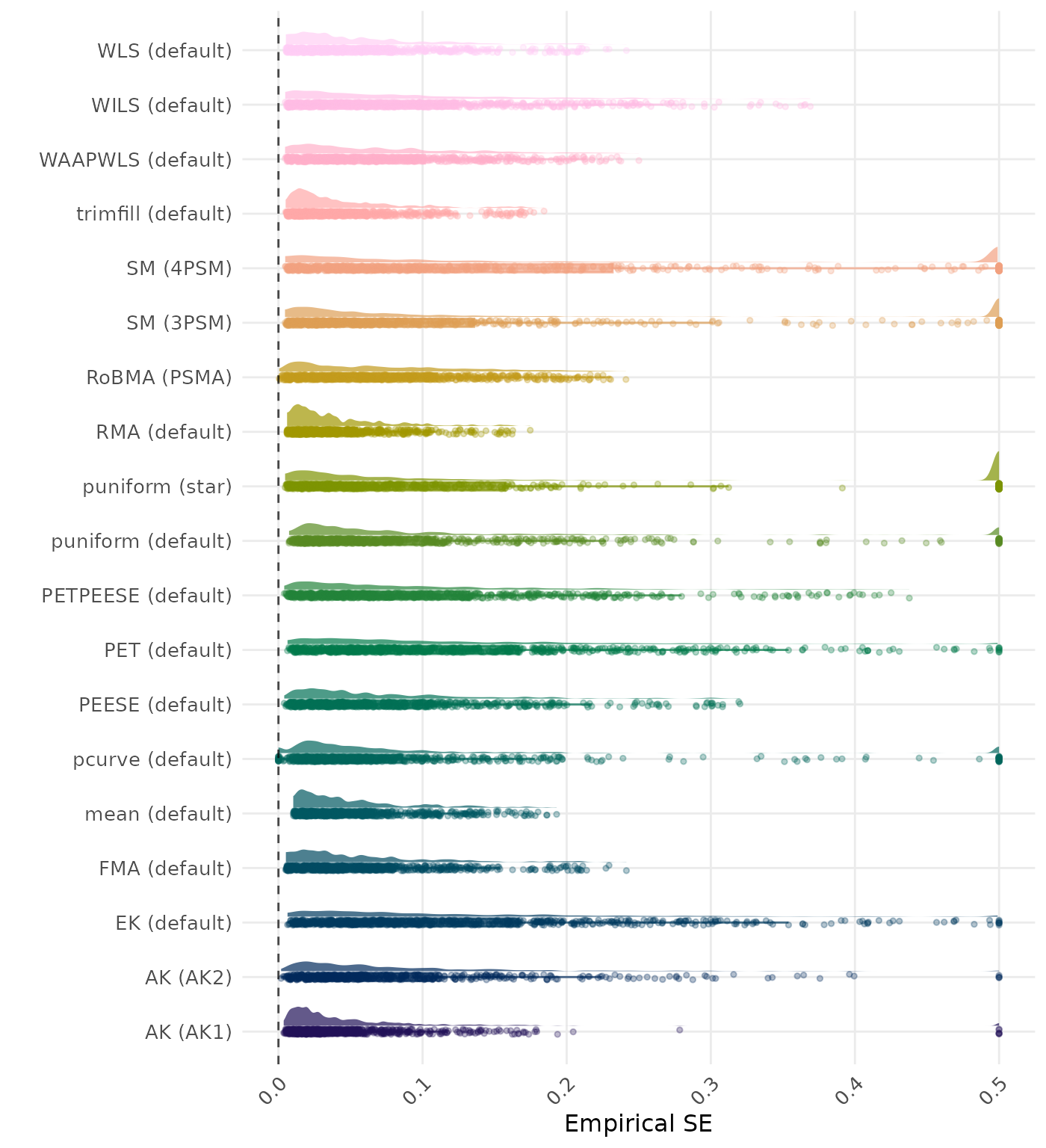

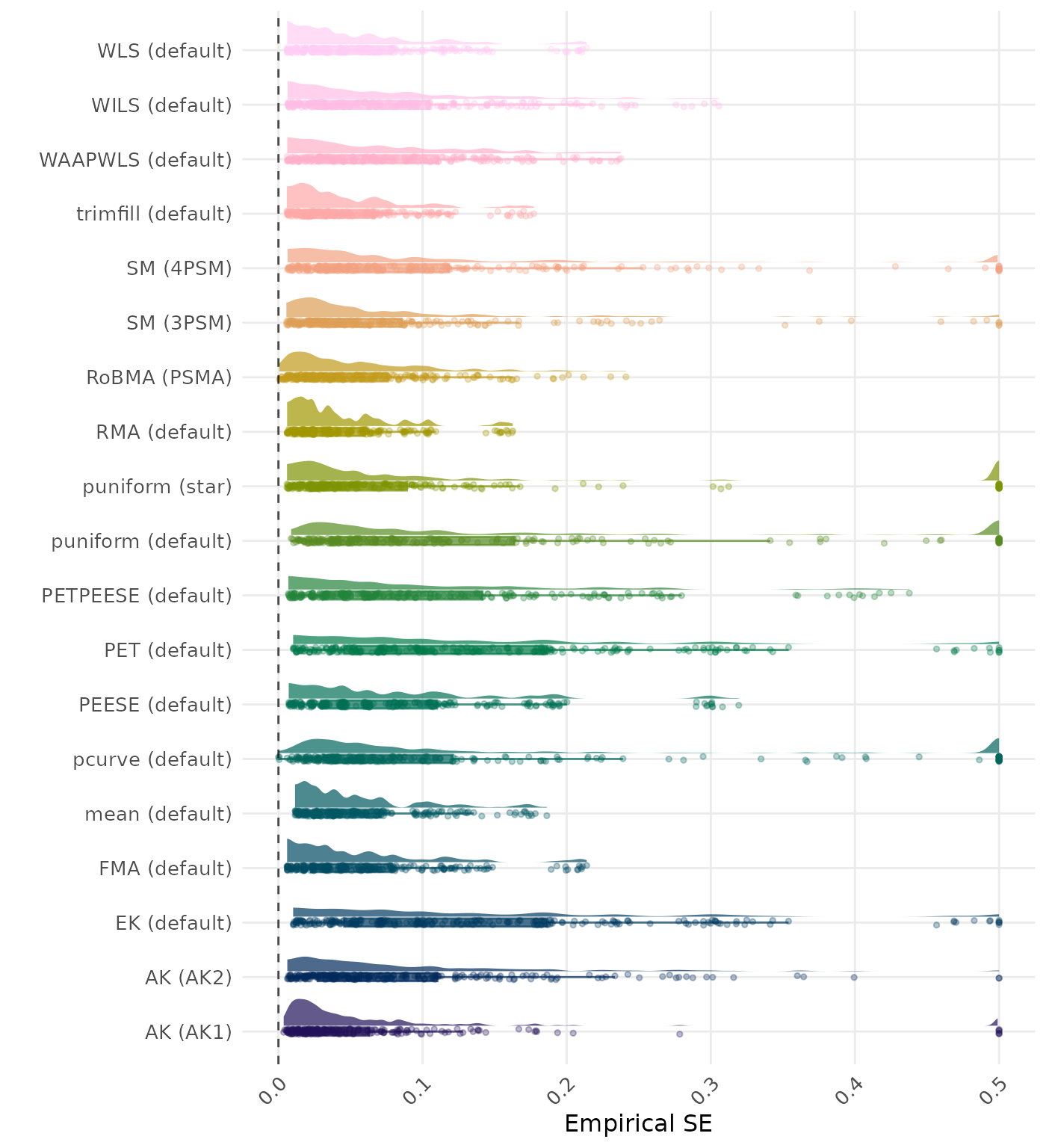

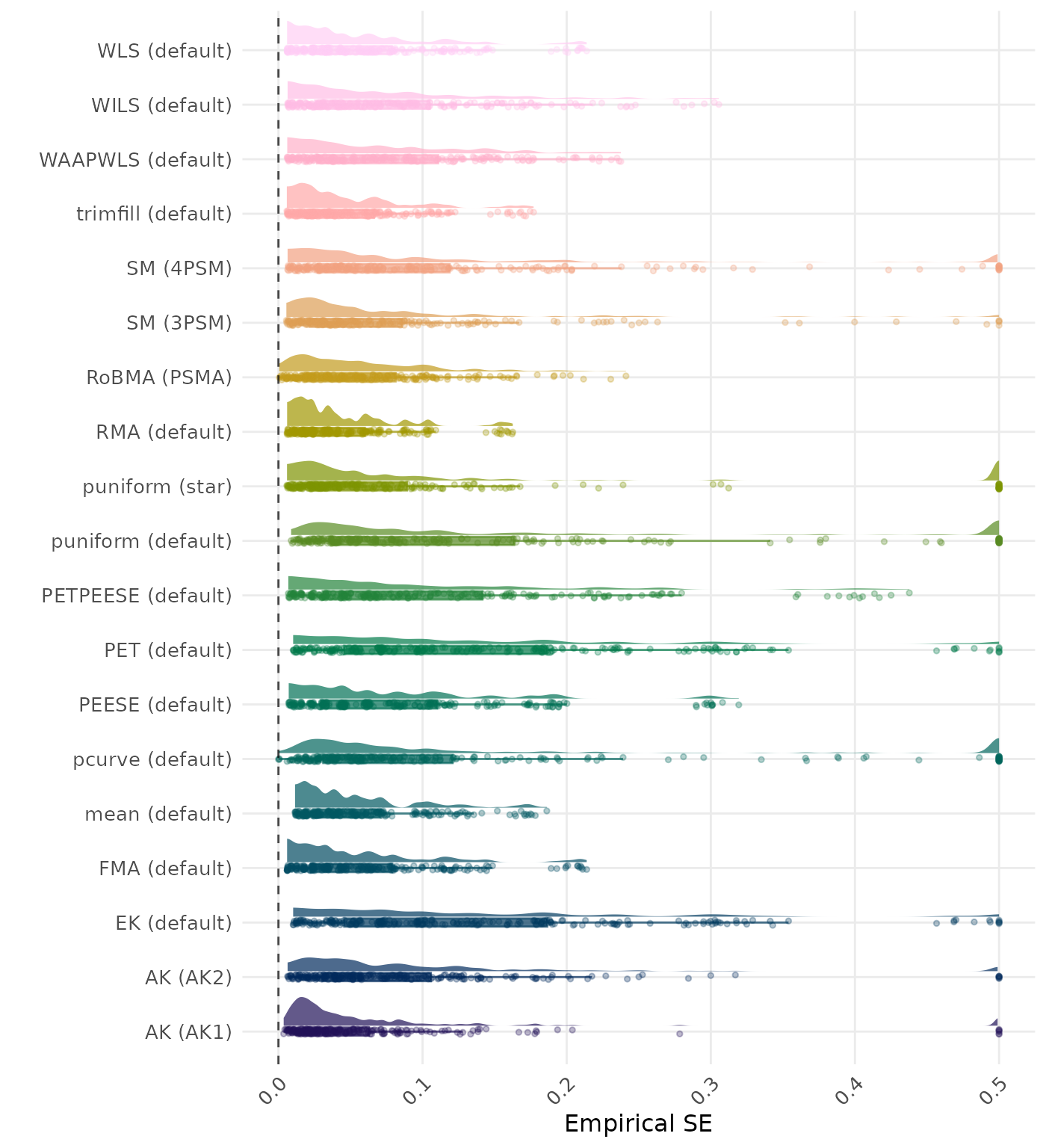

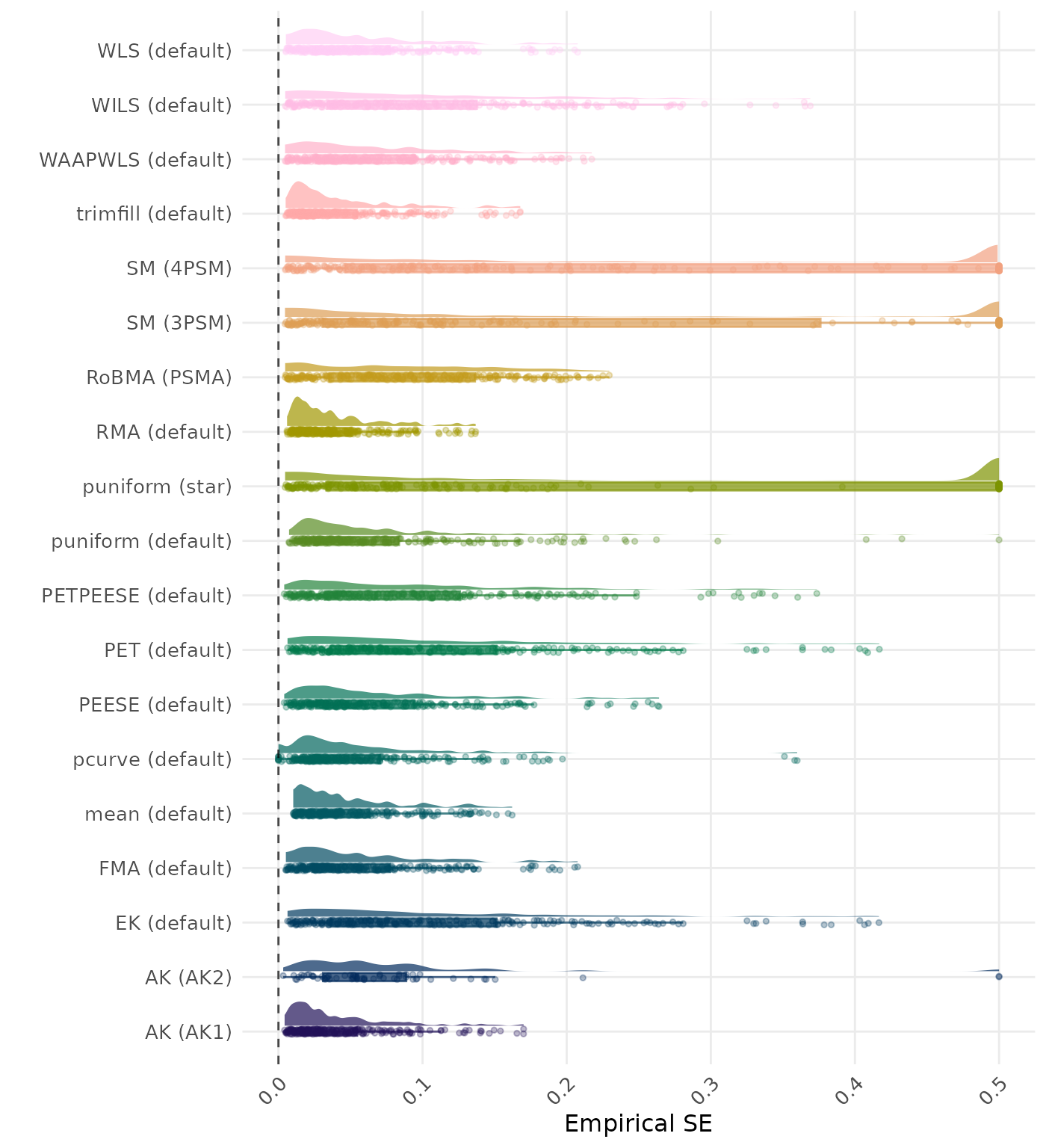

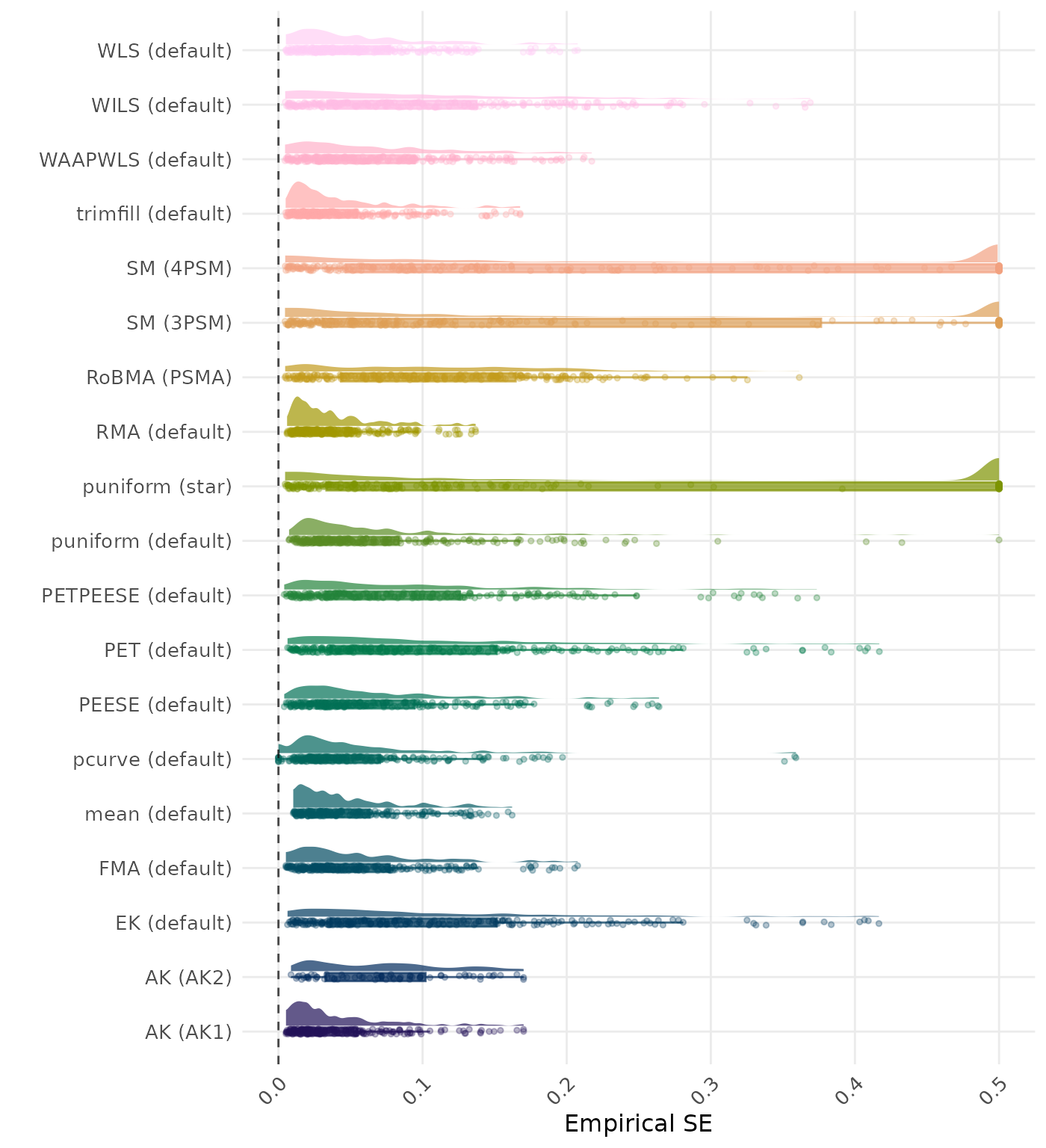

The empirical SE is the standard deviation of the meta-analytic estimate across simulation runs. A lower empirical SE indicates less variability and better method performance. Values larger than 0.5 are visualized as 0.5.

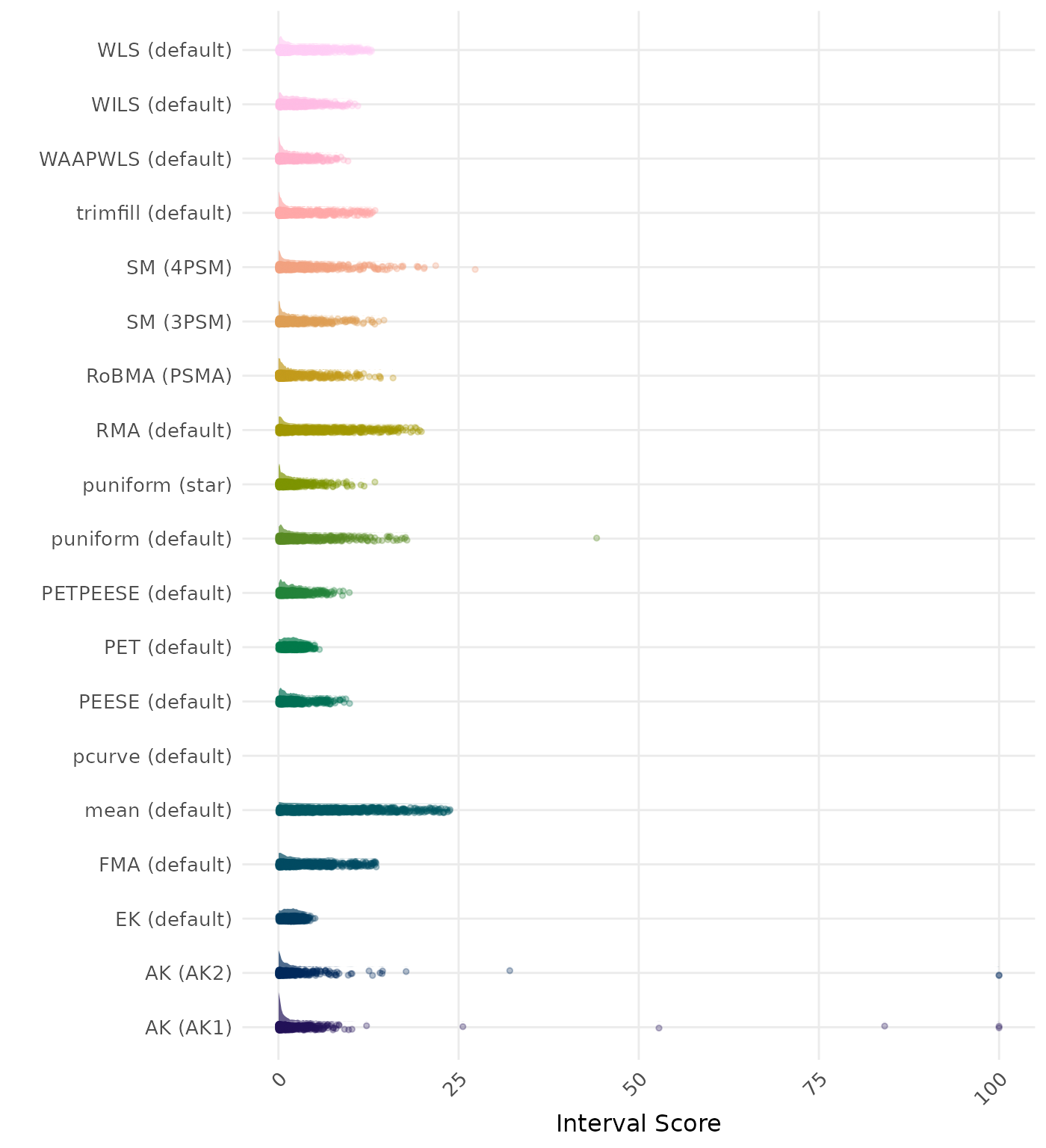

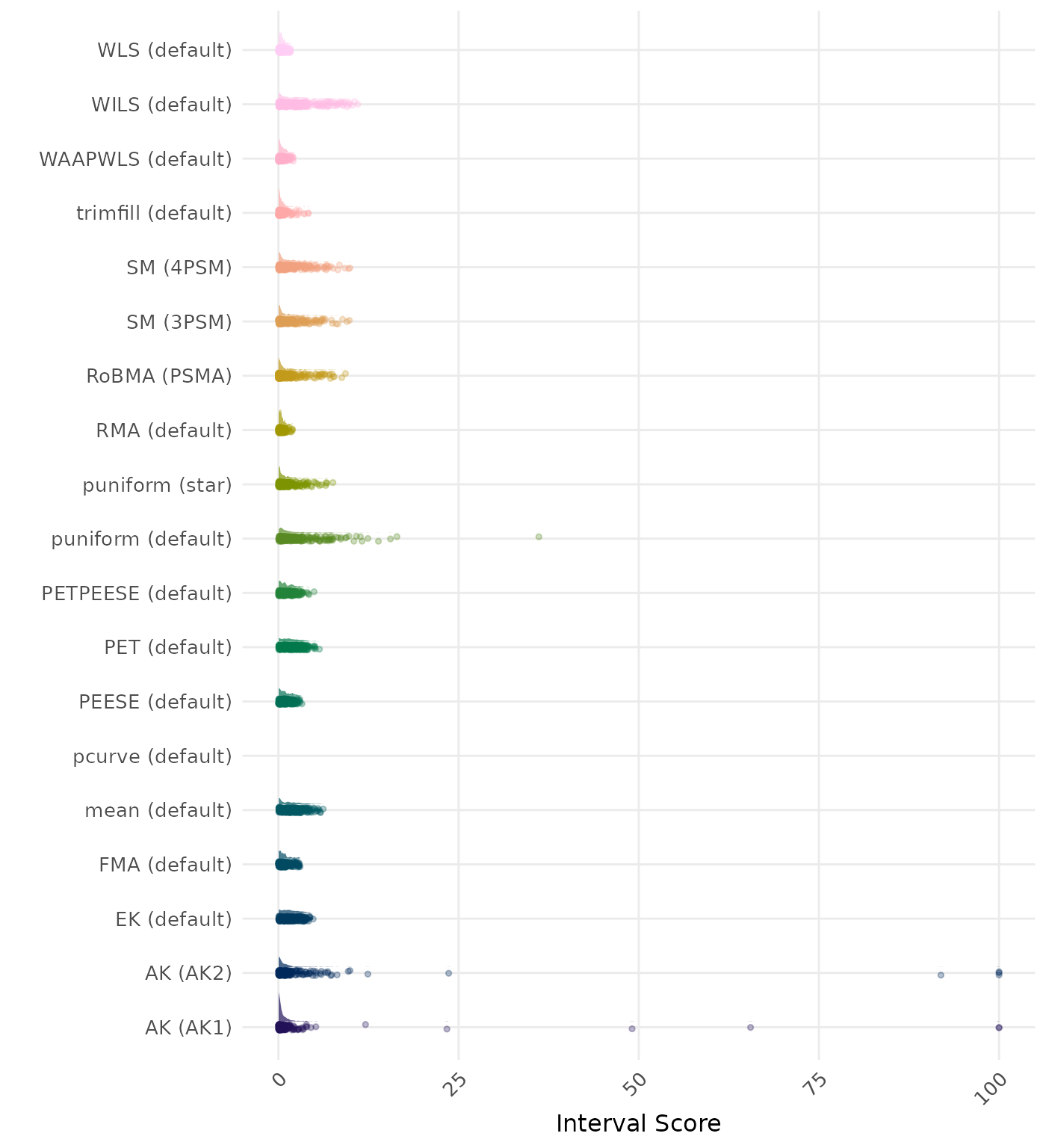

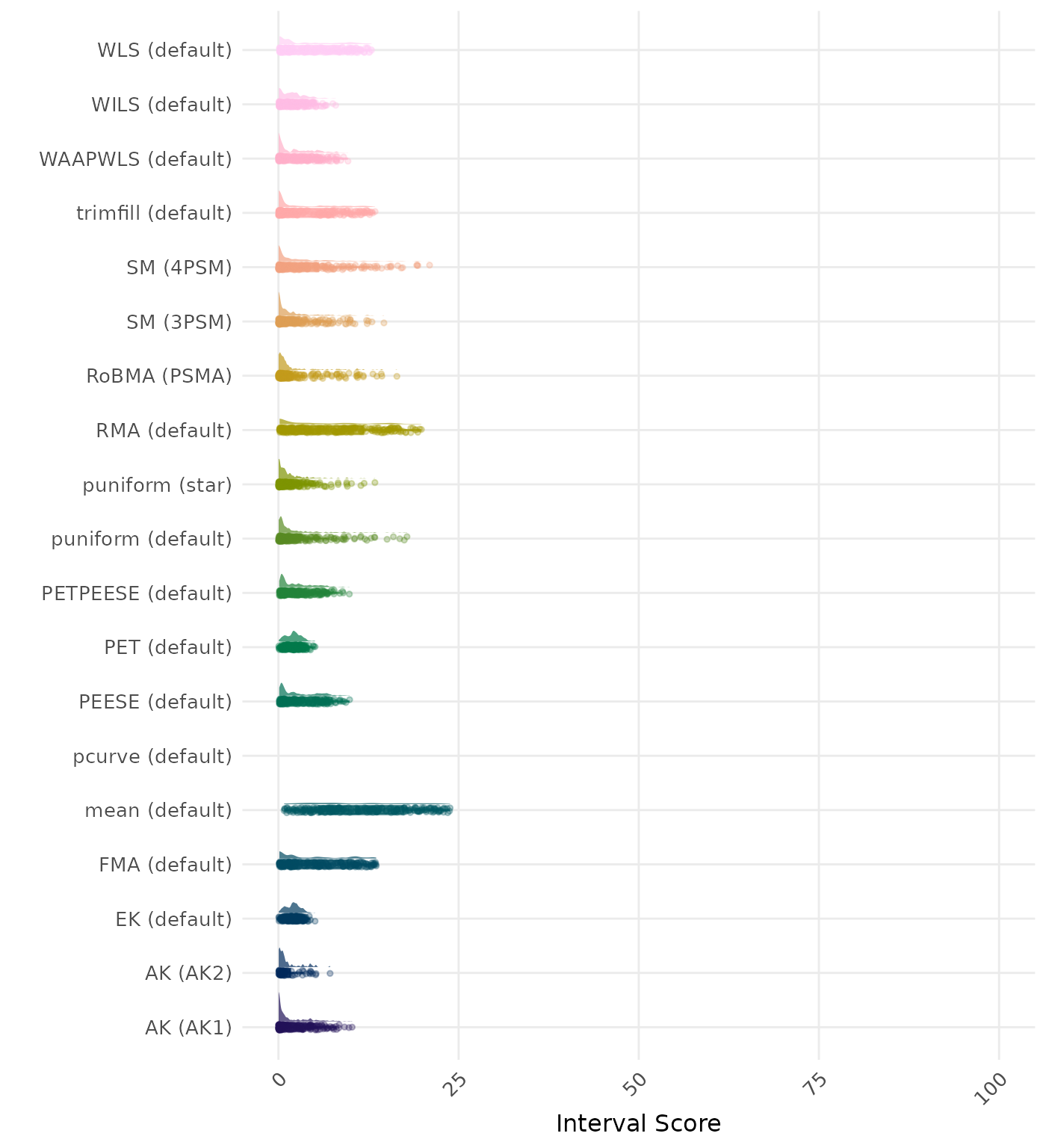

The interval score measures the accuracy of a confidence interval by combining its width and coverage. It penalizes intervals that are too wide or that fail to include the true value. A lower interval score indicates a better method. Values larger than 100 are visualized as 100.

95% CI coverage is the proportion of simulation runs in which the 95% confidence interval contained the true effect. Ideally, this value should be close to the nominal level of 95%.

95% CI width is the average length of the 95% confidence interval for the true effect. A lower average 95% CI length indicates a better method.

The positive likelihood ratio is an overall summary measure of hypothesis testing performance that combines power and type I error rate. It indicates how much a significant test result changes the odds of the alternative hypothesis versus the null hypothesis. A useful method has a positive likelihood ratio greater than 1 (or a log positive likelihood ratio greater than 0). A higher (log) positive likelihood ratio indicates a better method.

The negative likelihood ratio is an overall summary measure of hypothesis testing performance that combines power and type I error rate. It indicates how much a non-significant test result changes the odds of the alternative hypothesis versus the null hypothesis. A useful method has a negative likelihood ratio less than 1 (or a log negative likelihood ratio less than 0). A lower (log) negative likelihood ratio indicates a better method.

The type I error rate is the proportion of simulation runs in which the null hypothesis of no effect was incorrectly rejected when it was true. Ideally, this value should be close to the nominal level of 5%.

The power is the proportion of simulation runs in which the null hypothesis of no effect was correctly rejected when the alternative hypothesis was true. A higher power indicates a better method.

By-Condition Performance (Replacement in Case of Non-Convergence)

The results below incorporate method replacement to handle non-convergence. If a method fails to converge, its results are replaced with the results from a simpler method (e.g., random-effects meta-analysis without publication bias adjustment). This emulates what a data analyst might do in practice when a method does not converge. Note, however, that these results do not reflect “pure” method performance, because they may combine results from several different methods. See Method Replacement Strategy for details of the replacement specification.

RMSE (Root Mean Square Error) is an overall summary measure of estimation performance that combines bias and empirical SE. RMSE is the square root of the average squared difference between the meta-analytic estimate and the true effect across simulation runs. A lower RMSE indicates a better method. Values larger than 0.5 are visualized as 0.5.

Bias is the average difference between the meta-analytic estimate and the true effect across simulation runs. Ideally, this value should be close to 0. Values lower than -0.5 or larger than 0.5 are visualized as -0.5 and 0.5 respectively.

The empirical SE is the standard deviation of the meta-analytic estimate across simulation runs. A lower empirical SE indicates less variability and better method performance. Values larger than 0.5 are visualized as 0.5.

The interval score measures the accuracy of a confidence interval by combining its width and coverage. It penalizes intervals that are too wide or that fail to include the true value. A lower interval score indicates a better method. Values larger than 100 are visualized as 100.

95% CI coverage is the proportion of simulation runs in which the 95% confidence interval contained the true effect. Ideally, this value should be close to the nominal level of 95%.

95% CI width is the average length of the 95% confidence interval for the true effect. A lower average 95% CI length indicates a better method.

The positive likelihood ratio is an overall summary measure of hypothesis testing performance that combines power and type I error rate. It indicates how much a significant test result changes the odds of the alternative hypothesis versus the null hypothesis. A useful method has a positive likelihood ratio greater than 1 (or a log positive likelihood ratio greater than 0). A higher (log) positive likelihood ratio indicates a better method.

The negative likelihood ratio is an overall summary measure of hypothesis testing performance that combines power and type I error rate. It indicates how much a non-significant test result changes the odds of the alternative hypothesis versus the null hypothesis. A useful method has a negative likelihood ratio less than 1 (or a log negative likelihood ratio less than 0). A lower (log) negative likelihood ratio indicates a better method.

The type I error rate is the proportion of simulation runs in which the null hypothesis of no effect was incorrectly rejected when it was true. Ideally, this value should be close to the nominal level of 5%.

The power is the proportion of simulation runs in which the null hypothesis of no effect was correctly rejected when the alternative hypothesis was true. A higher power indicates a better method.

Subset: No Questionable Research Practices

These results are based on the Carter (2019) data-generating mechanism, with a total of 252 conditions.

Average Performance

Method performance measures are aggregated across all simulated conditions to provide an overall impression of method performance. However, keep in mind that a method with a high overall ranking is not necessarily the “best” method for a particular application. To select a suitable method for your application, also consider the non-aggregated performance measures in the conditions most relevant to your application.

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | RMA (default) | 0.057 | 1 | RMA (default) | 0.057 |
| 2 | FMA (default) | 0.063 | 2 | FMA (default) | 0.063 |
| 2 | WLS (default) | 0.063 | 2 | WLS (default) | 0.063 |
| 4 | trimfill (default) | 0.063 | 4 | trimfill (default) | 0.063 |
| 5 | WAAPWLS (default) | 0.077 | 5 | WAAPWLS (default) | 0.077 |
| 6 | PEESE (default) | 0.094 | 6 | PEESE (default) | 0.094 |
| 7 | mean (default) | 0.104 | 7 | mean (default) | 0.104 |
| 8 | RoBMA (PSMA) | 0.109 | 8 | RoBMA (PSMA) | 0.108 |
| 9 | PETPEESE (default) | 0.118 | 9 | PETPEESE (default) | 0.118 |
| 10 | WILS (default) | 0.131 | 10 | WILS (default) | 0.131 |
| 11 | SM (3PSM) | 0.134 | 11 | SM (3PSM) | 0.134 |
| 12 | EK (default) | 0.161 | 12 | EK (default) | 0.161 |
| 13 | PET (default) | 0.161 | 13 | PET (default) | 0.161 |
| 14 | SM (4PSM) | 0.196 | 14 | SM (4PSM) | 0.198 |
| 15 | pcurve (default) | 0.235 | 15 | pcurve (default) | 0.222 |
| 16 | AK (AK2) | 0.246 | 16 | AK (AK1) | 0.289 |
| 17 | AK (AK1) | 0.297 | 17 | AK (AK2) | 0.356 |
| 18 | puniform (default) | 0.952 | 18 | puniform (default) | 0.862 |
| 19 | puniform (star) | 46.988 | 19 | puniform (star) | 46.988 |

RMSE (Root Mean Square Error) is an overall summary measure of estimation performance that combines bias and empirical SE. RMSE is the square root of the average squared difference between the meta-analytic estimate and the true effect across simulation runs. A lower RMSE indicates a better method.

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | FMA (default) | 0.004 | 1 | puniform (default) | -0.001 |
| 1 | WLS (default) | 0.004 | 2 | FMA (default) | 0.004 |
| 3 | WAAPWLS (default) | -0.007 | 2 | WLS (default) | 0.004 |
| 4 | puniform (default) | -0.014 | 4 | WAAPWLS (default) | -0.007 |
| 5 | trimfill (default) | -0.025 | 5 | trimfill (default) | -0.025 |
| 6 | RMA (default) | 0.028 | 6 | RMA (default) | 0.028 |
| 7 | AK (AK1) | -0.031 | 7 | AK (AK1) | -0.032 |
| 8 | PEESE (default) | -0.038 | 8 | PEESE (default) | -0.038 |
| 9 | PETPEESE (default) | -0.052 | 9 | PETPEESE (default) | -0.052 |
| 10 | EK (default) | -0.073 | 10 | pcurve (default) | 0.063 |
| 11 | PET (default) | -0.073 | 11 | EK (default) | -0.073 |
| 12 | pcurve (default) | 0.075 | 12 | PET (default) | -0.073 |
| 13 | mean (default) | 0.075 | 13 | AK (AK2) | -0.074 |
| 14 | SM (3PSM) | -0.085 | 14 | mean (default) | 0.075 |
| 15 | RoBMA (PSMA) | -0.088 | 15 | SM (3PSM) | -0.085 |
| 16 | WILS (default) | -0.096 | 16 | RoBMA (PSMA) | -0.087 |
| 17 | AK (AK2) | -0.101 | 17 | WILS (default) | -0.096 |
| 18 | SM (4PSM) | -0.106 | 18 | SM (4PSM) | -0.106 |
| 19 | puniform (star) | -4.095 | 19 | puniform (star) | -4.095 |

Bias is the average difference between the meta-analytic estimate and the true effect across simulation runs. Ideally, this value should be close to 0.

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | RMA (default) | 0.043 | 1 | RMA (default) | 0.043 |
| 2 | trimfill (default) | 0.048 | 2 | trimfill (default) | 0.048 |
| 3 | RoBMA (PSMA) | 0.054 | 3 | mean (default) | 0.055 |
| 4 | mean (default) | 0.055 | 4 | RoBMA (PSMA) | 0.057 |
| 5 | FMA (default) | 0.058 | 5 | FMA (default) | 0.058 |
| 5 | WLS (default) | 0.058 | 5 | WLS (default) | 0.058 |
| 7 | WAAPWLS (default) | 0.074 | 7 | WAAPWLS (default) | 0.074 |
| 8 | WILS (default) | 0.076 | 8 | WILS (default) | 0.076 |
| 9 | SM (3PSM) | 0.081 | 9 | SM (3PSM) | 0.080 |
| 10 | PEESE (default) | 0.082 | 10 | PEESE (default) | 0.082 |
| 11 | PETPEESE (default) | 0.101 | 11 | PETPEESE (default) | 0.101 |
| 12 | EK (default) | 0.135 | 12 | EK (default) | 0.135 |
| 13 | PET (default) | 0.135 | 13 | PET (default) | 0.135 |
| 14 | SM (4PSM) | 0.136 | 14 | SM (4PSM) | 0.137 |
| 15 | pcurve (default) | 0.164 | 15 | pcurve (default) | 0.156 |
| 16 | AK (AK2) | 0.187 | 16 | AK (AK1) | 0.268 |
| 17 | AK (AK1) | 0.275 | 17 | AK (AK2) | 0.310 |
| 18 | puniform (default) | 0.888 | 18 | puniform (default) | 0.800 |
| 19 | puniform (star) | 46.707 | 19 | puniform (star) | 46.707 |

The empirical SE is the standard deviation of the meta-analytic estimate across simulation runs. A lower empirical SE indicates less variability and better method performance.

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | RMA (default) | 0.419 | 1 | RMA (default) | 0.419 |
| 2 | WLS (default) | 0.511 | 2 | WLS (default) | 0.511 |
| 3 | trimfill (default) | 0.533 | 3 | trimfill (default) | 0.533 |
| 4 | WAAPWLS (default) | 0.539 | 4 | WAAPWLS (default) | 0.539 |
| 5 | FMA (default) | 0.863 | 5 | FMA (default) | 0.863 |
| 6 | PEESE (default) | 0.977 | 6 | PEESE (default) | 0.977 |
| 7 | puniform (star) | 1.130 | 7 | puniform (star) | 1.130 |
| 8 | PETPEESE (default) | 1.153 | 8 | PETPEESE (default) | 1.153 |
| 9 | RoBMA (PSMA) | 1.385 | 9 | RoBMA (PSMA) | 1.363 |
| 10 | SM (3PSM) | 1.563 | 10 | SM (3PSM) | 1.559 |
| 11 | EK (default) | 1.711 | 11 | EK (default) | 1.711 |
| 12 | mean (default) | 1.735 | 12 | SM (4PSM) | 1.717 |
| 13 | SM (4PSM) | 1.762 | 13 | mean (default) | 1.735 |
| 14 | PET (default) | 1.784 | 14 | PET (default) | 1.784 |
| 15 | WILS (default) | 2.636 | 15 | puniform (default) | 2.538 |
| 16 | puniform (default) | 2.690 | 16 | WILS (default) | 2.636 |
| 17 | AK (AK1) | 5.500 | 17 | AK (AK1) | 5.273 |
| 18 | AK (AK2) | 5.978 | 18 | AK (AK2) | 6.407 |
| 19 | pcurve (default) | NaN | 19 | pcurve (default) | NaN |

The interval score measures the accuracy of a confidence interval by combining its width and coverage. It penalizes intervals that are too wide or that fail to include the true value. A lower interval score indicates a better method.

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | WAAPWLS (default) | 0.798 | 1 | WAAPWLS (default) | 0.798 |
| 2 | trimfill (default) | 0.749 | 2 | trimfill (default) | 0.748 |
| 3 | RMA (default) | 0.736 | 3 | RMA (default) | 0.736 |
| 4 | AK (AK1) | 0.711 | 4 | AK (AK1) | 0.711 |
| 5 | WLS (default) | 0.698 | 5 | WLS (default) | 0.698 |
| 6 | puniform (star) | 0.656 | 6 | AK (AK2) | 0.659 |
| 7 | AK (AK2) | 0.653 | 7 | puniform (star) | 0.656 |
| 8 | RoBMA (PSMA) | 0.639 | 8 | RoBMA (PSMA) | 0.640 |
| 9 | SM (4PSM) | 0.619 | 9 | SM (4PSM) | 0.619 |
| 10 | PEESE (default) | 0.618 | 10 | PEESE (default) | 0.618 |
| 11 | PETPEESE (default) | 0.615 | 11 | PETPEESE (default) | 0.615 |
| 12 | puniform (default) | 0.606 | 12 | puniform (default) | 0.607 |
| 13 | SM (3PSM) | 0.595 | 13 | SM (3PSM) | 0.595 |
| 14 | EK (default) | 0.568 | 14 | EK (default) | 0.568 |
| 15 | PET (default) | 0.554 | 15 | PET (default) | 0.554 |
| 16 | FMA (default) | 0.530 | 16 | FMA (default) | 0.530 |
| 17 | mean (default) | 0.437 | 17 | mean (default) | 0.437 |
| 18 | WILS (default) | 0.402 | 18 | WILS (default) | 0.402 |
| 19 | pcurve (default) | NaN | 19 | pcurve (default) | NaN |

95% CI coverage is the proportion of simulation runs in which the 95% confidence interval contained the true effect. Ideally, this value should be close to the nominal level of 95%.

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | FMA (default) | 0.096 | 1 | FMA (default) | 0.096 |
| 2 | WILS (default) | 0.138 | 2 | WILS (default) | 0.138 |
| 3 | WLS (default) | 0.143 | 3 | WLS (default) | 0.143 |
| 4 | mean (default) | 0.149 | 4 | mean (default) | 0.149 |
| 5 | trimfill (default) | 0.175 | 5 | trimfill (default) | 0.175 |
| 6 | RMA (default) | 0.177 | 6 | RMA (default) | 0.177 |
| 7 | PEESE (default) | 0.184 | 7 | PEESE (default) | 0.184 |
| 8 | WAAPWLS (default) | 0.206 | 8 | WAAPWLS (default) | 0.206 |
| 9 | RoBMA (PSMA) | 0.211 | 9 | RoBMA (PSMA) | 0.210 |
| 10 | PETPEESE (default) | 0.237 | 10 | PETPEESE (default) | 0.237 |
| 11 | SM (3PSM) | 0.268 | 11 | SM (3PSM) | 0.264 |
| 12 | puniform (star) | 0.270 | 12 | puniform (star) | 0.270 |
| 13 | PET (default) | 0.296 | 13 | PET (default) | 0.296 |
| 14 | EK (default) | 0.322 | 14 | EK (default) | 0.322 |
| 15 | SM (4PSM) | 0.455 | 15 | SM (4PSM) | 0.410 |
| 16 | puniform (default) | 0.976 | 16 | puniform (default) | 0.845 |
| 17 | AK (AK2) | 4.827 | 17 | AK (AK1) | 4.810 |
| 18 | AK (AK1) | 5.038 | 18 | AK (AK2) | 5.426 |
| 19 | pcurve (default) | NaN | 19 | pcurve (default) | NaN |

95% CI width is the average length of the 95% confidence interval for the true effect. A narrower average 95% CI indicates a better method, provided that coverage stays close to the nominal level.
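
The sketch below shows how width relates to the standard error. It builds Wald-type intervals (estimate ± z·SE) and averages their width. Wald intervals are an assumption made only for illustration, because the compared methods may construct their intervals differently. The function names are hypothetical.

```python
from statistics import NormalDist

import numpy as np


def wald_interval(estimate, se, level=0.95):
    """Wald-type interval estimate +/- z * SE (an illustrative assumption)."""
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    estimate = np.asarray(estimate, dtype=float)
    se = np.asarray(se, dtype=float)
    return estimate - z * se, estimate + z * se


def mean_ci_width(lower, upper):
    """Average interval length across simulation runs."""
    return float(np.mean(np.asarray(upper, dtype=float) - np.asarray(lower, dtype=float)))


lower, upper = wald_interval([0.2, 0.4], [0.1, 0.2])
print(mean_ci_width(lower, upper))  # equals 2 * z * mean(SE), roughly 0.59
```

Width scales with the reported SE, so a method can look good on this measure simply by understating uncertainty. For that reason, width is best read together with coverage.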

| Rank | Method | Log Value | Rank | Method | Log Value |
|---|---|---|---|---|---|
| 1 | RoBMA (PSMA) | 3.582 | 1 | RoBMA (PSMA) | 3.669 |
| 2 | AK (AK1) | 2.778 | 2 | AK (AK1) | 2.770 |
| 3 | RMA (default) | 2.193 | 3 | RMA (default) | 2.193 |
| 4 | trimfill (default) | 2.152 | 4 | trimfill (default) | 2.152 |
| 5 | puniform (default) | 1.977 | 5 | puniform (default) | 1.961 |
| 6 | WLS (default) | 1.676 | 6 | WLS (default) | 1.676 |
| 7 | WAAPWLS (default) | 1.563 | 7 | WAAPWLS (default) | 1.563 |
| 8 | puniform (star) | 1.441 | 8 | AK (AK2) | 1.556 |
| 9 | PEESE (default) | 1.327 | 9 | puniform (star) | 1.441 |
| 10 | PETPEESE (default) | 1.272 | 10 | PEESE (default) | 1.327 |
| 11 | AK (AK2) | 1.240 | 11 | PETPEESE (default) | 1.272 |
| 12 | SM (3PSM) | 1.112 | 12 | SM (3PSM) | 1.120 |
| 13 | FMA (default) | 1.095 | 13 | FMA (default) | 1.095 |
| 14 | EK (default) | 1.066 | 14 | EK (default) | 1.066 |
| 14 | PET (default) | 1.066 | 14 | PET (default) | 1.066 |
| 16 | mean (default) | 1.033 | 16 | mean (default) | 1.033 |
| 17 | SM (4PSM) | 0.893 | 17 | SM (4PSM) | 0.915 |
| 18 | WILS (default) | 0.856 | 18 | WILS (default) | 0.856 |
| 19 | pcurve (default) | NaN | 19 | pcurve (default) | NaN |

The positive likelihood ratio is an overall summary measure of hypothesis testing performance that combines power and type I error rate. It indicates how much a significant test result changes the odds of the alternative hypothesis versus the null hypothesis. A useful method has a positive likelihood ratio greater than 1 (or a log positive likelihood ratio greater than 0). A higher (log) positive likelihood ratio indicates a better method.
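
For a single condition, the positive likelihood ratio can be written as power divided by the type I error rate. The minimal sketch below assumes exactly that definition. It is not taken from the benchmark code, and the function name is hypothetical.

```python
import math


def positive_likelihood_ratio(power, type1_error):
    """LR+ = P(significant | H1) / P(significant | H0) for one condition."""
    if type1_error == 0:
        # Undefined when no false positives were observed; treated as infinite here
        # (an arbitrary convention for this sketch).
        return math.inf
    return power / type1_error


lr_pos = positive_likelihood_ratio(power=0.8, type1_error=0.05)
print(lr_pos, math.log(lr_pos))  # about 16 and 2.77
```

In this example a significant result multiplies the prior odds of the alternative hypothesis by about 16. A method whose power equals its type I error rate has LR+ = 1 (log LR+ = 0), so its significant results carry no information.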

| Rank | Method | Log Value | Rank | Method | Log Value |
|---|---|---|---|---|---|
| 1 | RMA (default) | -6.535 | 1 | RMA (default) | -6.535 |
| 2 | AK (AK1) | -6.401 | 2 | AK (AK1) | -6.404 |
| 3 | WLS (default) | -6.229 | 3 | WLS (default) | -6.229 |
| 4 | trimfill (default) | -6.222 | 4 | trimfill (default) | -6.224 |
| 5 | FMA (default) | -6.193 | 5 | FMA (default) | -6.193 |
| 6 | mean (default) | -5.430 | 6 | mean (default) | -5.430 |
| 7 | PEESE (default) | -5.202 | 7 | PEESE (default) | -5.202 |
| 8 | WAAPWLS (default) | -4.263 | 8 | WAAPWLS (default) | -4.263 |
| 9 | PETPEESE (default) | -4.199 | 9 | PETPEESE (default) | -4.199 |
| 10 | puniform (default) | -4.028 | 10 | puniform (default) | -4.030 |
| 11 | EK (default) | -3.956 | 11 | EK (default) | -3.956 |
| 11 | PET (default) | -3.956 | 11 | PET (default) | -3.956 |
| 13 | puniform (star) | -3.690 | 13 | puniform (star) | -3.690 |
| 14 | RoBMA (PSMA) | -3.314 | 14 | AK (AK2) | -3.483 |
| 15 | SM (3PSM) | -3.252 | 15 | RoBMA (PSMA) | -3.326 |
| 16 | WILS (default) | -3.212 | 16 | SM (3PSM) | -3.259 |
| 17 | SM (4PSM) | -2.447 | 17 | WILS (default) | -3.212 |
| 18 | AK (AK2) | -1.757 | 18 | SM (4PSM) | -2.519 |
| 19 | pcurve (default) | NaN | 19 | pcurve (default) | NaN |

The negative likelihood ratio is an overall summary measure of hypothesis testing performance that combines power and type I error rate. It indicates how much a non-significant test result changes the odds of the alternative hypothesis versus the null hypothesis. A useful method has a negative likelihood ratio less than 1 (or a log negative likelihood ratio less than 0). A lower (log) negative likelihood ratio indicates a better method.
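
By analogy, the negative likelihood ratio for a single condition is (1 - power) / (1 - type I error rate). The sketch below assumes this definition, and the function name is hypothetical.

```python
import math


def negative_likelihood_ratio(power, type1_error):
    """LR- = P(non-significant | H1) / P(non-significant | H0) for one condition."""
    return (1 - power) / (1 - type1_error)


lr_neg = negative_likelihood_ratio(power=0.8, type1_error=0.05)
print(lr_neg, math.log(lr_neg))  # about 0.21 and -1.56
```

In this example a non-significant result shrinks the prior odds of the alternative hypothesis to about a fifth. When power equals the type I error rate, LR- = 1 and the test result is uninformative.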

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | AK (AK1) | 0.114 | 1 | AK (AK1) | 0.114 |
| 2 | RoBMA (PSMA) | 0.143 | 2 | RoBMA (PSMA) | 0.143 |
| 3 | trimfill (default) | 0.155 | 3 | trimfill (default) | 0.155 |
| 4 | RMA (default) | 0.186 | 4 | RMA (default) | 0.186 |
| 5 | WAAPWLS (default) | 0.220 | 5 | WAAPWLS (default) | 0.220 |
| 6 | WLS (default) | 0.227 | 6 | WLS (default) | 0.227 |
| 7 | PETPEESE (default) | 0.302 | 7 | PETPEESE (default) | 0.302 |
| 8 | AK (AK2) | 0.312 | 8 | AK (AK2) | 0.307 |
| 9 | PEESE (default) | 0.312 | 9 | PEESE (default) | 0.312 |
| 10 | puniform (star) | 0.319 | 10 | puniform (star) | 0.319 |
| 11 | EK (default) | 0.348 | 11 | EK (default) | 0.348 |
| 11 | PET (default) | 0.348 | 11 | PET (default) | 0.348 |
| 13 | puniform (default) | 0.378 | 13 | puniform (default) | 0.377 |
| 14 | SM (4PSM) | 0.413 | 14 | SM (4PSM) | 0.413 |
| 15 | SM (3PSM) | 0.428 | 15 | SM (3PSM) | 0.428 |
| 16 | FMA (default) | 0.442 | 16 | FMA (default) | 0.442 |
| 17 | mean (default) | 0.499 | 17 | mean (default) | 0.499 |
| 18 | WILS (default) | 0.501 | 18 | WILS (default) | 0.501 |
| 19 | pcurve (default) | NaN | 19 | pcurve (default) | NaN |

The type I error rate is the proportion of simulation runs in which the null hypothesis of no effect was incorrectly rejected when it was true. Ideally, this value should be close to the nominal level of 5%.
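
The sketch below estimates a type I error rate from runs simulated under a true effect of zero. It assumes that each method's test is a two-sided Wald z-test of estimate/SE at α = 0.05. The compared methods may use other tests, and the function name is hypothetical.

```python
from statistics import NormalDist

import numpy as np


def rejection_rate(estimates, ses, alpha=0.05):
    """Share of runs in which a two-sided Wald z-test rejects H0: effect = 0."""
    z = np.abs(np.asarray(estimates, dtype=float) / np.asarray(ses, dtype=float))
    critical = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    return float(np.mean(z > critical))


# Runs simulated under a true effect of 0: the rejection rate is the type I error rate.
print(rejection_rate([0.05, 0.30, -0.25, 0.10], [0.1, 0.1, 0.1, 0.1]))  # 0.5
```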

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | mean (default) | 0.986 | 1 | mean (default) | 0.986 |
| 2 | FMA (default) | 0.985 | 2 | FMA (default) | 0.985 |
| 3 | RMA (default) | 0.969 | 3 | RMA (default) | 0.969 |
| 4 | WLS (default) | 0.964 | 4 | WLS (default) | 0.964 |
| 5 | trimfill (default) | 0.949 | 5 | trimfill (default) | 0.949 |
| 6 | AK (AK1) | 0.949 | 6 | AK (AK1) | 0.948 |
| 7 | PEESE (default) | 0.915 | 7 | PEESE (default) | 0.915 |
| 8 | puniform (default) | 0.885 | 8 | puniform (default) | 0.885 |
| 9 | WILS (default) | 0.876 | 9 | WILS (default) | 0.876 |
| 10 | WAAPWLS (default) | 0.858 | 10 | WAAPWLS (default) | 0.858 |
| 11 | PETPEESE (default) | 0.845 | 11 | PETPEESE (default) | 0.845 |
| 12 | EK (default) | 0.831 | 12 | EK (default) | 0.831 |
| 12 | PET (default) | 0.831 | 12 | PET (default) | 0.831 |
| 14 | SM (3PSM) | 0.819 | 14 | SM (3PSM) | 0.821 |
| 15 | puniform (star) | 0.811 | 15 | puniform (star) | 0.811 |
| 16 | SM (4PSM) | 0.732 | 16 | AK (AK2) | 0.795 |
| 17 | AK (AK2) | 0.711 | 17 | SM (4PSM) | 0.739 |
| 18 | RoBMA (PSMA) | 0.674 | 18 | RoBMA (PSMA) | 0.676 |
| 19 | pcurve (default) | NaN | 19 | pcurve (default) | NaN |

Power is the proportion of simulation runs in which the null hypothesis of no effect was correctly rejected when the alternative hypothesis was true. A higher power indicates a better method.
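
Type I error and power come from the same rejection indicator. The two differ only in whether the simulated true effect is zero. The sketch below makes that split explicit from per-run p-values. The function name and example data are hypothetical.

```python
import numpy as np


def type1_error_and_power(p_values, true_effects, alpha=0.05):
    """Split rejection rates by whether the simulated true effect is zero."""
    reject = np.asarray(p_values, dtype=float) < alpha
    is_null = np.asarray(true_effects, dtype=float) == 0
    type1_error = float(np.mean(reject[is_null]))  # rejections when H0 is true
    power = float(np.mean(reject[~is_null]))  # rejections when H1 is true
    return type1_error, power


p = [0.20, 0.01, 0.03, 0.60, 0.001]
theta = [0.0, 0.0, 0.3, 0.3, 0.5]
print(type1_error_and_power(p, theta))  # (0.5, 0.666...)
```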

By-Condition Performance (Conditional on Method Convergence)

The results below are conditional on method convergence. Because the methods may differ in convergence rates, they are not necessarily compared on the same data sets.
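
The sketch below shows why differing convergence rates matter. A performance measure such as bias is computed only over the runs in which a method converged, so two methods can end up evaluated on different subsets of the data. Marking failed runs with NaN is a convention assumed for this sketch, and the function name is hypothetical.

```python
import numpy as np


def bias_conditional_on_convergence(estimates, truth):
    """Bias over converged runs only, plus the convergence rate.

    Non-converged runs are marked with NaN (a convention assumed for this sketch).
    """
    est = np.asarray(estimates, dtype=float)
    converged = ~np.isnan(est)
    bias = float(np.mean(est[converged] - truth))
    return bias, float(np.mean(converged))


# Method B fails on the run where method A's estimate is largest, so the two
# methods are evaluated on different sets of simulated data sets.
a = [0.30, 0.60, 0.50, 0.10]
b = [0.25, np.nan, 0.45, 0.05]
print(bias_conditional_on_convergence(a, truth=0.2))
print(bias_conditional_on_convergence(b, truth=0.2))
```

Here method B looks less biased partly because the hardest run was dropped, not necessarily because it estimates better.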

RMSE (Root Mean Square Error) is an overall summary measure of estimation performance that combines bias and empirical SE. RMSE is the square root of the average squared difference between the meta-analytic estimate and the true effect across simulation runs. A lower RMSE indicates a better method. Values larger than 0.5 are visualized as 0.5.
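
As a minimal sketch, the following code computes RMSE, bias, and empirical SE from one method's estimates across runs in a single condition. The function names are hypothetical. When the empirical SE uses the population formula (ddof=0), the identity RMSE² = bias² + empirical SE² holds exactly, which is the sense in which RMSE combines the two. Simulation studies often report the empirical SE with an n - 1 denominator instead, and in that case the identity holds only approximately.

```python
import numpy as np


def rmse(estimates, truth):
    """Square root of the mean squared error across simulation runs."""
    err = np.asarray(estimates, dtype=float) - truth
    return float(np.sqrt(np.mean(err ** 2)))


def bias_and_empirical_se(estimates, truth):
    """Bias and empirical SE; ddof=0 so that rmse**2 == bias**2 + se**2 exactly."""
    est = np.asarray(estimates, dtype=float)
    return float(est.mean() - truth), float(est.std(ddof=0))


est = [0.1, 0.3, 0.5, 0.7]
r = rmse(est, truth=0.2)
b, s = bias_and_empirical_se(est, truth=0.2)
print(r, b, s)  # RMSE 0.3 = sqrt(0.2**2 + 0.05), up to floating-point rounding
```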

Bias is the average difference between the meta-analytic estimate and the true effect across simulation runs. Ideally, this value should be close to 0. Values lower than -0.5 or larger than 0.5 are visualized as -0.5 and 0.5 respectively.
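
The bias computation and the display cap described above can be sketched as follows. The clipping only changes the plotted value, not the bias itself. The names are hypothetical.

```python
import numpy as np


def bias(estimates, truth):
    """Average difference between the estimates and the true effect."""
    return float(np.mean(np.asarray(estimates, dtype=float) - truth))


def clip_for_display(value, lower=-0.5, upper=0.5):
    """Cap a value to the plotted range, as described for the bias figures."""
    return float(np.clip(value, lower, upper))


b = bias([0.1, 0.3, 0.5], truth=0.2)
print(b, clip_for_display(b), clip_for_display(-0.8))  # -0.8 is drawn as -0.5
```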

The empirical SE is the standard deviation of the meta-analytic estimate across simulation runs. A lower empirical SE indicates less variability and better method performance. Values larger than 0.5 are visualized as 0.5.

The interval score measures the accuracy of a confidence interval by combining its width and coverage. It penalizes intervals that are too wide or that fail to include the true value. A lower interval score indicates a better method. Values larger than 100 are visualized as 100.

95% CI coverage is the proportion of simulation runs in which the 95% confidence interval contained the true effect. Ideally, this value should be close to the nominal level of 95%.

95% CI width is the average length of the 95% confidence interval for the true effect. A narrower average 95% CI indicates a better method, provided that coverage stays close to the nominal level.

The positive likelihood ratio is an overall summary measure of hypothesis testing performance that combines power and type I error rate. It indicates how much a significant test result changes the odds of the alternative hypothesis versus the null hypothesis. A useful method has a positive likelihood ratio greater than 1 (or a log positive likelihood ratio greater than 0). A higher (log) positive likelihood ratio indicates a better method.

The negative likelihood ratio is an overall summary measure of hypothesis testing performance that combines power and type I error rate. It indicates how much a non-significant test result changes the odds of the alternative hypothesis versus the null hypothesis. A useful method has a negative likelihood ratio less than 1 (or a log negative likelihood ratio less than 0). A lower (log) negative likelihood ratio indicates a better method.

The type I error rate is the proportion of simulation runs in which the null hypothesis of no effect was incorrectly rejected when it was true. Ideally, this value should be close to the nominal level of 5%.

Power is the proportion of simulation runs in which the null hypothesis of no effect was correctly rejected when the alternative hypothesis was true. A higher power indicates a better method.

By-Condition Performance (Replacement in Case of Non-Convergence)

The results below incorporate method replacement to handle non-convergence. If a method fails to converge, its results are replaced with those of a simpler method (e.g., random-effects meta-analysis without publication bias adjustment). This emulates what a data analyst might do in practice when a method does not converge. Note, however, that these results do not correspond to “pure” method performance, because they may combine results from several different methods. See Method Replacement Strategy for details of the method replacement specification.
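
The fallback logic can be sketched as an ordered chain of methods, where the first method that converges supplies the result. The DerSimonian-Laird estimator stands in here for "random-effects meta-analysis without publication bias adjustment". The actual specification in Method Replacement Strategy may use a different estimator (for example REML) and a different fallback order. All function names, and the convention that non-convergence raises `RuntimeError`, are hypothetical.

```python
import numpy as np


def random_effects_dl(y, v):
    """DerSimonian-Laird random-effects estimate (used here as the fallback)."""
    y = np.asarray(y, dtype=float)
    v = np.asarray(v, dtype=float)
    w = 1 / v
    mu_fixed = np.sum(w * y) / np.sum(w)
    q = np.sum(w * (y - mu_fixed) ** 2)
    c = np.sum(w) - np.sum(w ** 2) / np.sum(w)
    tau2 = max(0.0, (q - (len(y) - 1)) / c)  # between-study variance, truncated at 0
    w_star = 1 / (v + tau2)
    return float(np.sum(w_star * y) / np.sum(w_star))


def estimate_with_replacement(y, v, methods):
    """Return (name, estimate) from the first method in `methods` that converges.

    Each method is a (name, function) pair; a function signals non-convergence by
    raising RuntimeError (a convention assumed for this sketch).
    """
    for name, fit in methods:
        try:
            return name, fit(y, v)
        except RuntimeError:
            continue
    raise RuntimeError("no method converged")


def hypothetical_adjusted_method(y, v):
    raise RuntimeError("did not converge")  # stands in for a failing bias-adjusted fit


chain = [("adjusted", hypothetical_adjusted_method), ("random-effects", random_effects_dl)]
print(estimate_with_replacement([0.2, 0.4, 0.6], [0.01, 0.01, 0.01], chain))
```

Because the reported result carries the name of the method that actually produced it, the sketch also makes visible why replacement results mix several methods.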

RMSE (Root Mean Square Error) is an overall summary measure of estimation performance that combines bias and empirical SE. RMSE is the square root of the average squared difference between the meta-analytic estimate and the true effect across simulation runs. A lower RMSE indicates a better method. Values larger than 0.5 are visualized as 0.5.

Bias is the average difference between the meta-analytic estimate and the true effect across simulation runs. Ideally, this value should be close to 0. Values lower than -0.5 or larger than 0.5 are visualized as -0.5 and 0.5 respectively.

The empirical SE is the standard deviation of the meta-analytic estimate across simulation runs. A lower empirical SE indicates less variability and better method performance. Values larger than 0.5 are visualized as 0.5.

The interval score measures the accuracy of a confidence interval by combining its width and coverage. It penalizes intervals that are too wide or that fail to include the true value. A lower interval score indicates a better method. Values larger than 100 are visualized as 100.

95% CI coverage is the proportion of simulation runs in which the 95% confidence interval contained the true effect. Ideally, this value should be close to the nominal level of 95%.

95% CI width is the average length of the 95% confidence interval for the true effect. A narrower average 95% CI indicates a better method, provided that coverage stays close to the nominal level.

The positive likelihood ratio is an overall summary measure of hypothesis testing performance that combines power and type I error rate. It indicates how much a significant test result changes the odds of the alternative hypothesis versus the null hypothesis. A useful method has a positive likelihood ratio greater than 1 (or a log positive likelihood ratio greater than 0). A higher (log) positive likelihood ratio indicates a better method.

The negative likelihood ratio is an overall summary measure of hypothesis testing performance that combines power and type I error rate. It indicates how much a non-significant test result changes the odds of the alternative hypothesis versus the null hypothesis. A useful method has a negative likelihood ratio less than 1 (or a log negative likelihood ratio less than 0). A lower (log) negative likelihood ratio indicates a better method.

The type I error rate is the proportion of simulation runs in which the null hypothesis of no effect was incorrectly rejected when it was true. Ideally, this value should be close to the nominal level of 5%.

Power is the proportion of simulation runs in which the null hypothesis of no effect was correctly rejected when the alternative hypothesis was true. A higher power indicates a better method.

Subset: Medium Questionable Research Practices

These results are based on the Carter (2019) data-generating mechanism with a total of 252 conditions.

Average Performance

Method performance measures are aggregated across all simulated conditions to provide an overall impression of method performance. However, keep in mind that a method with a high overall ranking is not necessarily the “best” method for a particular application. To select a suitable method for your application, also consider the non-aggregated performance measures in the conditions most relevant to it.
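
The ranks in the tables appear to follow a competition scheme ("1, 2, 2, 4"), in which tied values share the best rank. For example, EK and PET share rank 14 in the Complete Results positive likelihood ratio table, and the next method is ranked 16. Measures with an ideal value (bias, coverage, type I error) appear to be ordered by distance from that value. The sketch below reproduces that scheme for already-aggregated values. The aggregation step itself and the function name are assumptions, not the benchmark's code.

```python
import math


def competition_rank(values, target=None, higher_is_better=False):
    """Rank methods by an aggregated measure; tied scores share the best rank.

    values: dict mapping method name -> aggregated value.
    target: ideal value (e.g., 0 for bias, 0.95 for coverage); when given,
            methods are ranked by absolute distance from it.
    Missing results (NaN) are placed last.
    """
    def score(x):
        if math.isnan(x):
            return math.inf
        if target is not None:
            return abs(x - target)
        return -x if higher_is_better else x

    scores = {name: score(x) for name, x in values.items()}
    # Rank = 1 + number of methods with a strictly better score
    ranks = {name: 1 + sum(s < own for s in scores.values()) for name, own in scores.items()}
    return sorted(ranks.items(), key=lambda item: item[1])


print(competition_rank({"A": 0.90, "B": 0.97, "C": 0.99}, target=0.95))
print(competition_rank({"FMA": 0.124, "WLS": 0.124, "WILS": 0.128, "pcurve": float("nan")}))
```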

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | AK (AK1) | 0.087 | 1 | AK (AK1) | 0.087 |
| 2 | PEESE (default) | 0.101 | 2 | PEESE (default) | 0.101 |
| 3 | WAAPWLS (default) | 0.107 | 3 | AK (AK2) | 0.105 |
| 4 | PETPEESE (default) | 0.111 | 4 | WAAPWLS (default) | 0.107 |
| 5 | trimfill (default) | 0.117 | 5 | PETPEESE (default) | 0.111 |
| 6 | WILS (default) | 0.122 | 6 | trimfill (default) | 0.117 |
| 7 | FMA (default) | 0.133 | 7 | WILS (default) | 0.122 |
| 7 | WLS (default) | 0.133 | 8 | FMA (default) | 0.133 |
| 9 | RoBMA (PSMA) | 0.135 | 8 | WLS (default) | 0.133 |
| 10 | AK (AK2) | 0.136 | 10 | RoBMA (PSMA) | 0.137 |
| 11 | pcurve (default) | 0.143 | 11 | pcurve (default) | 0.142 |
| 12 | EK (default) | 0.145 | 12 | EK (default) | 0.145 |
| 13 | PET (default) | 0.145 | 13 | PET (default) | 0.145 |
| 14 | RMA (default) | 0.191 | 14 | RMA (default) | 0.191 |
| 15 | puniform (default) | 0.259 | 15 | puniform (default) | 0.251 |
| 16 | SM (3PSM) | 0.272 | 16 | SM (3PSM) | 0.263 |
| 17 | mean (default) | 0.284 | 17 | mean (default) | 0.284 |
| 18 | SM (4PSM) | 0.444 | 18 | SM (4PSM) | 0.462 |
| 19 | puniform (star) | 147.205 | 19 | puniform (star) | 147.205 |

RMSE (Root Mean Square Error) is an overall summary measure of estimation performance that combines bias and empirical SE. RMSE is the square root of the average squared difference between the meta-analytic estimate and the true effect across simulation runs. A lower RMSE indicates a better method.

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | PETPEESE (default) | 0.011 | 1 | PETPEESE (default) | 0.011 |
| 2 | AK (AK1) | 0.036 | 2 | AK (AK2) | -0.018 |
| 3 | PEESE (default) | 0.037 | 3 | AK (AK1) | 0.036 |
| 4 | pcurve (default) | 0.042 | 4 | PEESE (default) | 0.037 |
| 5 | puniform (default) | 0.045 | 5 | pcurve (default) | 0.042 |
| 6 | WILS (default) | -0.048 | 6 | puniform (default) | 0.046 |
| 7 | EK (default) | -0.052 | 7 | WILS (default) | -0.048 |
| 8 | PET (default) | -0.052 | 8 | EK (default) | -0.052 |
| 9 | WAAPWLS (default) | 0.068 | 9 | PET (default) | -0.052 |
| 10 | AK (AK2) | -0.086 | 10 | WAAPWLS (default) | 0.068 |
| 11 | SM (3PSM) | -0.090 | 11 | SM (3PSM) | -0.084 |
| 12 | trimfill (default) | 0.090 | 12 | RoBMA (PSMA) | -0.085 |
| 13 | RoBMA (PSMA) | -0.091 | 13 | trimfill (default) | 0.090 |
| 14 | FMA (default) | 0.112 | 14 | FMA (default) | 0.112 |
| 14 | WLS (default) | 0.112 | 14 | WLS (default) | 0.112 |
| 16 | RMA (default) | 0.183 | 16 | RMA (default) | 0.183 |
| 17 | SM (4PSM) | -0.202 | 17 | SM (4PSM) | -0.204 |
| 18 | mean (default) | 0.277 | 18 | mean (default) | 0.277 |
| 19 | puniform (star) | -23.968 | 19 | puniform (star) | -23.968 |

Bias is the average difference between the meta-analytic estimate and the true effect across simulation runs. Ideally, this value should be close to 0.

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | RMA (default) | 0.041 | 1 | RMA (default) | 0.041 |
| 2 | AK (AK1) | 0.042 | 2 | AK (AK1) | 0.042 |
| 3 | trimfill (default) | 0.044 | 3 | trimfill (default) | 0.045 |
| 4 | mean (default) | 0.052 | 4 | mean (default) | 0.052 |
| 5 | FMA (default) | 0.059 | 5 | FMA (default) | 0.059 |
| 5 | WLS (default) | 0.059 | 5 | WLS (default) | 0.059 |
| 7 | pcurve (default) | 0.071 | 7 | pcurve (default) | 0.070 |
| 8 | PEESE (default) | 0.074 | 8 | PEESE (default) | 0.074 |
| 9 | WAAPWLS (default) | 0.074 | 9 | WAAPWLS (default) | 0.074 |
| 10 | RoBMA (PSMA) | 0.078 | 10 | AK (AK2) | 0.081 |
| 11 | AK (AK2) | 0.079 | 11 | WILS (default) | 0.089 |
| 12 | WILS (default) | 0.089 | 12 | RoBMA (PSMA) | 0.091 |
| 13 | PETPEESE (default) | 0.095 | 13 | PETPEESE (default) | 0.095 |
| 14 | PET (default) | 0.118 | 14 | PET (default) | 0.118 |
| 15 | EK (default) | 0.118 | 15 | EK (default) | 0.118 |
| 16 | puniform (default) | 0.183 | 16 | puniform (default) | 0.175 |
| 17 | SM (3PSM) | 0.220 | 17 | SM (3PSM) | 0.212 |
| 18 | SM (4PSM) | 0.343 | 18 | SM (4PSM) | 0.361 |
| 19 | puniform (star) | 144.875 | 19 | puniform (star) | 144.875 |

The empirical SE is the standard deviation of the meta-analytic estimate across simulation runs. A lower empirical SE indicates less variability and better method performance.

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | AK (AK2) | 1.212 | 1 | AK (AK2) | 0.960 |
| 2 | AK (AK1) | 1.235 | 2 | AK (AK1) | 1.240 |
| 3 | puniform (star) | 1.358 | 3 | puniform (star) | 1.358 |
| 4 | WAAPWLS (default) | 1.375 | 4 | WAAPWLS (default) | 1.375 |
| 5 | PETPEESE (default) | 1.437 | 5 | PETPEESE (default) | 1.437 |
| 6 | PEESE (default) | 1.462 | 6 | PEESE (default) | 1.462 |
| 7 | SM (3PSM) | 1.698 | 7 | SM (3PSM) | 1.674 |
| 8 | RoBMA (PSMA) | 1.724 | 8 | EK (default) | 1.745 |
| 9 | EK (default) | 1.745 | 9 | RoBMA (PSMA) | 1.780 |
| 10 | PET (default) | 1.819 | 10 | PET (default) | 1.819 |
| 11 | WILS (default) | 1.929 | 11 | WILS (default) | 1.929 |
| 12 | trimfill (default) | 2.200 | 12 | trimfill (default) | 2.202 |
| 13 | puniform (default) | 2.525 | 13 | puniform (default) | 2.516 |
| 14 | WLS (default) | 2.871 | 14 | WLS (default) | 2.871 |
| 15 | SM (4PSM) | 3.078 | 15 | SM (4PSM) | 2.975 |
| 16 | FMA (default) | 3.472 | 16 | FMA (default) | 3.472 |
| 17 | RMA (default) | 4.763 | 17 | RMA (default) | 4.763 |
| 18 | mean (default) | 8.442 | 18 | mean (default) | 8.442 |
| 19 | pcurve (default) | NaN | 19 | pcurve (default) | NaN |

The interval score measures the accuracy of a confidence interval by combining its width and coverage. It penalizes intervals that are too wide or that fail to include the true value. A lower interval score indicates a better method.

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | AK (AK2) | 0.735 | 1 | AK (AK2) | 0.686 |
| 2 | RoBMA (PSMA) | 0.653 | 2 | RoBMA (PSMA) | 0.650 |
| 3 | SM (3PSM) | 0.640 | 3 | SM (3PSM) | 0.635 |
| 4 | puniform (star) | 0.633 | 4 | puniform (star) | 0.633 |
| 5 | SM (4PSM) | 0.619 | 5 | SM (4PSM) | 0.614 |
| 6 | WAAPWLS (default) | 0.570 | 6 | WAAPWLS (default) | 0.570 |
| 7 | AK (AK1) | 0.553 | 7 | AK (AK1) | 0.551 |
| 8 | puniform (default) | 0.529 | 8 | puniform (default) | 0.529 |
| 9 | PETPEESE (default) | 0.512 | 9 | PETPEESE (default) | 0.512 |
| 10 | EK (default) | 0.468 | 10 | EK (default) | 0.468 |
| 11 | PET (default) | 0.453 | 11 | PET (default) | 0.453 |
| 12 | PEESE (default) | 0.446 | 12 | PEESE (default) | 0.446 |
| 13 | trimfill (default) | 0.427 | 13 | trimfill (default) | 0.426 |
| 14 | WILS (default) | 0.412 | 14 | WILS (default) | 0.412 |
| 15 | WLS (default) | 0.275 | 15 | WLS (default) | 0.275 |
| 16 | FMA (default) | 0.213 | 16 | FMA (default) | 0.213 |
| 17 | RMA (default) | 0.191 | 17 | RMA (default) | 0.191 |
| 18 | mean (default) | 0.059 | 18 | mean (default) | 0.059 |
| 19 | pcurve (default) | NaN | 19 | pcurve (default) | NaN |

95% CI coverage is the proportion of simulation runs in which the 95% confidence interval contained the true effect. Ideally, this value should be close to the nominal level of 95%.

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | FMA (default) | 0.089 | 1 | FMA (default) | 0.089 |
| 2 | WLS (default) | 0.134 | 2 | WLS (default) | 0.134 |
| 3 | mean (default) | 0.147 | 3 | mean (default) | 0.147 |
| 4 | PEESE (default) | 0.150 | 4 | PEESE (default) | 0.150 |
| 5 | trimfill (default) | 0.160 | 5 | trimfill (default) | 0.160 |
| 6 | WILS (default) | 0.160 | 6 | WILS (default) | 0.160 |
| 7 | RMA (default) | 0.164 | 7 | RMA (default) | 0.164 |
| 8 | AK (AK1) | 0.168 | 8 | AK (AK1) | 0.168 |
| 9 | PETPEESE (default) | 0.184 | 9 | PETPEESE (default) | 0.184 |
| 10 | WAAPWLS (default) | 0.198 | 10 | WAAPWLS (default) | 0.198 |
| 11 | PET (default) | 0.237 | 11 | PET (default) | 0.237 |
| 12 | EK (default) | 0.257 | 12 | EK (default) | 0.257 |
| 13 | RoBMA (PSMA) | 0.288 | 13 | AK (AK2) | 0.266 |
| 14 | puniform (star) | 0.332 | 14 | RoBMA (PSMA) | 0.286 |
| 15 | puniform (default) | 0.375 | 15 | puniform (star) | 0.332 |
| 16 | AK (AK2) | 0.433 | 16 | puniform (default) | 0.367 |
| 17 | SM (3PSM) | 0.543 | 17 | SM (3PSM) | 0.498 |
| 18 | SM (4PSM) | 1.069 | 18 | SM (4PSM) | 0.939 |
| 19 | pcurve (default) | NaN | 19 | pcurve (default) | NaN |

95% CI width is the average length of the 95% confidence interval for the true effect. A narrower average 95% CI indicates a better method, provided that coverage stays close to the nominal level.

| Rank | Method | Log Value | Rank | Method | Log Value |
|---|---|---|---|---|---|
| 1 | puniform (default) | 1.991 | 1 | puniform (default) | 1.983 |
| 2 | RoBMA (PSMA) | 1.807 | 2 | RoBMA (PSMA) | 1.743 |
| 3 | AK (AK2) | 1.595 | 3 | PETPEESE (default) | 1.261 |
| 4 | PETPEESE (default) | 1.261 | 4 | PET (default) | 1.012 |
| 5 | PET (default) | 1.012 | 5 | EK (default) | 1.012 |
| 6 | EK (default) | 1.012 | 6 | AK (AK2) | 0.847 |
| 7 | SM (3PSM) | 0.833 | 7 | SM (3PSM) | 0.828 |
| 8 | WAAPWLS (default) | 0.665 | 8 | WAAPWLS (default) | 0.665 |
| 9 | puniform (star) | 0.553 | 9 | puniform (star) | 0.553 |
| 10 | PEESE (default) | 0.461 | 10 | PEESE (default) | 0.461 |
| 11 | WILS (default) | 0.427 | 11 | WILS (default) | 0.427 |
| 12 | AK (AK1) | 0.242 | 12 | AK (AK1) | 0.241 |
| 13 | trimfill (default) | 0.193 | 13 | trimfill (default) | 0.193 |
| 14 | WLS (default) | 0.154 | 14 | WLS (default) | 0.154 |
| 15 | RMA (default) | 0.088 | 15 | RMA (default) | 0.088 |
| 16 | SM (4PSM) | 0.057 | 16 | SM (4PSM) | 0.072 |
| 17 | FMA (default) | 0.053 | 17 | FMA (default) | 0.053 |
| 18 | mean (default) | 0.019 | 18 | mean (default) | 0.019 |
| 19 | pcurve (default) | NaN | 19 | pcurve (default) | NaN |

The positive likelihood ratio is an overall summary measure of hypothesis testing performance that combines power and type I error rate. It indicates how much a significant test result changes the odds of the alternative hypothesis versus the null hypothesis. A useful method has a positive likelihood ratio greater than 1 (or a log positive likelihood ratio greater than 0). A higher (log) positive likelihood ratio indicates a better method.

| Rank | Method | Log Value | Rank | Method | Log Value |
|---|---|---|---|---|---|
| 1 | WAAPWLS (default) | -4.827 | 1 | WAAPWLS (default) | -4.827 |
| 2 | PETPEESE (default) | -4.469 | 2 | PETPEESE (default) | -4.469 |
| 3 | EK (default) | -4.350 | 3 | EK (default) | -4.350 |
| 4 | PET (default) | -4.350 | 4 | PET (default) | -4.350 |
| 5 | PEESE (default) | -4.272 | 5 | PEESE (default) | -4.272 |
| 6 | puniform (default) | -3.630 | 6 | AK (AK2) | -4.067 |
| 7 | WLS (default) | -2.606 | 7 | puniform (default) | -3.629 |
| 8 | SM (3PSM) | -2.571 | 8 | WLS (default) | -2.606 |
| 9 | trimfill (default) | -2.517 | 9 | SM (3PSM) | -2.590 |
| 10 | WILS (default) | -2.389 | 10 | trimfill (default) | -2.519 |
| 11 | AK (AK2) | -2.109 | 11 | WILS (default) | -2.389 |
| 12 | FMA (default) | -2.070 | 12 | FMA (default) | -2.070 |
| 13 | puniform (star) | -2.025 | 13 | puniform (star) | -2.025 |
| 14 | RoBMA (PSMA) | -1.921 | 14 | RoBMA (PSMA) | -1.913 |
| 15 | AK (AK1) | -1.790 | 15 | AK (AK1) | -1.793 |
| 16 | RMA (default) | -1.448 | 16 | RMA (default) | -1.448 |
| 17 | mean (default) | -0.850 | 17 | mean (default) | -0.850 |
| 18 | SM (4PSM) | -0.444 | 18 | SM (4PSM) | -0.550 |
| 19 | pcurve (default) | NaN | 19 | pcurve (default) | NaN |

The negative likelihood ratio is an overall summary measure of hypothesis testing performance that combines power and type I error rate. It indicates how much a non-significant test result changes the odds of the alternative hypothesis versus the null hypothesis. A useful method has a negative likelihood ratio less than 1 (or a log negative likelihood ratio less than 0). A lower (log) negative likelihood ratio indicates a better method.

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | AK (AK2) | 0.169 | 1 | RoBMA (PSMA) | 0.333 |
| 2 | RoBMA (PSMA) | 0.324 | 2 | PETPEESE (default) | 0.357 |
| 3 | PETPEESE (default) | 0.357 | 3 | PET (default) | 0.397 |
| 4 | PET (default) | 0.397 | 4 | EK (default) | 0.397 |
| 5 | EK (default) | 0.397 | 5 | puniform (default) | 0.483 |
| 6 | puniform (default) | 0.483 | 6 | AK (AK2) | 0.492 |
| 7 | SM (3PSM) | 0.491 | 7 | SM (3PSM) | 0.493 |
| 8 | WAAPWLS (default) | 0.515 | 8 | WAAPWLS (default) | 0.515 |
| 9 | puniform (star) | 0.518 | 9 | puniform (star) | 0.518 |
| 10 | WILS (default) | 0.601 | 10 | WILS (default) | 0.601 |
| 11 | SM (4PSM) | 0.623 | 11 | SM (4PSM) | 0.625 |
| 12 | PEESE (default) | 0.684 | 12 | PEESE (default) | 0.684 |
| 13 | trimfill (default) | 0.857 | 13 | trimfill (default) | 0.857 |
| 14 | AK (AK1) | 0.867 | 14 | AK (AK1) | 0.867 |
| 15 | WLS (default) | 0.876 | 15 | WLS (default) | 0.876 |
| 16 | RMA (default) | 0.932 | 16 | RMA (default) | 0.932 |
| 17 | FMA (default) | 0.954 | 17 | FMA (default) | 0.954 |
| 18 | mean (default) | 0.983 | 18 | mean (default) | 0.983 |
| 19 | pcurve (default) | NaN | 19 | pcurve (default) | NaN |

The type I error rate is the proportion of simulation runs in which the null hypothesis of no effect was incorrectly rejected when it was true. Ideally, this value should be close to the nominal level of 5%.

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | mean (default) | 1.000 | 1 | mean (default) | 1.000 |
| 2 | FMA (default) | 0.999 | 2 | FMA (default) | 0.999 |
| 3 | RMA (default) | 0.998 | 3 | RMA (default) | 0.998 |
| 4 | trimfill (default) | 0.995 | 4 | trimfill (default) | 0.995 |
| 5 | WLS (default) | 0.994 | 5 | WLS (default) | 0.994 |
| 6 | AK (AK1) | 0.990 | 6 | AK (AK1) | 0.990 |
| 7 | PEESE (default) | 0.981 | 7 | PEESE (default) | 0.981 |
| 8 | WAAPWLS (default) | 0.948 | 8 | WAAPWLS (default) | 0.948 |
| 9 | puniform (default) | 0.923 | 9 | puniform (default) | 0.923 |
| 10 | PETPEESE (default) | 0.915 | 10 | PETPEESE (default) | 0.915 |
| 11 | EK (default) | 0.900 | 11 | EK (default) | 0.900 |
| 12 | PET (default) | 0.900 | 12 | PET (default) | 0.900 |
| 13 | WILS (default) | 0.861 | 13 | AK (AK2) | 0.879 |
| 14 | SM (3PSM) | 0.821 | 14 | WILS (default) | 0.861 |
| 15 | puniform (star) | 0.755 | 15 | SM (3PSM) | 0.826 |
| 16 | AK (AK2) | 0.716 | 16 | puniform (star) | 0.755 |
| 17 | SM (4PSM) | 0.670 | 17 | SM (4PSM) | 0.684 |
| 18 | RoBMA (PSMA) | 0.629 | 18 | RoBMA (PSMA) | 0.634 |
| 19 | pcurve (default) | NaN | 19 | pcurve (default) | NaN |

Power is the proportion of simulation runs in which the null hypothesis of no effect was correctly rejected when the alternative hypothesis was true. A higher power indicates a better method.

By-Condition Performance (Conditional on Method Convergence)

The results below are conditional on method convergence. Because the methods may differ in convergence rates, they are not necessarily compared on the same data sets.

RMSE (Root Mean Square Error) is an overall summary measure of estimation performance that combines bias and empirical SE. RMSE is the square root of the average squared difference between the meta-analytic estimate and the true effect across simulation runs. A lower RMSE indicates a better method. Values larger than 0.5 are visualized as 0.5.

Bias is the average difference between the meta-analytic estimate and the true effect across simulation runs. Ideally, this value should be close to 0. Values lower than -0.5 or larger than 0.5 are visualized as -0.5 and 0.5 respectively.

The empirical SE is the standard deviation of the meta-analytic estimate across simulation runs. A lower empirical SE indicates less variability and better method performance. Values larger than 0.5 are visualized as 0.5.

The interval score measures the accuracy of a confidence interval by combining its width and coverage. It penalizes intervals that are too wide or that fail to include the true value. A lower interval score indicates a better method. Values larger than 100 are visualized as 100.

95% CI coverage is the proportion of simulation runs in which the 95% confidence interval contained the true effect. Ideally, this value should be close to the nominal level of 95%.

95% CI width is the average length of the 95% confidence interval for the true effect. A narrower average 95% CI indicates a better method, provided that coverage stays close to the nominal level.

The positive likelihood ratio is an overall summary measure of hypothesis testing performance that combines power and type I error rate. It indicates how much a significant test result changes the odds of the alternative hypothesis versus the null hypothesis. A useful method has a positive likelihood ratio greater than 1 (or a log positive likelihood ratio greater than 0). A higher (log) positive likelihood ratio indicates a better method.

The negative likelihood ratio is an overall summary measure of hypothesis testing performance that combines power and type I error rate. It indicates how much a non-significant test result changes the odds of the alternative hypothesis versus the null hypothesis. A useful method has a negative likelihood ratio less than 1 (or a log negative likelihood ratio less than 0). A lower (log) negative likelihood ratio indicates a better method.

The type I error rate is the proportion of simulation runs in which the null hypothesis of no effect was incorrectly rejected when it was true. Ideally, this value should be close to the nominal level of 5%.

Power is the proportion of simulation runs in which the null hypothesis of no effect was correctly rejected when the alternative hypothesis was true. A higher power indicates a better method.

By-Condition Performance (Replacement in Case of Non-Convergence)

The results below incorporate method replacement to handle non-convergence. If a method fails to converge, its results are replaced with the results from a simpler method (e.g., random-effects meta-analysis without publication bias adjustment). This mimics what a data analyst might do in practice when a method does not converge. Note, however, that these results do not reflect “pure” method performance because they may combine results from several different methods. See Method Replacement Strategy for details of the method replacement specification.
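The replacement logic can be sketched as a fallback chain: try each method in order and keep the first result that converges. This is an illustrative sketch, not the benchmark's actual specification. `hypothetical_complex_method` is a placeholder that always fails, and the fallback uses the DerSimonian-Laird random-effects estimator as a simple stand-in (the benchmark's RMA may use a different heterogeneity estimator).

```python
import math
from typing import Callable, List, Optional, Sequence, Tuple

# An estimator returns a pooled estimate, or None when it fails to converge.
Estimator = Callable[[Sequence[float], Sequence[float]], Optional[float]]


def random_effects_dl(effects, ses):
    """DerSimonian-Laird random-effects estimate (simple stand-in for RMA)."""
    w = [1.0 / s ** 2 for s in ses]
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - (len(effects) - 1)) / c) if c > 0 else 0.0
    w_star = [1.0 / (s ** 2 + tau2) for s in ses]
    return sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)


def hypothetical_complex_method(effects, ses):
    """Hypothetical bias-adjustment method that fails to converge here."""
    return None


def estimate_with_replacement(effects, ses, methods: List[Tuple[str, Estimator]]):
    """Return (name, estimate) from the first method in `methods` that converges."""
    for name, method in methods:
        estimate = method(effects, ses)
        if estimate is not None and math.isfinite(estimate):
            return name, estimate
    return None, None


chain = [
    ("complex method (hypothetical)", hypothetical_complex_method),
    ("random-effects (DL)", random_effects_dl),
]
used, estimate = estimate_with_replacement([0.2, 0.4], [0.1, 0.1], chain)
```

Recording which method actually produced each estimate (`used`) makes it possible to report how often replacement occurred.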

RMSE (Root Mean Square Error) is an overall summary measure of estimation performance that combines bias and empirical SE. RMSE is the square root of the average squared difference between the meta-analytic estimate and the true effect across simulation runs. A lower RMSE indicates a better method. Values larger than 0.5 are visualized as 0.5.
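To see how RMSE "combines bias and empirical SE", the sketch below computes RMSE directly and checks the identity RMSE² = bias² + SD², which holds exactly when the SD uses the n denominator (with the n - 1 sample SD it holds only approximately). The estimates are illustrative.

```python
import math
import statistics


def rmse(estimates, truth):
    """Root mean square error of the estimates around the true effect."""
    return math.sqrt(sum((e - truth) ** 2 for e in estimates) / len(estimates))


runs = [0.20, 0.30, 0.25, 0.35]  # illustrative estimates
true_effect = 0.20

bias = statistics.mean(runs) - true_effect
spread = statistics.pstdev(runs)  # population SD (n denominator)

# RMSE^2 = bias^2 + SD^2 holds exactly with the n-denominator SD.
assert math.isclose(rmse(runs, true_effect) ** 2, bias ** 2 + spread ** 2)
```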

Bias is the average difference between the meta-analytic estimate and the true effect across simulation runs. Ideally, this value should be close to 0. Values lower than -0.5 or larger than 0.5 are visualized as -0.5 and 0.5 respectively.
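A minimal sketch of bias, together with the display clipping described above (values outside [-0.5, 0.5] are drawn at the boundary). The function names are illustrative, and only the plotted value is clipped, not the reported number.

```python
def bias(estimates, truth):
    """Average signed difference between the estimates and the true effect."""
    return sum(e - truth for e in estimates) / len(estimates)


def clip_for_plot(value, lower=-0.5, upper=0.5):
    """Cap a value to [lower, upper] for visualization only."""
    return max(lower, min(upper, value))


b = bias([0.20, 0.30, 0.25, 0.35], truth=0.20)  # illustrative estimates
```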

The empirical SE is the standard deviation of the meta-analytic estimate across simulation runs. A lower empirical SE indicates less variability and better method performance. Values larger than 0.5 are visualized as 0.5.

The interval score measures the accuracy of a confidence interval by combining its width and coverage. It penalizes intervals that are too wide or that fail to include the true value. A lower interval score indicates a better method. Values larger than 100 are visualized as 100.

95% CI coverage is the proportion of simulation runs in which the 95% confidence interval contained the true effect. Ideally, this value should be close to the nominal level of 95%.

95% CI width is the average length of the 95% confidence interval for the true effect. A lower average 95% CI width indicates a better method, provided that coverage remains close to the nominal level.

The positive likelihood ratio is an overall summary measure of hypothesis testing performance that combines power and type I error rate. It indicates how much a significant test result changes the odds of the alternative hypothesis versus the null hypothesis. A useful method has a positive likelihood ratio greater than 1 (or a log positive likelihood ratio greater than 0). A higher (log) positive likelihood ratio indicates a better method.

The negative likelihood ratio is an overall summary measure of hypothesis testing performance that combines power and type I error rate. It indicates how much a non-significant test result changes the odds of the alternative hypothesis versus the null hypothesis. A useful method has a negative likelihood ratio less than 1 (or a log negative likelihood ratio less than 0). A lower (log) negative likelihood ratio indicates a better method.

The type I error rate is the proportion of simulation runs in which the null hypothesis of no effect was incorrectly rejected when it was true. Ideally, this value should be close to the nominal level of 5%.

The power is the proportion of simulation runs in which the null hypothesis of no effect was correctly rejected when the alternative hypothesis was true. A higher power indicates a better method.

Subset: High Questionable Research Practices

These results are based on the Carter (2019) data-generating mechanism, with a total of 252 conditions.

Average Performance

Method performance measures are aggregated across all simulated conditions to provide an overall impression of method performance. However, keep in mind that a method with a high overall ranking is not necessarily the “best” method for a particular application. To select a suitable method for your application, also consider the non-aggregated performance measures in the conditions most relevant to your application.
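The rankings in the tables appear to follow standard competition ranking: tied methods share a rank and the next rank is skipped (for example, FMA and WLS share rank 13 for RMSE, and RMA follows at 15). The sketch below reproduces that scheme under the assumption that ties are detected on the unrounded values and that bias is ranked by absolute value. The function name is hypothetical.

```python
def competition_rank(scores, key=lambda v: v, higher_is_better=False):
    """Standard competition ranking ("1224"): tied scores share the lowest rank."""
    ordered = sorted(scores.items(), key=lambda kv: key(kv[1]), reverse=higher_is_better)
    ranks = {}
    prev_key = None
    current_rank = 0
    for position, (method, value) in enumerate(ordered, start=1):
        k = key(value)
        if k != prev_key:  # a new distinct value starts a new rank
            current_rank = position
            prev_key = k
        ranks[method] = current_rank
    return ranks


rmse_ranks = competition_rank({"FMA": 0.175, "WLS": 0.175, "RMA": 0.244, "mean": 0.358})
bias_ranks = competition_rank({"WILS": -0.012, "EK": -0.032, "pcurve": 0.038}, key=abs)
```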

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | AK (AK1) | 0.105 | 1 | AK (AK1) | 0.105 |
| 2 | PEESE (default) | 0.127 | 2 | AK (AK2) | 0.117 |
| 3 | PETPEESE (default) | 0.129 | 3 | PEESE (default) | 0.127 |
| 4 | pcurve (default) | 0.129 | 4 | PETPEESE (default) | 0.129 |
| 5 | WILS (default) | 0.130 | 5 | pcurve (default) | 0.129 |
| 6 | WAAPWLS (default) | 0.131 | 6 | WILS (default) | 0.130 |
| 7 | EK (default) | 0.140 | 7 | WAAPWLS (default) | 0.131 |
| 8 | PET (default) | 0.140 | 8 | EK (default) | 0.140 |
| 9 | puniform (default) | 0.149 | 9 | PET (default) | 0.140 |
| 10 | AK (AK2) | 0.158 | 10 | puniform (default) | 0.149 |
| 11 | trimfill (default) | 0.159 | 11 | trimfill (default) | 0.159 |
| 12 | RoBMA (PSMA) | 0.162 | 12 | RoBMA (PSMA) | 0.164 |
| 13 | FMA (default) | 0.175 | 13 | FMA (default) | 0.175 |
| 13 | WLS (default) | 0.175 | 13 | WLS (default) | 0.175 |
| 15 | RMA (default) | 0.244 | 15 | RMA (default) | 0.244 |
| 16 | mean (default) | 0.358 | 16 | mean (default) | 0.358 |
| 17 | SM (3PSM) | 0.493 | 17 | SM (3PSM) | 0.468 |
| 18 | SM (4PSM) | 0.736 | 18 | SM (4PSM) | 0.779 |
| 19 | puniform (star) | 314.821 | 19 | puniform (star) | 314.821 |

RMSE (Root Mean Square Error) is an overall summary measure of estimation performance that combines bias and empirical SE. RMSE is the square root of the average squared difference between the meta-analytic estimate and the true effect across simulation runs. A lower RMSE indicates a better method.

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | WILS (default) | -0.012 | 1 | WILS (default) | -0.012 |
| 2 | EK (default) | -0.032 | 2 | EK (default) | -0.032 |
| 3 | PET (default) | -0.032 | 3 | PET (default) | -0.032 |
| 4 | pcurve (default) | 0.038 | 4 | pcurve (default) | 0.038 |
| 5 | PETPEESE (default) | 0.046 | 5 | PETPEESE (default) | 0.046 |
| 6 | AK (AK2) | 0.050 | 6 | AK (AK2) | 0.046 |
| 7 | puniform (default) | 0.054 | 7 | puniform (default) | 0.054 |
| 8 | AK (AK1) | 0.062 | 8 | AK (AK1) | 0.062 |
| 9 | PEESE (default) | 0.075 | 9 | PEESE (default) | 0.075 |
| 10 | WAAPWLS (default) | 0.101 | 10 | RoBMA (PSMA) | -0.094 |
| 11 | RoBMA (PSMA) | -0.103 | 11 | WAAPWLS (default) | 0.101 |
| 12 | trimfill (default) | 0.136 | 12 | SM (3PSM) | -0.130 |
| 13 | SM (3PSM) | -0.148 | 13 | trimfill (default) | 0.136 |
| 14 | FMA (default) | 0.159 | 14 | FMA (default) | 0.159 |
| 14 | WLS (default) | 0.159 | 14 | WLS (default) | 0.159 |
| 16 | RMA (default) | 0.237 | 16 | RMA (default) | 0.237 |
| 17 | SM (4PSM) | -0.291 | 17 | SM (4PSM) | -0.299 |
| 18 | mean (default) | 0.353 | 18 | mean (default) | 0.353 |
| 19 | puniform (star) | -67.517 | 19 | puniform (star) | -67.517 |

Bias is the average difference between the meta-analytic estimate and the true effect across simulation runs. Ideally, this value should be close to 0.

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | RMA (default) | 0.038 | 1 | RMA (default) | 0.038 |
| 2 | AK (AK1) | 0.041 | 2 | AK (AK1) | 0.042 |
| 3 | trimfill (default) | 0.042 | 3 | trimfill (default) | 0.042 |
| 4 | mean (default) | 0.048 | 4 | mean (default) | 0.048 |
| 5 | pcurve (default) | 0.055 | 5 | pcurve (default) | 0.055 |
| 6 | WLS (default) | 0.057 | 6 | WLS (default) | 0.057 |
| 7 | FMA (default) | 0.057 | 7 | FMA (default) | 0.057 |
| 8 | WAAPWLS (default) | 0.067 | 8 | WAAPWLS (default) | 0.067 |
| 9 | PEESE (default) | 0.067 | 9 | PEESE (default) | 0.067 |
| 10 | puniform (default) | 0.070 | 10 | puniform (default) | 0.070 |
| 11 | AK (AK2) | 0.079 | 11 | AK (AK2) | 0.074 |
| 12 | RoBMA (PSMA) | 0.090 | 12 | PETPEESE (default) | 0.091 |
| 13 | PETPEESE (default) | 0.091 | 13 | WILS (default) | 0.097 |
| 14 | WILS (default) | 0.097 | 14 | PET (default) | 0.105 |
| 15 | PET (default) | 0.105 | 15 | EK (default) | 0.105 |
| 16 | EK (default) | 0.105 | 16 | RoBMA (PSMA) | 0.109 |
| 17 | SM (3PSM) | 0.436 | 17 | SM (3PSM) | 0.414 |
| 18 | SM (4PSM) | 0.618 | 18 | SM (4PSM) | 0.661 |
| 19 | puniform (star) | 305.910 | 19 | puniform (star) | 305.910 |

The empirical SE is the standard deviation of the meta-analytic estimate across simulation runs. A lower empirical SE indicates less variability and better method performance.

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | puniform (star) | 1.717 | 1 | AK (AK2) | 1.346 |
| 2 | AK (AK1) | 1.951 | 2 | puniform (star) | 1.717 |
| 3 | EK (default) | 2.025 | 3 | AK (AK1) | 1.953 |
| 4 | RoBMA (PSMA) | 2.053 | 4 | EK (default) | 2.025 |
| 5 | WILS (default) | 2.094 | 5 | WILS (default) | 2.094 |
| 6 | PET (default) | 2.098 | 6 | PET (default) | 2.098 |
| 7 | SM (3PSM) | 2.262 | 7 | RoBMA (PSMA) | 2.140 |
| 8 | PETPEESE (default) | 2.446 | 8 | SM (3PSM) | 2.238 |
| 9 | WAAPWLS (default) | 2.507 | 9 | PETPEESE (default) | 2.446 |
| 10 | puniform (default) | 2.546 | 10 | WAAPWLS (default) | 2.507 |
| 11 | PEESE (default) | 2.736 | 11 | puniform (default) | 2.546 |
| 12 | AK (AK2) | 2.956 | 12 | PEESE (default) | 2.736 |
| 13 | SM (4PSM) | 3.798 | 13 | SM (4PSM) | 3.770 |
| 14 | trimfill (default) | 3.991 | 14 | trimfill (default) | 3.996 |
| 15 | WLS (default) | 4.658 | 15 | WLS (default) | 4.658 |
| 16 | FMA (default) | 5.182 | 16 | FMA (default) | 5.182 |
| 17 | RMA (default) | 7.051 | 17 | RMA (default) | 7.051 |
| 18 | mean (default) | 11.423 | 18 | mean (default) | 11.423 |
| 19 | pcurve (default) | NaN | 19 | pcurve (default) | NaN |

The interval score measures the accuracy of a confidence interval by combining its width and coverage. It penalizes intervals that are too wide or that fail to include the true value. A lower interval score indicates a better method.

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | SM (3PSM) | 0.672 | 1 | AK (AK2) | 0.691 |
| 2 | SM (4PSM) | 0.663 | 2 | SM (3PSM) | 0.659 |
| 3 | RoBMA (PSMA) | 0.648 | 3 | SM (4PSM) | 0.654 |
| 4 | puniform (star) | 0.641 | 4 | RoBMA (PSMA) | 0.645 |
| 5 | AK (AK2) | 0.596 | 5 | puniform (star) | 0.641 |
| 6 | puniform (default) | 0.510 | 6 | puniform (default) | 0.510 |
| 7 | AK (AK1) | 0.505 | 7 | AK (AK1) | 0.504 |
| 8 | WAAPWLS (default) | 0.444 | 8 | WAAPWLS (default) | 0.444 |
| 9 | WILS (default) | 0.432 | 9 | WILS (default) | 0.432 |
| 10 | PETPEESE (default) | 0.409 | 10 | PETPEESE (default) | 0.409 |
| 11 | EK (default) | 0.396 | 11 | EK (default) | 0.396 |
| 12 | PET (default) | 0.382 | 12 | PET (default) | 0.382 |
| 13 | trimfill (default) | 0.361 | 13 | trimfill (default) | 0.360 |
| 14 | PEESE (default) | 0.329 | 14 | PEESE (default) | 0.329 |
| 15 | WLS (default) | 0.218 | 15 | WLS (default) | 0.218 |
| 16 | FMA (default) | 0.179 | 16 | FMA (default) | 0.179 |
| 17 | RMA (default) | 0.149 | 17 | RMA (default) | 0.149 |
| 18 | mean (default) | 0.040 | 18 | mean (default) | 0.040 |
| 19 | pcurve (default) | NaN | 19 | pcurve (default) | NaN |

95% CI coverage is the proportion of simulation runs in which the 95% confidence interval contained the true effect. Ideally, this value should be close to the nominal level of 95%.

| Rank | Method | Value | Rank | Method | Value |
|---|---|---|---|---|---|
| 1 | FMA (default) | 0.088 | 1 | FMA (default) | 0.088 |
| 2 | WLS (default) | 0.122 | 2 | WLS (default) | 0.122 |
| 3 | PEESE (default) | 0.126 | 3 | PEESE (default) | 0.126 |
| 4 | trimfill (default) | 0.144 | 4 | trimfill (default) | 0.144 |
| 5 | mean (default) | 0.147 | 5 | mean (default) | 0.147 |
| 6 | RMA (default) | 0.147 | 6 | RMA (default) | 0.147 |
| 7 | PETPEESE (default) | 0.148 | 7 | PETPEESE (default) | 0.148 |
| 8 | AK (AK1) | 0.155 | 8 | AK (AK1) | 0.155 |
| 9 | WAAPWLS (default) | 0.163 | 9 | WAAPWLS (default) | 0.163 |
| 10 | WILS (default) | 0.186 | 10 | WILS (default) | 0.186 |
| 11 | PET (default) | 0.197 | 11 | PET (default) | 0.197 |
| 12 | EK (default) | 0.213 | 12 | EK (default) | 0.213 |
| 13 | puniform (default) | 0.270 | 13 | AK (AK2) | 0.252 |
| 14 | RoBMA (PSMA) | 0.337 | 14 | puniform (default) | 0.270 |
| 15 | puniform (star) | 0.422 | 15 | RoBMA (PSMA) | 0.333 |
| 16 | AK (AK2) | 0.725 | 16 | puniform (star) | 0.422 |
| 17 | SM (3PSM) | 1.035 | 17 | SM (3PSM) | 0.907 |
| 18 | SM (4PSM) | 1.711 | 18 | SM (4PSM) | 1.606 |
| 19 | pcurve (default) | NaN | 19 | pcurve (default) | NaN |

95% CI width is the average length of the 95% confidence interval for the true effect. A lower average 95% CI width indicates a better method, provided that coverage remains close to the nominal level.

| Rank | Method | Log Value | Rank | Method | Log Value |
|---|---|---|---|---|---|
| 1 | puniform (default) | 1.992 | 1 | puniform (default) | 1.992 |
| 2 | RoBMA (PSMA) | 1.581 | 2 | RoBMA (PSMA) | 1.344 |
| 3 | PET (default) | 0.804 | 3 | PET (default) | 0.804 |
| 4 | EK (default) | 0.802 | 4 | EK (default) | 0.802 |
| 5 | SM (3PSM) | 0.781 | 5 | PETPEESE (default) | 0.739 |
| 6 | PETPEESE (default) | 0.739 | 6 | SM (3PSM) | 0.737 |
| 7 | AK (AK2) | 0.451 | 7 | WILS (default) | 0.435 |
| 8 | WILS (default) | 0.435 | 8 | puniform (star) | 0.400 |
| 9 | puniform (star) | 0.400 | 9 | AK (AK2) | 0.241 |
| 10 | WAAPWLS (default) | 0.236 | 10 | WAAPWLS (default) | 0.236 |
| 11 | SM (4PSM) | 0.153 | 11 | SM (4PSM) | 0.157 |
| 12 | PEESE (default) | 0.093 | 12 | PEESE (default) | 0.093 |
| 13 | AK (AK1) | 0.071 | 13 | AK (AK1) | 0.071 |
| 14 | trimfill (default) | 0.035 | 14 | trimfill (default) | 0.035 |
| 15 | WLS (default) | 0.034 | 15 | WLS (default) | 0.034 |
| 16 | RMA (default) | 0.013 | 16 | RMA (default) | 0.013 |
| 17 | FMA (default) | 0.007 | 17 | FMA (default) | 0.007 |
| 18 | mean (default) | 0.000 | 18 | mean (default) | 0.000 |